By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

Can AI beat a human at the game of twenty questions? Yes.

And can a server enhanced by CXL beat an AI server without it? Yes, and by a wide margin.

While CXL technology was originally developed for general-purpose cloud servers, the technology is now finding a home in AI as a vehicle for economically and efficiently boosting the performance of AI infrastructure. To this end, Marvell has been conducting benchmark tests on different AI use cases.

In December, Marvell, Samsung and Liqid showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for conducting vector searches (for analyzing unstructured data within documents) by more than 5x.

In February, Marvell showed how a trio of Structera A CXL compute accelerators can process more queries per second than a cutting-edge server CPU and at a lower latency while leaving the host CPU open for different computing tasks.

Today, this blog post will show how Structera CXL memory expanders can boost performance of inference tasks.

AI and Memory Expansion

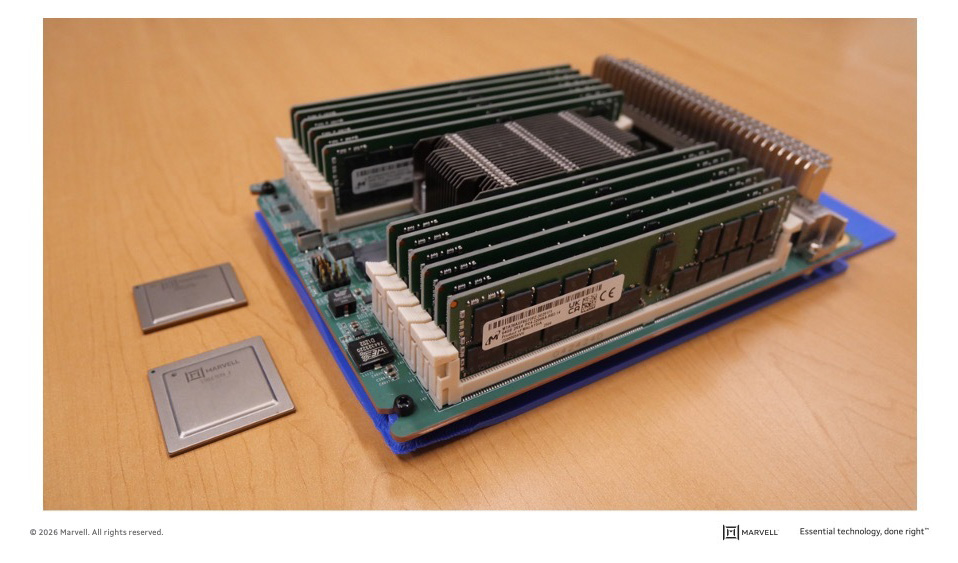

Unlike CXL compute accelerators, CXL memory expanders do not contain additional processing cores for near-memory computing. Instead, they supersize memory capacity and bandwidth. Marvell Structera X, released last year, provides a path for adding up to 4TB of DDR5 DRAM or 6TB of DDR4 DRAM to servers (12TB with integrated LZ4 compression) along with 200GB/second of additional bandwidth. Multiple Structera X modules, moreover, can be added to a single server; CXL modules slot into PCIe ports rather than the more limited DIMM slots used for memory.
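The capacity and bandwidth figures above lend themselves to a quick back-of-the-envelope calculation. The sketch below is illustrative only and rests on several assumptions that are not stated in the post: that the quoted numbers apply per module, that capacity and bandwidth scale linearly as modules are added, and that the 12TB figure corresponds to a 2x compression ratio on the 6TB DDR4 configuration. The names `ExpanderConfig` and `added_resources` are hypothetical and do not belong to any Marvell API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpanderConfig:
    """Per-module figures quoted in the post (assumed to be per module)."""
    dram_type: str
    capacity_tb: float             # maximum raw DRAM capacity in TB
    bandwidth_gb_s: float = 200.0  # additional bandwidth in GB/second


# Figures taken from the paragraph above.
DDR5 = ExpanderConfig("DDR5", 4.0)
DDR4 = ExpanderConfig("DDR4", 6.0)


def added_resources(cfg: ExpanderConfig, modules: int,
                    compression_ratio: float = 1.0) -> dict:
    """Estimate the memory capacity and bandwidth added to a server.

    Assumes linear scaling across modules (an assumption, not a
    measured result). compression_ratio is the effective gain from
    inline compression; 2.0 is inferred from the 6TB -> 12TB figure.
    """
    if modules < 1:
        raise ValueError("modules must be at least 1")
    return {
        "capacity_tb": cfg.capacity_tb * modules * compression_ratio,
        "bandwidth_gb_s": cfg.bandwidth_gb_s * modules,
    }


if __name__ == "__main__":
    # One DDR4 module with the assumed 2x compression ratio.
    print(added_resources(DDR4, modules=1, compression_ratio=2.0))
    # Two DDR5 modules without compression.
    print(added_resources(DDR5, modules=2))
```

Real-world gains depend on how compressible the data is and on how many PCIe lanes the server has available, so these numbers are best read as upper bounds.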

By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

While CXL technology was originally developed for general-purpose cloud servers, it is now emerging as a key enabler for boosting the performance and ROI of AI infrastructure.

The logic is straightforward. Training and inference require rapid access to massive amounts of data. However, the memory channels on today’s XPUs and CPUs struggle to keep pace, creating the so-called “memory wall” that slows processing. CXL breaks this bottleneck by leveraging available PCIe ports to deliver additional memory bandwidth, expand memory capacity and, in some cases, integrate near-memory processors. As an added advantage, CXL provides these benefits at a lower cost and lower power profile than the usual way of adding more processors.

To showcase these benefits, Marvell conducted benchmark tests across multiple use cases to demonstrate how CXL technology can elevate AI performance.

In December, Marvell and its partners showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for vector searches used to analyze unstructured data within documents by more than 5x.

Here’s another use case: deploying CXL to lower latency.

Lower Latency? Through CXL?

At first glance, lower latency and CXL might seem contradictory. Memory connected through a CXL device sits farther from the processor than memory connected via local memory channels. With standard CXL devices, this typically results in higher latency between CXL memory and the primary processor.

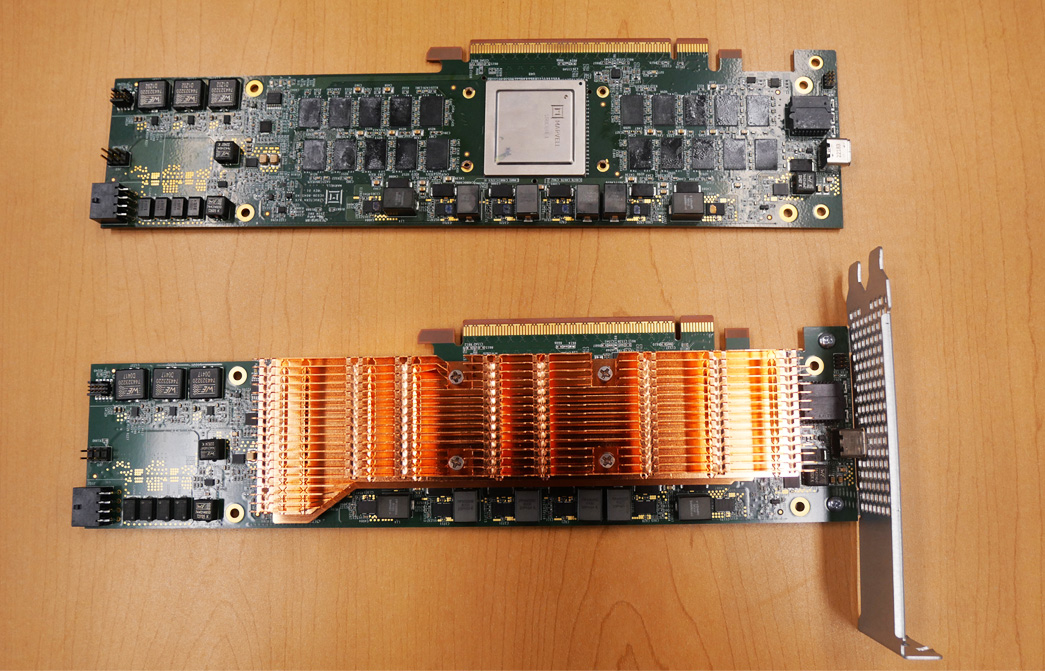

Marvell Structera A CXL memory accelerator boards with and without heat sinks.

By Sandeep Bharathi, president, Data Center Group, Marvell

This blog was originally posted at Fortune.

Semiconductors have transformed virtually every aspect of our lives. Now, the semiconductor industry is on the verge of a profound transformation itself.

Customized silicon, meaning chips uniquely tailored to meet the performance and power requirements of an individual customer for a particular use case, will become increasingly pervasive as data center operators and AI developers seek to harness the power of AI. Expanded educational opportunities, better decision making and ways to improve the sustainability of the planet all become possible if we get the computational infrastructure right.

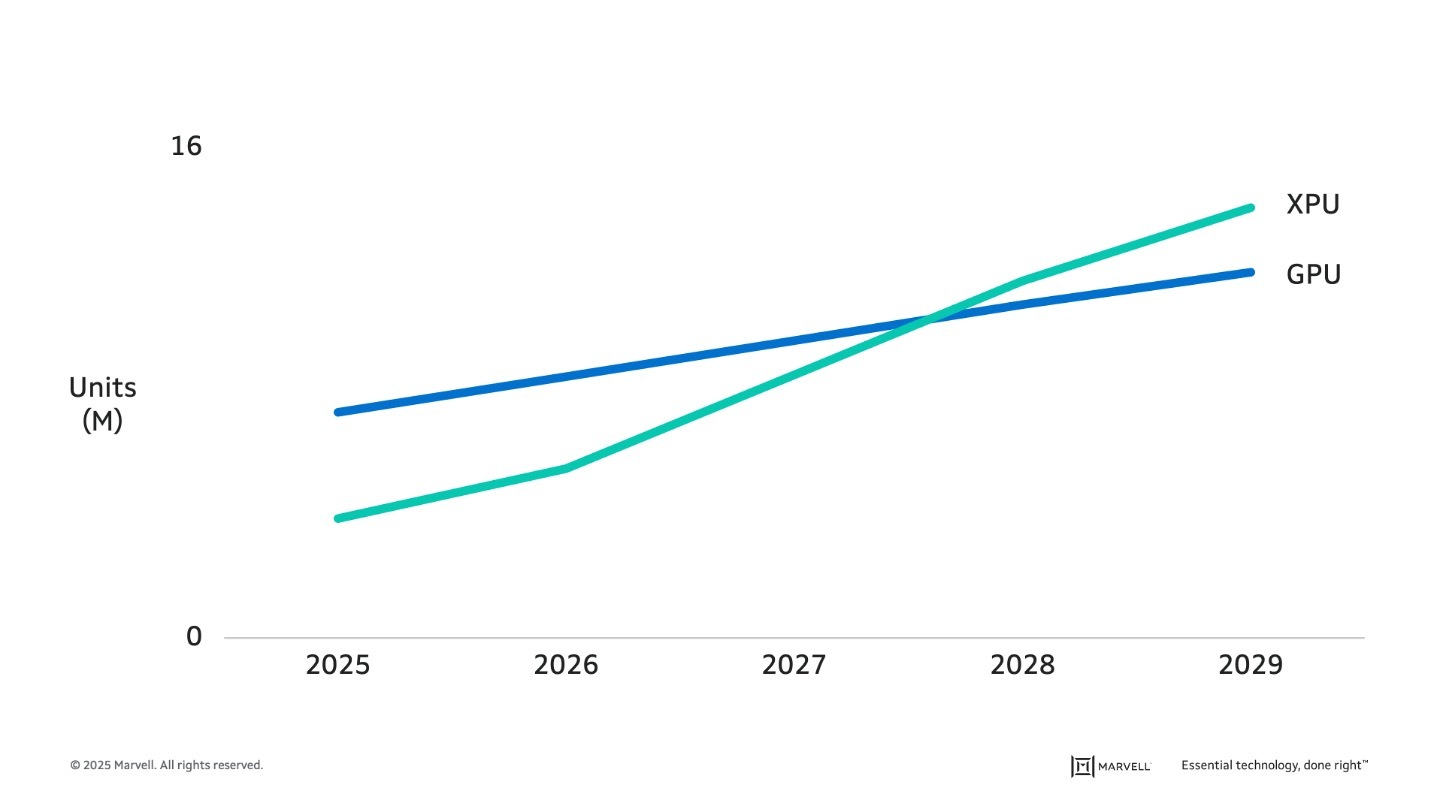

The turn to custom, in fact, is already underway. The number of GPUs—the merchant chips employed for AI training and inference—produced today is nearly double the number of custom XPUs built for the same tasks. By 2028, custom accelerators will likely pass GPUs in units shipped, with the gap expected to grow.1

By Michael Kanellos, Head of Influencer Relations, Marvell

Chiplets—devices made up of smaller, specialized cores linked together to function like a unified device—have dramatically transformed semiconductors over the past decade. Here’s a quick overview of their history and where the design concept goes next.

1. Initially, they went by the name RAMP

In 2006, Dave Patterson, the storied professor of computer science at UC Berkeley, and his lab published a paper describing how semiconductors would shift from monolithic silicon to devices where different dies are connected and combined into a package that, to the rest of the system, acts like a single device.1

While the paper also coined the term chiplet, the Berkeley team preferred RAMP (Research Accelerator for Multiple Processors).

2. In Silicon Valley fashion, the early R&D took place in a garage

Marvell co-founder and former CEO Sehat Sutardja started experimenting with combining different chips into a unified package in the 2010s in his garage, according to journalist Junko Yoshida.2 He unveiled the MoChi (Modular Chip) concept, often credited as the first commercial platform for chiplets, in a keynote at ISSCC in February 2015.3

The first products came out a few months later in October.

“The introduction of Marvell’s AP806 MoChi module is the first step in creating a new process that can change the way that the industry designs chips,” wrote Linley Gwennap in Microprocessor Report.4

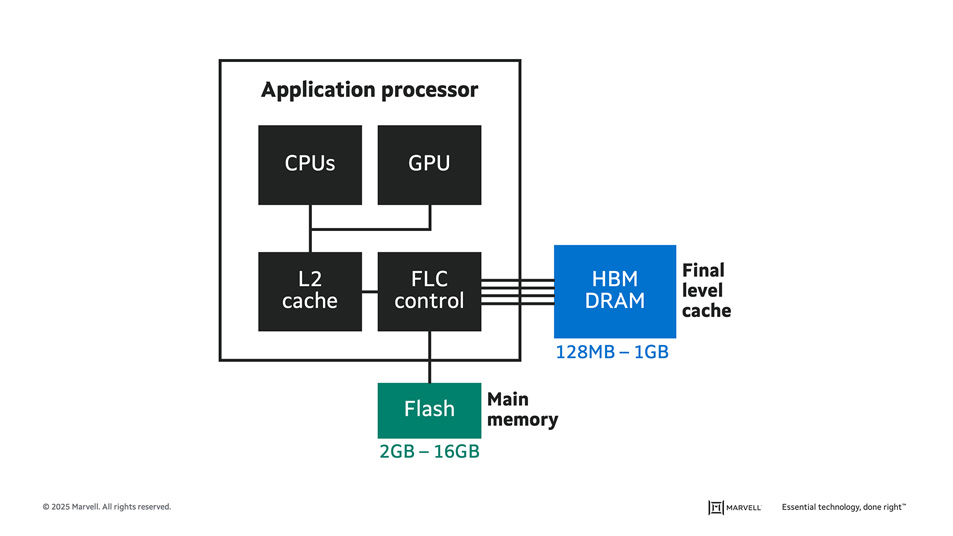

An early MoChi concept combining CPUs, a GPU and an FLC (final level cache) controller that distributes data across flash and DRAM to optimize power. Credit: Microprocessor Forum.

By Michael Kanellos, Head of Influencer Relations, Marvell

More customers, more devices, more technologies, and more performance: that, ultimately, is where custom silicon is headed. While Moore’s Law is still alive, customization is fast taking over as the engine for driving change, innovation and performance in data infrastructure. A growing universe of users and chip designers is embracing the trend. If you want to see what’s at the cutting edge of custom, the best chips to study are the compute devices for data centers, i.e., the XPUs, CPUs, and GPUs powering AI clusters and clouds. By 2028, custom computing devices are expected to account for $55 billion in revenue, or 25% of the market.1 Technologies developed for this segment will trickle down into others.

Here are three of the latest innovations from Marvell:

Multi-Die Packaging with RDL Interposers

Achieving performance and power gains by shrinking transistors is getting more difficult and expensive. “There has been a pretty pronounced slowing of Moore’s Law. For every technology generation we don’t get the doubling (of performance) that we used to get,” says Marvell’s Mark Kuemerle, Vice President of Technology, Custom Cloud Solutions. “Unfortunately, data centers don’t care. They need a way to increase performance every generation.”

Instead of shrinking transistors to get more of them into a finite space, chiplets effectively allow designers to stack cores on top of each other with the packaging serving as the vertical superstructure.

2.5D packaging, debuted by Marvell in May, increases the effective amount of compute silicon for a given space by 2.8 times.2 At the same time, the RDL interposer connects the dies more efficiently. In conventional chiplets, a single interposer spans the floor space of the chips it connects as well as any area between them. If two computing cores are on opposite sides of a chiplet package, the interposer will cover the entire space.

Marvell® RDL interposers, by contrast, are form-fitted to individual computing die with six layers of interconnects managing the connections.

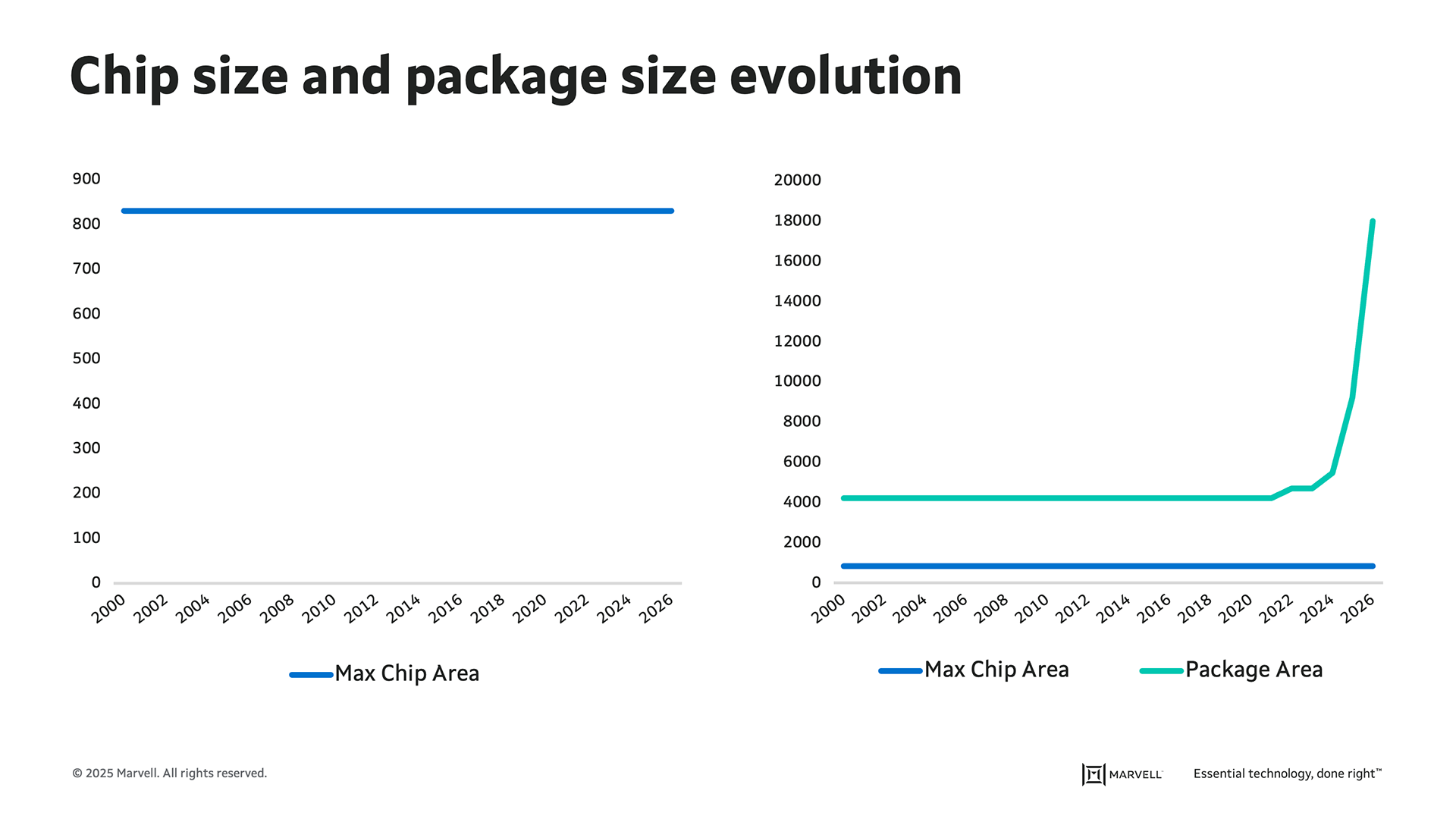

2.5D and multilayer packaging. With current manufacturing technologies, chips can achieve a maximum area of just over 800 sq. mm. By stacking them, the total number of transistors in an XY footprint can grow far beyond that single-die limit. Within these packages, RDL interposers are the elevator shafts, providing connectivity between and across layers in a space-efficient manner.