2006: The Twelve Months That Changed the Chip Industry

The semiconductor market is vastly different from what it was a few years ago. Cloud service providers want custom silicon and are collaborating with partners on designs. Chiplets and 3D devices, long discussed in the future tense, are a growing sector of the market. Moore’s Law? It’s still alive, but manufacturers and designers are sustaining it by means other than simply shrinking transistors.

And by sheer coincidence, many of the forces propelling these changes emerged in the same year: 2006.

The Magic of Scaling Slows.

Moore’s Law has slowed, but semiconductor companies can still shrink transistors at a somewhat predictable cadence.

What changed is the payoff from each shrink. Under so-called “Dennard Scaling,” smaller transistors let chip designers raise clock speed, cut power, or both. In practical terms, PC makers, phone designers and software developers could plan on a steady stream of hardware advances.

Dennard Scaling effectively stopped in 2006[1]. New technologies for keeping the hamster wheel spinning needed to be found, and fast.
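For readers who want the arithmetic behind the term, here is the textbook first-order model of constant-field (Dennard) scaling. It is a simplified sketch, not a description of any particular process node. Assume every linear dimension (channel length L, width W, oxide thickness t_ox) and the supply voltage V shrink by a factor κ > 1, and write dynamic switching power per transistor as P = αCV²f, where α (activity factor), C (switched capacitance), f (clock frequency) and A (area per transistor) are the conventional symbols rather than figures from this article.

```latex
\begin{aligned}
&\text{Constant-field scaling by } \kappa > 1: \quad
  L \to \tfrac{L}{\kappa},\; W \to \tfrac{W}{\kappa},\; t_{ox} \to \tfrac{t_{ox}}{\kappa},\;
  V \to \tfrac{V}{\kappa},\; C \to \tfrac{C}{\kappa},\; f \to \kappa f \\[4pt]
&P' = \alpha \left(\tfrac{C}{\kappa}\right)\left(\tfrac{V}{\kappa}\right)^{2}(\kappa f)
  = \frac{\alpha C V^{2} f}{\kappa^{2}} = \frac{P}{\kappa^{2}},
  \qquad A' = \frac{A}{\kappa^{2}} \\[4pt]
&\frac{P'}{A'} = \frac{P/\kappa^{2}}{A/\kappa^{2}} = \frac{P}{A}
  \qquad \text{(faster clocks at constant power density)} \\[4pt]
&\text{If } V \text{ stops scaling:}\quad
  P'' = \alpha \left(\tfrac{C}{\kappa}\right) V^{2} (\kappa f) = P,
  \qquad \frac{P''}{A'} = \kappa^{2}\,\frac{P}{A}
\end{aligned}
```

With κ ≈ 1.4 per node (roughly halving transistor area), holding voltage flat while clocks keep climbing would double power density every generation. In this simplified model, that is why designers largely stopped chasing clock speed once supply voltage could no longer drop much further. The model ignores leakage power, which in practice grows as threshold voltages are lowered.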

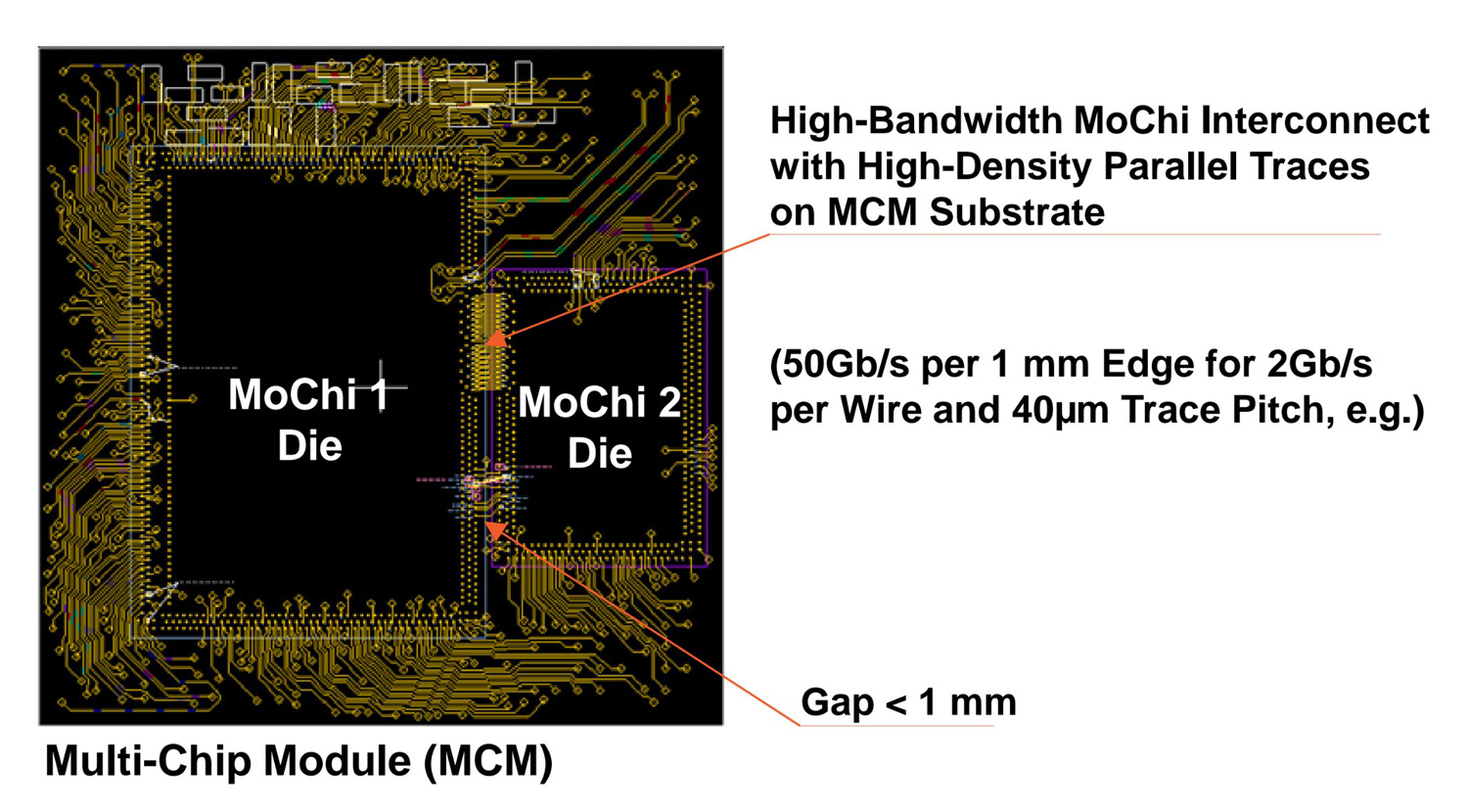

The term chiplet was coined in 2006 in a paper from the Patterson lab at UC Berkeley. Around the same time, a Marvell co-founder began tinkering with the idea of multi-chip packages. In 2015, Marvell’s MoChi concept ushered in the first commercial chiplets, along with a precursor to high bandwidth memory (HBM).

GPUs Went to Work.

On November 8, 2006[2], NVIDIA unveiled the G80 GPU, its first GPU targeted at HPC and general-purpose computing. Marketed as a 90nm parallel co-processor, it sported a then-whopping 686 million transistors[3].

NVIDIA also created CUDA to simplify programming GPUs. By 2012, GPU-powered supercomputers topped the TOP500 list[4]. Today, nearly 90%[5] of systems on the list have GPUs.

XPUs Were Born.

GPUs can outperform CPUs in training and inference, but devices optimized around specific workloads can outperform both CPUs and GPUs on those workloads. The IBM Cell processor and the Cavium line of security processors led the first wave of optimized chips in the early 2000s.

After reading an article[6] about the rise of probabilistic computing in AI, MIT student Ben Vigoda changed the focus of his PhD thesis and, in 2006, founded Lyric Semiconductor[7]. The company set out to build devices that calculate probabilities directly, reducing the cost, power and size of the hardware needed for tasks such as deep learning recommendation models or fraud detection. Lyric came out of stealth mode in 2010, and Analog Devices bought it in 2011. (Vigoda also beat Google to the punch: the search giant mulled the idea of TPUs in 2006 before moving forward on the concept in 2013[8].)

For the rest of the story, go to EE Times.

1. University of California Irvine, Wikipedia.

2. ExtremeTech, Nov 2016.

3. Next Platform, Feb 2018.

4. Data Center Knowledge, Nov 2012.

5. Next Platform, May 2024.

6. CNET, Dec 2003.

7. GTM, Aug 2010.

8. Google, May 2017.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this article. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Copyright © 2026 Marvell, All rights reserved.