Archive for the 'Ethernet Adapters and Controllers' Category

-

June 13, 2023

FC-NVMe Goes Mainstream in HPE's Next-Generation Block Storage

By Todd Owens, Field Marketing Director, Marvell

While Fibre Channel (FC) has been around for a couple of decades now, the Fibre Channel industry continues to develop the technology in ways that keep it at the forefront of the data center for shared storage connectivity. Fibre Channel has always been reliable, and continued innovations in performance, security and manageability have made Fibre Channel I/O the go-to connectivity option for business-critical applications that leverage the most advanced shared storage arrays.

A recent development that highlights the progress and significance of Fibre Channel is Hewlett Packard Enterprise's (HPE) announcement of the latest offering in its Storage as a Service (SaaS) lineup, with 32Gb Fibre Channel connectivity. HPE GreenLake for Block Storage MP, powered by HPE Alletra Storage MP hardware, is a next-generation platform connected to the storage area network (SAN) using either traditional SCSI-based FC or NVMe over FC connectivity. This solution not only gives customers highly scalable capabilities but also delivers cloud-like management, allowing HPE customers to consume block storage any way they want: own and manage it, outsource management, or consume it on demand.

HPE GreenLake for Block Storage powered by Alletra Storage MP

At launch, HPE is providing FC connectivity from this storage system to the host servers and supporting both FC-SCSI and native FC-NVMe. HPE plans to provide additional connectivity options in the future, but the fact that it prioritized FC connectivity speaks volumes about customer demand for mature, reliable, low-latency FC technology.

-

June 02, 2021

Breaking Digital Logjams with NVMe

By Ian Sagan, Marvell Field Applications Engineer; Jacqueline Nguyen, Marvell Field Marketing Manager; and Nick De Maria, Marvell Field Applications Engineer

-

January 29, 2021

By Amir Bar-Niv, VP of Marketing, Automotive Business Unit, Marvell; and John Bergen, Sr. Product Marketing Manager, Automotive Business Unit, Marvell

Some one hundred and sixty years later, as Marvell and its competitors race to reinvent the world's transportation networks, universal design standards are more important than ever. Recently, Marvell's 88Q5050 Ethernet Device Bridge became the first device of its type in the automotive industry to receive Avnu certification, meeting exacting new technical standards that facilitate the exchange of information between the diverse in-car networks that enable today's data-dependent vehicles to operate smoothly, safely and reliably.

-

August 27, 2020

How to Get the Benefits of NVMe over Fabrics in 2020

By Todd Owens, Field Marketing Director, Marvell

As native Non-Volatile Memory Express (NVMe®) shared-storage arrays continue to enhance our ability to store and access more information faster across a much bigger network, customers of all sizes (enterprise, mid-market and SMBs) confront a common question: what is required to take advantage of this quantum leap forward in speed and capacity?

NVMe provides an efficient command set specific to memory-based storage, delivers increased performance because it is designed to run over PCIe 3.0 or PCIe 4.0 bus architectures, and, by offering up to 64K command queues with up to 64K commands per queue, can provide much more scalability than other storage protocols.
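The scalability claim is easiest to see as arithmetic. The sketch below multiplies queues by queue depth to get an upper bound on commands in flight, and compares NVMe with legacy AHCI/SATA (one queue, 32 commands deep), which is our chosen baseline rather than one named in the post. It assumes the spec maximums usually rounded to "64K": 65,535 I/O queues and 65,536 entries per queue. Real devices expose far fewer queues.

```python
# Spec maximums usually quoted as "64K" (assumed here): 65,535 I/O queues,
# each up to 65,536 entries deep.
NVME_MAX_IO_QUEUES = 65_535
NVME_MAX_QUEUE_DEPTH = 65_536

# Legacy AHCI/SATA baseline for comparison: one queue, 32 commands (NCQ).
AHCI_MAX_QUEUES = 1
AHCI_MAX_QUEUE_DEPTH = 32


def max_outstanding(queues: int, depth: int) -> int:
    """Upper bound on commands that can be outstanding at the same time."""
    return queues * depth


nvme = max_outstanding(NVME_MAX_IO_QUEUES, NVME_MAX_QUEUE_DEPTH)
ahci = max_outstanding(AHCI_MAX_QUEUES, AHCI_MAX_QUEUE_DEPTH)
print(f"NVMe: {nvme:,} outstanding commands")  # NVMe: 4,294,901,760 outstanding commands
print(f"AHCI: {ahci:,} outstanding commands")  # AHCI: 32 outstanding commands
print(f"Ratio: {nvme // ahci:,}x")             # Ratio: 134,215,680x
```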

-

August 19, 2020

By Todd Owens, Field Marketing Director, Marvell

-

July 28, 2020

Pushing Network Processing to the Edge: Security

By Alik Fishman, Director of Product Management, Marvell

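In earlier installments of the "Pushing Network Processing to the Edge" series, we explored the trends driving intelligence, performance and telemetry toward the network edge. This installment looks at the changing role of network security and at ways to integrate security functions into network access. These approaches can simplify policy enforcement, protection and remediation across the entire infrastructure.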

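With the rapid spread of new data sources such as mobile and IoT devices in the workplace, the volume of hacked and leaked data has grown dramatically. Many enterprises have suffered, and cybersecurity threats have become a daily headache. Security breaches are also evolving: they are growing not only in number but also in severity and duration, with the average lifecycle from intrusion to containment approaching one year,[1] which imposes heavy costs on operations. Meanwhile, digital transformation and the emerging technology landscape (remote access, cloud-native models, the proliferation of IoT devices and more) have had a major impact on network architectures and operations and have created new security risks. Sweeping changes to enterprise infrastructure that improve network intelligence, performance, visibility and security[2] are now urgently needed.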

-

May 13, 2019

FastLinQ® NIC + Red Hat SDN

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

A bit of validation once in a while is good for all of us, and that's true whether you are the one providing it or the one receiving it. Most of the time, I'm the one giving validation rather than receiving it. For example, a few days ago my wife tried on a new dress and asked me, "How do I look?" Now, of course, we all know there is only one way to answer a question like that, at least if you want to avoid sleeping on the couch.

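Recently, the Marvell team's efforts were validated by one of our partners. Our FastLinQ 45000/41000 series of high-performance Ethernet Network Interface Controllers (NICs), which support 10/25/50/100GbE operation, are now qualified for Red Hat Fast Datapath (FDP) 19.B.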

Figure 1: Marvell FastLinQ 45000 and 41000 Series Ethernet Adapters

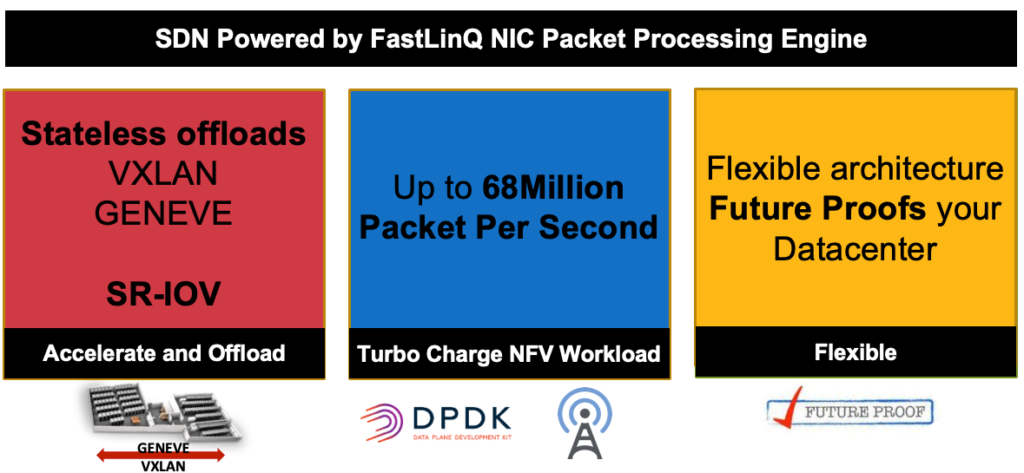

Red Hat FDP is used across an extensive range of products in the Red Hat portfolio, such as the Red Hat OpenStack Platform (RHOSP), Red Hat OpenShift Container Platform and Red Hat Virtualization (RHV). FDP qualification means that FastLinQ can now address a far broader range of open-source Software Defined Networking (SDN) use cases, including Open vSwitch (OVS), Open vSwitch with the Data Plane Development Kit (OVS-DPDK), Single Root Input/Output Virtualization (SR-IOV) and Network Functions Virtualization (NFV).
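For the SR-IOV use case, Linux exposes a generic, driver-agnostic sysfs interface: the files `sriov_totalvfs` and `sriov_numvfs` under a NIC's PCI device directory report and set the number of virtual functions (VFs). The sketch below wraps that kernel interface; it is not a FastLinQ- or Red Hat-specific tool, the interface name `ens1f0` and the helper `set_num_vfs` are hypothetical, and writing to sysfs requires root. The kernel refuses to change a non-zero VF count directly, so the helper resets it to 0 first.

```python
from pathlib import Path


def set_num_vfs(iface: str, num_vfs: int, sysfs_root: str = "/sys/class/net") -> int:
    """Enable num_vfs SR-IOV virtual functions on a NIC via Linux sysfs.

    Returns the VF count the kernel reports afterwards.
    """
    dev = Path(sysfs_root) / iface / "device"
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{iface} supports 0..{total} VFs, got {num_vfs}")

    numvfs_file = dev / "sriov_numvfs"
    current = int(numvfs_file.read_text().strip())
    if current == num_vfs:
        return current  # nothing to change

    # Changing a non-zero VF count directly is rejected (EBUSY),
    # so disable all VFs before requesting the new count.
    if current:
        numvfs_file.write_text("0")
    if num_vfs:
        numvfs_file.write_text(str(num_vfs))
    return int(numvfs_file.read_text().strip())
```

Usage on a real host would be `set_num_vfs("ens1f0", 8)`; the `sysfs_root` parameter exists only so the logic can be exercised against a scratch directory.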

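Marvell engineers worked closely with their Red Hat counterparts on this project to ensure that the FastLinQ feature set works with the FDP product channel. The collaboration involved many hours of complex, in-depth testing. With FDP 19.B qualification, Marvell FastLinQ Ethernet adapters enable seamless SDN deployments on RHOSP 14, RHEL 8.0, RHEV 4.3 and OpenShift 3.11.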

Our FastLinQ 45000/41000 Ethernet adapters are widely regarded as the "Swiss Army knife" of data networking, thanks to their highly flexible, programmable architecture. This architecture delivers up to 68 million small packets per second, supports up to 240 SR-IOV virtual functions (VFs), and supports tunneling while preserving stateless offloads. With these adapters, customers have the hardware they need for seamless deployment and can effectively manage demanding network workloads in an increasingly virtualized landscape. They also support Universal RDMA (concurrent RoCE, RoCEv2 and iWARP operation), and unlike most competing NICs, they deliver a high degree of scalability and flexibility.
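To put the 68-million-packets-per-second figure in context, the standard Ethernet line-rate arithmetic below computes how many minimum-size (64-byte) frames each link speed can carry, counting the 8-byte preamble/start-of-frame delimiter and the 12-byte inter-frame gap that each frame also occupies on the wire. This is a back-of-the-envelope sketch, not a Marvell benchmark.

```python
# Each frame also occupies 8 bytes of preamble/SFD plus a 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_gbps: float, frame_bytes: int = 64) -> float:
    """Maximum frames per second a link can carry at the given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


for speed in (10, 25, 50, 100):
    print(f"{speed:>3}GbE, 64-byte frames: {line_rate_pps(speed) / 1e6:6.2f} Mpps")

# Time available to handle each packet at the quoted 68 Mpps.
budget_ns = 1e9 / 68e6
print(f"{budget_ns:.1f} ns per packet at 68 Mpps")
```

The loop prints roughly 14.88, 37.20, 74.40 and 148.81 Mpps, and at 68 Mpps each packet gets about 14.7 ns of processing time.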

It feels great to be validated. Heartfelt congratulations to the Red Hat and Marvell teams!

-

February 20, 2019

NVMe/TCP: Simplicity Is the Key to Innovation

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

Whether it is the aesthetics of the iPhone or a work of art like Monet's "Water Lilies", simplicity is often a very attractive trait. I hear this resonate in everyday examples from my own life: my boss at work, whose mantra is "make it simple", and my wife of 15 years telling my teenage daughter that "beauty lies in simplicity". For the record, both of these statements generally fall on deaf ears.

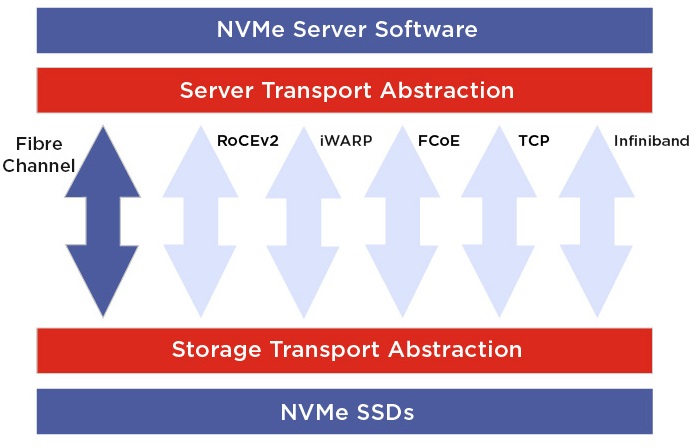

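Non-Volatile Memory Express (NVMe), the technology now driving data storage forward, is another area where the value of simplicity is starting to be recognized, especially as deployments grow with the emergence of NVMe-over-Fabrics (NVMe-oF) topologies. The simple, trusted Transmission Control Protocol (TCP), running over Ethernet, has now been approved by NVM Express as a standard NVMe-oF transport [1].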

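For some background: broadly speaking, NVMe makes efficient use of flash-based solid-state drives (SSDs) by combining a high-speed interface (such as PCIe) with a streamlined command set designed specifically for flash. By definition, however, NVMe is confined to a single server, which makes it difficult to scale NVMe out and access it from anywhere in the data center. This is where NVMe-oF comes in. All-flash arrays (AFAs), Just a Bunch of Flash (JBOF) or Fabric-attached Bunch of Flash (FBOF) enclosures, and software-defined storage (SDS) architectures can all incorporate an NVMe-oF-based front end, greatly improving how efficiently servers, clients and applications access external storage resources.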

A series of "fabrics" has now emerged for scaling out NVMe. The first was Ethernet Remote Direct Memory Access (RDMA), in both its RDMA over Converged Ethernet (RoCE) and Internet Wide-Area RDMA Protocol (iWARP) forms. It was soon followed by NVMe over Fibre Channel (FC-NVMe), and then by fabrics based on FCoE, InfiniBand and Omni-Path.

But with so many fabric options already out there, why is it necessary to come up with another one? Do we really need NVMe-over-TCP (NVMe/TCP) too? RDMA-based NVMe fabrics (whether RoCE or iWARP) are supposed to deliver the extremely low latency that NVMe requires through a range of techniques, such as zero copy and kernel bypass, driven by specialized Network Interface Controllers (NICs). However, several factors work against this, and they need to be taken into account.

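- First, most of the early fabrics (such as RoCE/iWARP) have no installed base of storage networks worth mentioning (Fibre Channel is a notable exception). For example, of the 12 million 10GbE+ NIC ports running in enterprise data centers today, fewer than 5% are RDMA-capable (by my rough estimate).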

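- Second, the most popular RDMA protocol (RoCE) requires a lossless network, which in turn requires highly skilled, and more highly paid, network engineers. More frustrating still, even then the protocol is prone to congestion problems.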

- Finally, and perhaps most tellingly, the two RDMA protocols (RoCE and iWARP) are not compatible with each other.

Unlike any other NVMe fabric, TCP is pervasive: it is absolutely everywhere. TCP/IP is the foundation of the Internet, and every Ethernet NIC and network out there supports TCP. With TCP, availability and reliability are simply not issues to worry about. Extending the scale of NVMe over a TCP fabric seems like the logical thing to do.
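Part of that simplicity is that an NVMe/TCP target can be attached with standard host tooling. On Linux, the `nvme-cli` utility's `connect` command takes the transport (`tcp`), the target address (`--traddr`), the service port (`--trsvcid`) and the subsystem NQN (`--nqn`). The Python sketch below only assembles that command line; the helper name, target address and NQN are hypothetical placeholders, port 4420 is the IANA-assigned NVMe-oF port, and the host also needs the `nvme-tcp` kernel module loaded before the command will succeed.

```python
import shlex

NVME_OF_PORT = 4420  # IANA-assigned port for NVMe over Fabrics I/O


def nvme_tcp_connect_cmd(traddr: str, subsys_nqn: str, port: int = NVME_OF_PORT) -> list:
    """Build an nvme-cli argument list that attaches a remote NVMe/TCP subsystem."""
    return [
        "nvme", "connect",
        "--transport", "tcp",      # NVMe/TCP rather than RDMA or FC
        "--traddr", traddr,        # target IP address
        "--trsvcid", str(port),    # target TCP port
        "--nqn", subsys_nqn,       # NVMe Qualified Name of the subsystem
    ]


# Hypothetical target: documentation-range IP and an example NQN.
cmd = nvme_tcp_connect_cmd("192.0.2.10", "nqn.2019-02.com.example:array1")
print(shlex.join(cmd))
```

Returning an argument list rather than a shell string keeps it safe to pass directly to `subprocess.run(cmd, check=True)` on a host where `nvme-cli` is installed.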

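NVMe/TCP is fast (especially with Marvell FastLinQ 10/25/50/100GbE NICs, which have built-in full offload for NVMe/TCP), it takes full advantage of existing infrastructure, and it keeps things simple. That is an attractive prospect for every technologist, and an appealing one for budget-conscious CIOs too.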

In the long run, simplicity wins once again!

[1] https://nvmexpress.org/welcome-nvme-tcp-to-the-nvme-of-family-of-transports/

-

October 18, 2018

Looking to Converge? HPE Launches the Next Generation of Marvell FastLinQ CNAs

By Todd Owens, Field Marketing Director, Marvell

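Converging network and storage I/O onto a single adapter reduces the number of connections required, which can significantly lower costs in small and medium-sized data centers. Fewer adapter ports mean fewer cables, optics and switch ports consumed, all of which reduce data center operating expenses (OPEX). By deploying converged network adapters (CNAs), customers get not only network connectivity but also storage offloads for iSCSI and FCoE.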

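HPE recently introduced two new CNAs based on Marvell® FastLinQ® 41000 Series technology. The HPE StoreFabric CN1200R 10GBASE-T Converged Network Adapter and the HPE StoreFabric CN1300R 10/25Gb Converged Network Adapter are the newest additions to the HPE CNA portfolio. These HPE StoreFabric CNAs support Remote Direct Memory Access (RDMA) technology concurrently with storage offloads.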

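It is no secret that the amount of data generated, all of which needs storage, keeps growing. Recently, the number of iSCSI-connected storage devices in mid-market, branch office and campus environments has increased. iSCSI is well suited to these environments because it is easy to deploy, runs over standard Ethernet, and is supported by a variety of new iSCSI storage products, such as Nimble and MSA all-flash arrays (AFAs).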

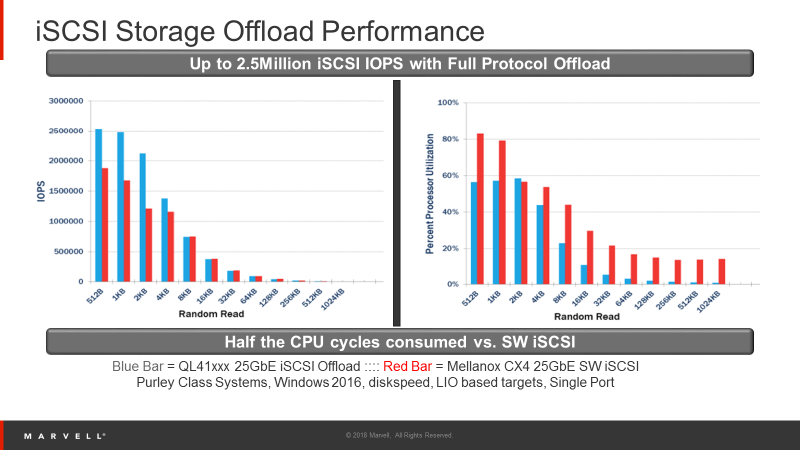

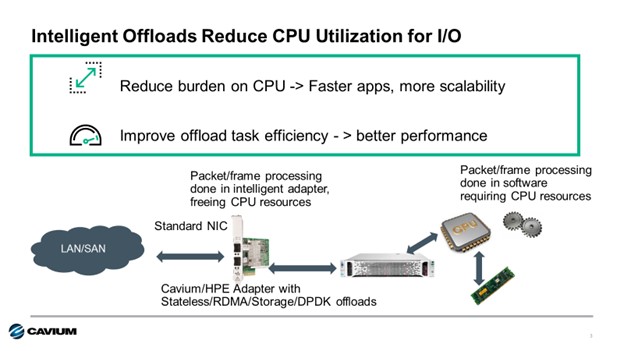

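One challenge with iSCSI is the server CPU load created by processing storage traffic when using a software initiator, the common method of storage connectivity. To address this, storage administrators can instead use a CNA with full hardware offload of the iSCSI protocol, which moves the burden of processing storage I/O from the CPU to the adapter.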

Figure 1: Benefits of Adapter Offloads

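As Figure 1 shows, Marvell-driven testing found that using hardware offloads in FastLinQ 10/25GbE adapters can reduce CPU utilization by as much as 50% compared with an Ethernet NIC using a software initiator. With that load lifted from the CPU, you can add more virtual machines per server and potentially reduce the number of physical servers required. Intelligent I/O adapters such as Marvell's can deliver substantial TCO savings.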
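How offload savings translate into server capacity can be sketched with simple arithmetic. Every input here is hypothetical: a 32-core server that spends 20% of its CPU time on software-initiator iSCSI processing, and an offload that removes half of that work (one reading of "as much as 50%"). The helper `cores_freed` is illustrative only; real savings depend on the workload and should be measured.

```python
def cores_freed(total_cores: int, storage_util: float, reduction: float) -> float:
    """Cores returned to applications when an offload cuts storage CPU work.

    storage_util: fraction of total CPU time spent on software storage I/O.
    reduction:    fraction of that work the adapter offload removes.
    """
    if not (0.0 <= storage_util <= 1.0 and 0.0 <= reduction <= 1.0):
        raise ValueError("storage_util and reduction must be fractions in [0, 1]")
    return total_cores * storage_util * reduction


# Hypothetical server: 32 cores, 20% of CPU time on iSCSI, half of it offloaded.
freed = cores_freed(total_cores=32, storage_util=0.20, reduction=0.50)
print(f"{freed:.1f} cores freed")  # 3.2 cores freed
```

Multiplied across a cluster, freed cores like these are what allow more VMs per server or fewer servers overall.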

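Another challenge is the latency associated with Ethernet connections. RDMA technology now addresses this. iWARP, RDMA over Converged Ethernet (RoCE) and iSCSI Extensions for RDMA (iSER) all bypass the O/S kernel software stack, giving the adapter direct access to memory. This speeds up transactions and reduces overall I/O latency, resulting in better performance and faster applications.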

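The new HPE StoreFabric CNAs are ideal for HPE ProLiant and Apollo customers who want to converge network and iSCSI storage traffic. The HPE StoreFabric CN1300R 10/25GbE CNA provides ample bandwidth that can be allocated to network and storage traffic simultaneously. In addition, this adapter supports Universal RDMA (both iWARP and RoCE) as well as iSER, so latency for both network and storage traffic is significantly lower than with standard network adapters.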

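The HPE StoreFabric CN1300R also supports Marvell SmartAN™ technology, which lets the adapter configure itself automatically when moving between 10GbE and 25GbE networks. This matters because at 25GbE, forward error correction (FEC) may be required depending on the cable used, and there are two different types of FEC, which adds complexity. To eliminate that complexity, SmartAN™ automatically configures the adapter to match the FEC, cable and switch settings for either 10GbE or 25GbE connectivity, with no user intervention.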

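If budget is a key consideration, the HPE StoreFabric CN1200R is a great choice. Supporting 10GBASE-T connectivity, this adapter uses RJ-45 connectors to attach to existing CAT6A copper cabling, eliminating the need for expensive DAC cables or optical transceivers. The StoreFabric CN1200R also supports RDMA protocols (iWARP, RoCE and iSER) to reduce overall latency.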

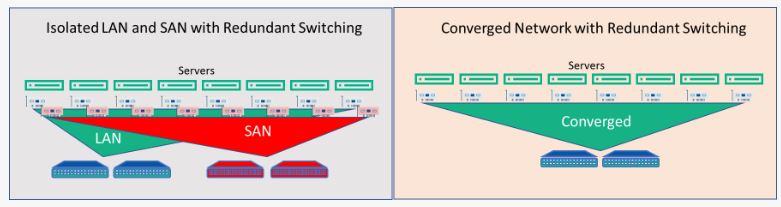

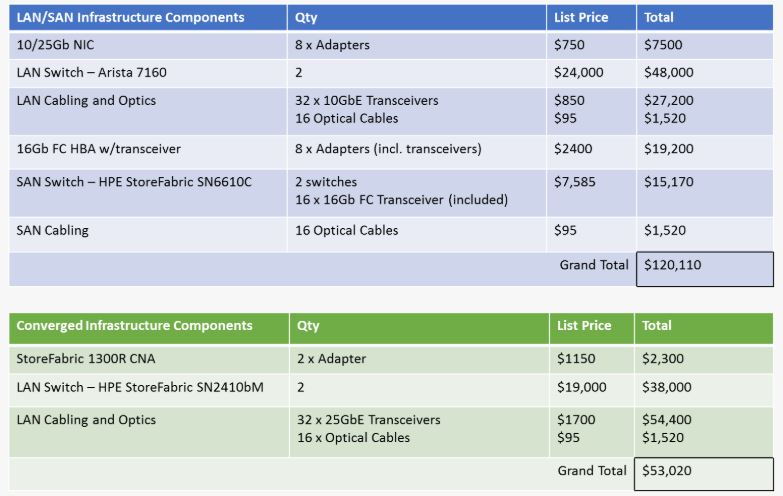

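But why converge? It comes down to a trade-off between cost and performance. If we calculate and compare the cost of deploying separate LAN and storage networks against a converged network, we find that converged I/O greatly reduces infrastructure complexity and can cut acquisition costs in half. In addition, managing one network instead of two saves more in long-term costs.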

Figure 2: Eight-Server Network Infrastructure Comparison

In this pricing scenario, we look at eight servers connecting to separate LAN and SAN environments versus connecting to a single converged environment, as shown in Figure 2.
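The connection savings behind this comparison can be approximated with simple arithmetic. The sketch assumes, hypothetically, that each server in the separate design uses two Ethernet ports plus two dedicated SAN ports (for example Fibre Channel) for redundancy, while each converged server carries both traffic types on two CNA ports. The configuration behind Figure 2 and Table 1 may differ, and no prices are modeled.

```python
from dataclasses import dataclass


@dataclass
class ServerIO:
    lan_ports: int  # Ethernet (or CNA) ports per server
    san_ports: int  # dedicated storage-network ports per server


def connections(servers: int, io: ServerIO) -> dict:
    """Every adapter port needs one cable and one switch port."""
    ports = servers * (io.lan_ports + io.san_ports)
    return {"adapter_ports": ports, "cables": ports, "switch_ports": ports}


separate = connections(8, ServerIO(lan_ports=2, san_ports=2))
converged = connections(8, ServerIO(lan_ports=2, san_ports=0))  # CNAs carry both
print(separate)   # {'adapter_ports': 32, 'cables': 32, 'switch_ports': 32}
print(converged)  # {'adapter_ports': 16, 'cables': 16, 'switch_ports': 16}
```

Under these assumptions convergence halves every connection count; if the links are optical, each cable saved also removes two transceivers.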

Table 1: LAN/SAN versus Converged Infrastructure Price Comparison

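The converged environment is priced 55% lower than the separate-network approach. The downside is the potential storage performance impact of moving from a Fibre Channel SAN in a separate network environment to converged iSCSI. iSCSI performance can be optimized by using Data Center Bridging, Priority Flow Control and RoCE RDMA to create a lossless Ethernet environment. This does add considerable network complexity, but it improves iSCSI performance by reducing interruptions to storage traffic.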

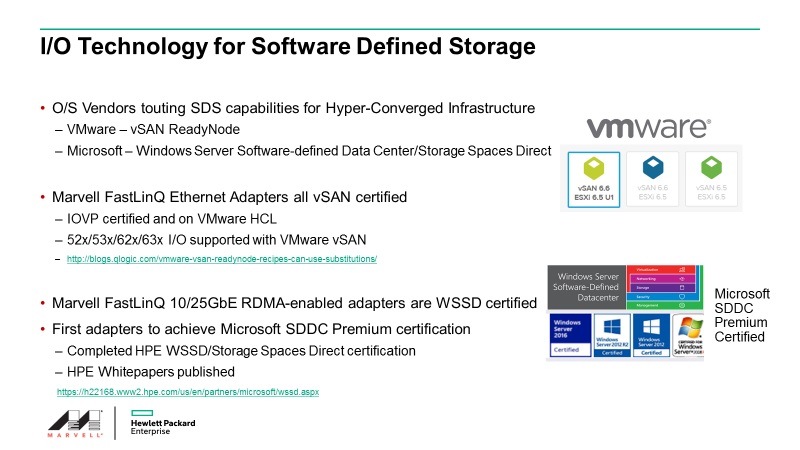

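These new adapters have other use cases as well, such as hyperconverged infrastructure (HCI) deployments. HCI uses software-defined storage, meaning the storage inside the servers is shared across the network. Common deployments include Windows Storage Spaces Direct (S2D) and VMware vSAN ReadyNodes. Both the HPE StoreFabric CN1200R 10GBASE-T and CN1300R 10/25GbE CNAs are certified for these HCI deployments.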

Figure 3: FastLinQ Technology Certified for Microsoft WSSD and VMware vSAN Ready Node

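In summary, the new CNAs from the HPE StoreFabric team deliver high-performance, low-cost connectivity for converged environments. With support for 10Gb and 25Gb Ethernet bandwidth, iWARP and RoCE RDMA, and automatic negotiation between 10GbE and 25GbE connections through SmartAN™ technology, they are ideal I/O connectivity options for small and medium server and storage networks. To get the most from your server investment, choose Marvell FastLinQ Ethernet I/O technology, which is designed from the ground up with performance, acquisition cost, flexibility and scalability in mind.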

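For more information on converged networking, contact an HPE field expert to discuss your requirements. Use the HPE Contact link to reach them, and visit the HPE microsite at www.marvell.com/hpe for related information.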

-

August 03, 2018

Building an Infrastructure Powerhouse: Marvell and Cavium Join Forces!

By Todd Owens, Field Marketing Director, Marvell

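Marvell completed its acquisition of Cavium on July 6, 2018, and the integration is proceeding smoothly. Cavium will be fully integrated into Marvell. Together, our shared mission is to develop and deliver semiconductor solutions that process, move, store and secure the world's data faster and more securely. The combination strengthens our position as an infrastructure powerhouse serving customers in cloud/data center, enterprise/campus, service provider, SMB/SOHO, industrial and automotive markets.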

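The first thing to know about our business with HPE is that everything continues as usual. For the I/O and processor technology supplied to HPE, you will work with the same partners after the acquisition as before. Marvell is a leading supplier of storage technology, including very high-speed read channels, high-performance processors and transceivers, which are used in the vast majority of the hard disk drive (HDD) and solid-state drive (SSD) modules in today's HPE ProLiant and HPE Storage products.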

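Industry-leading QLogic® 8/16/32Gb Fibre Channel and FastLinQ® 10/20/25/50Gb Ethernet I/O technologies will continue to provide connectivity for HPE servers and storage solutions. These products remain the intelligent I/O choice for HPE and are known for their outstanding performance, flexibility and reliability.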

Marvell FastLinQ Ethernet and QLogic Fibre Channel I/O Adapter Portfolio

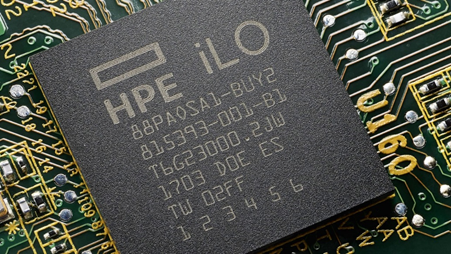

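We will continue to deliver ThunderX2® Arm® processor technology for HPC servers, such as the HPE Apollo 70 for high-performance computing applications. We will also continue to deliver the Ethernet networking technology embedded in HPE servers and storage, as well as the Marvell ASIC technology used in the iLO 5 baseboard management controller (BMC) in all HPE ProLiant and HPE Synergy Gen10 servers.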

iLO 5 for HPE ProLiant Gen10 is deployed on a Marvell SoC

That all sounds great, but what actually changes with the merger?

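The combined company now has a broader technology portfolio that will help HPE deliver outstanding solutions at the edge, in the network and in the data center.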

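Marvell has industry-leading switch technology spanning 1GbE to 100GbE and beyond. This enables us to provide connectivity from the IoT edge to the data center and the cloud. Our Intelligent NIC technology offers compression, encryption and other capabilities that let customers analyze network traffic faster and more intelligently than ever before. Our security solutions and enhanced SoC and processor performance will help our HPE design teams work with HPE to innovate on next-generation servers and solutions.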

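As the integration progresses, you will notice branding changes and changes to where information is located. Our specific product brands will remain, including ThunderX2 for Arm, QLogic for Fibre Channel and FastLinQ for Ethernet, but most products will move from the Cavium brand to the Marvell brand. Our web resources and email addresses will also change. For example, you can now access the HPE microsite at www.marvell.com/hpe, and you will soon be able to reach us at "hpesolutions@marvell.com". The collateral you currently use will continue to be updated. The HPE-specific line cards, the HPE Ethernet Quick Reference Guide, the Fibre Channel Quick Reference Guide and presentation materials have already been updated, and updates will continue over the coming months.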

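In short, we are growing, breaking new ground and achieving great things. Now, as one unified team with outstanding technology, we will be even more focused on helping HPE and its partners and customers succeed. Contact us today to learn more; you can find our field contacts here. We're excited about this new beginning as we continue to strengthen our position as "essential to I/O and infrastructure!"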

-

May 2, 2018

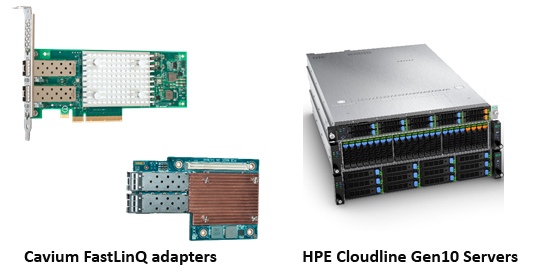

Cavium FastLinQ Ethernet Adapters Available for HPE Cloudline Servers

By Todd Owens, Field Marketing Director, Marvell

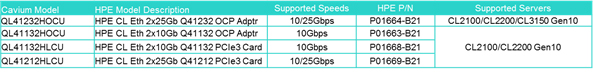

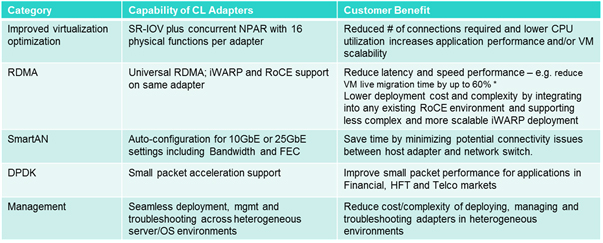

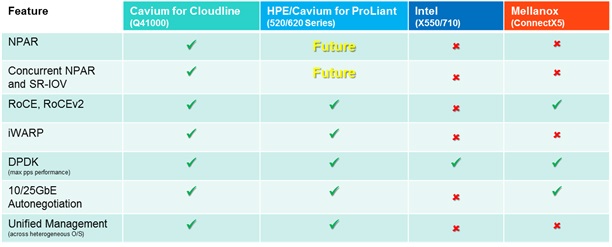

Are you considering deploying HPE Cloudline servers in your hyperscale environment? If so, be aware that HPE now offers select Cavium™ FastLinQ® 10GbE and 10/25GbE adapters as options for HPE Cloudline CL2100, CL2200 and CL3150 Gen10 servers. The adapters supported on the HPE Cloudline servers are shown in Table 1 below.

Faster processors, increased scale, high-performance NVMe and SSD storage, and the need for better performance and lower latency have started to shift some performance bottlenecks from servers and storage to the network itself.

- Lower CPU utilization to free up resources for applications or more VM scalability

- Accelerate processing of small-packet I/O with DPDK

- Save time by automating adapter connectivity between 10GbE and 25GbE

- Reduce latency through direct memory access for I/O transactions to increase performance

- Network isolation and QoS at the VM level to improve VM application performance

- Reduce TCO with heterogeneous management

* Source: Demartek findings

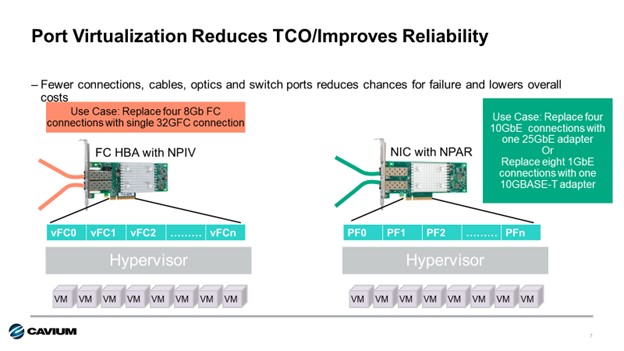

Table 2: Advanced Features in Cavium FastLinQ Adapters for HPE Cloudline

Network Partitioning (NPAR) virtualizes the physical port into eight virtual functions on the PCIe bus. This makes a dual-port adapter appear to the host O/S as if it were eight individual NICs. Furthermore, the bandwidth of each virtual function can be fine-tuned in increments of 500Mbps, providing full Quality of Service on each connection. SR-IOV is an additional virtualization offload these adapters support that moves management of VM-to-VM traffic from the host hypervisor to the adapter. This frees up CPU resources and reduces VM-to-VM latency.
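The bandwidth rules described for NPAR can be expressed as a small validation step. The sketch below is a hypothetical planning helper, not a Cavium utility: it checks that a plan for one port uses at most eight partitions (the figure given above), that each partition's reserved bandwidth is a positive multiple of 500 Mbps, and that the reservations do not exceed the port speed. How the adapter actually enforces minimum versus maximum bandwidth is configured through Cavium's own tools and is not modeled here.

```python
STEP_MBPS = 500  # allocation granularity described above


def validate_npar(port_gbps: int, partitions_mbps: list, max_partitions: int = 8) -> None:
    """Raise ValueError if a per-partition bandwidth plan is not allowed.

    partitions_mbps: bandwidth reserved for each partition on one physical port.
    """
    if not 1 <= len(partitions_mbps) <= max_partitions:
        raise ValueError(f"expected 1..{max_partitions} partitions, got {len(partitions_mbps)}")
    for bw in partitions_mbps:
        if bw <= 0 or bw % STEP_MBPS != 0:
            raise ValueError(f"{bw} Mbps is not a positive multiple of {STEP_MBPS} Mbps")
    total = sum(partitions_mbps)
    if total > port_gbps * 1000:
        raise ValueError(f"{total} Mbps reserved exceeds the {port_gbps}GbE port")


# Hypothetical 25GbE port split into five partitions (fully reserved).
validate_npar(25, [10_000, 5_000, 5_000, 2_500, 2_500])
```

Rejecting a bad plan up front is cheaper than discovering an oversubscribed partition after VMs are running on it.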

Remote Direct Memory Access (RDMA) is an offload that routes I/O traffic directly from the adapter to the host memory. This bypasses the O/S kernel and can improve performance by reducing latency. The Cavium adapters support what is called Universal RDMA, which is the ability to support both RoCEv2 and iWARP protocols concurrently. This provides network administrators more flexibility and choice for low latency solutions built with HPE Cloudline servers.

SmartAN is a Cavium technology available on the 10/25GbE adapters that addresses bandwidth matching and the need for Forward Error Correction (FEC) when switching between 10GbE and 25GbE connections. For 25GbE connections, either Reed-Solomon FEC (RS-FEC) or Fire Code FEC (FC-FEC) is required to correct the bit errors that occur at higher bandwidths. For the details behind SmartAN technology, refer to the Marvell technology brief.
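The decision SmartAN automates can be illustrated with a simplified model: find the weakest FEC mode that both the cable and the link partner accept. The sketch below is our own illustration, not how SmartAN is implemented. The per-medium minimums follow a common reading of IEEE 802.3by (CA-25G-N direct-attach cables can run without FEC, CA-25G-S needs at least FC-FEC, and CA-25G-L cables and 25GBASE-SR optics need RS-FEC), and 10GbE links are simply treated as running without FEC.

```python
from enum import IntEnum
from typing import Optional


class Fec(IntEnum):
    """FEC modes, ordered from weakest to strongest correction."""
    NONE = 0
    FC_FEC = 1  # Fire Code FEC, also called BASE-R FEC (IEEE 802.3 Clause 74)
    RS_FEC = 2  # Reed-Solomon FEC (IEEE 802.3 Clause 108)


# Minimum FEC per 25GbE medium (assumed reading of IEEE 802.3by).
MIN_FEC_25G = {
    "CA-25G-N": Fec.NONE,      # DAC class that can run without FEC
    "CA-25G-S": Fec.FC_FEC,    # DAC class that needs at least FC-FEC
    "CA-25G-L": Fec.RS_FEC,    # DAC class that needs RS-FEC
    "25GBASE-SR": Fec.RS_FEC,  # multimode fiber optics
}


def pick_fec(speed_gbps: int, medium: str, partner_modes: set) -> Optional[Fec]:
    """Pick the weakest FEC mode both the medium and the link partner accept.

    Returns None when there is no common mode, i.e. the link would stay down.
    """
    if speed_gbps == 10:
        required = Fec.NONE  # simplification: 10GbE modeled as FEC-free
    elif speed_gbps == 25:
        required = MIN_FEC_25G[medium]
    else:
        raise ValueError("this sketch only models 10GbE and 25GbE")
    candidates = [mode for mode in partner_modes if mode >= required]
    return min(candidates) if candidates else None
```

For example, `pick_fec(25, "CA-25G-S", {Fec.FC_FEC, Fec.RS_FEC})` selects FC-FEC, while a CA-25G-L cable facing a switch port that only offers FC-FEC yields `None`, the mismatch case that manual configuration tends to get wrong.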

For simplified management, Cavium provides a suite of utilities for configuring and monitoring the adapters across all the popular O/S environments, including Microsoft Windows Server, VMware and Linux. Cavium's unified management suite includes the QCC GUI, a CLI and vCenter plug-ins, as well as PowerShell cmdlets for scripting configuration commands across multiple servers. Cavium's unified management utilities can be downloaded from www.cavium.com.

-

February 20, 2018

If You're Not Using Intelligent I/O Adapters, You Should Be!

By Todd Owens, Field Marketing Director, Marvell

Like a kid in a candy store, choose I/O wisely.

But with so many choices, the decision was often confusing. With time running out, you’d usually just grab the name-brand candy you were familiar with. But what were you missing out on?

There are lots of choices and it takes time to understand all the differences. As a result, system architects in many cases just fall back to the legacy name-brand adapter they have become familiar with. Is this the best option for their client though? Not always.

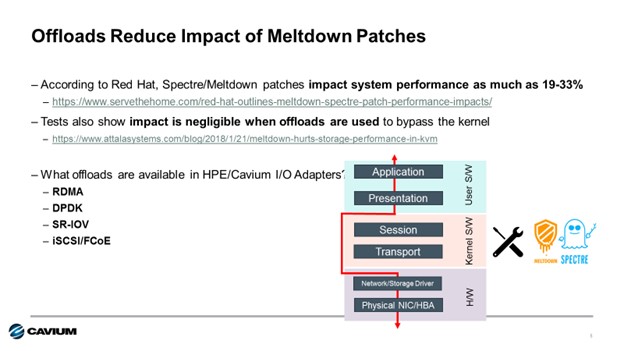

These adapters utilize a variety of offload technology and other capabilities to take on tasks associated with I/O processing that are typically done in software by the CPU when using a basic “standard” Ethernet adapter. Intelligent offloads include things like SR-IOV, RDMA, iSCSI, FCoE or DPDK. Each of these offloads the work to the adapter and, in many cases, bypasses the O/S kernel, speeding up I/O transactions and increasing performance.

Another reason is to mitigate the performance impact of the Spectre and Meltdown fixes now required for x86 server processors. The side-channel vulnerabilities known as Spectre and Meltdown required kernel patches, and these patches can significantly reduce CPU performance. For example, Red Hat reported the impact could be as much as a 19% performance degradation. That's a big performance hit.
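Before estimating how much CPU headroom offloads might recover, it helps to know which side-channel mitigations a Linux host actually has active. Recent Linux kernels report this in one file per vulnerability under `/sys/devices/system/cpu/vulnerabilities` (for example `meltdown` and `spectre_v2`), with contents such as "Not affected", "Mitigation: PTI" or "Vulnerable". The helpers below are a minimal, hypothetical sketch that reads that directory; the directory argument exists only so the code can be exercised elsewhere.

```python
from pathlib import Path

VULN_DIR = "/sys/devices/system/cpu/vulnerabilities"


def mitigation_status(vuln_dir: str = VULN_DIR) -> dict:
    """Map each vulnerability the kernel reports to its status string."""
    root = Path(vuln_dir)
    return {p.name: p.read_text().strip() for p in sorted(root.iterdir()) if p.is_file()}


def unmitigated(status: dict) -> list:
    """Names of vulnerabilities the kernel reports as still exposed."""
    return [name for name, text in status.items() if text.startswith("Vulnerable")]
```

On a Linux host, `for name, text in mitigation_status().items(): print(name, text)` lists every entry; any line starting with "Mitigation" marks a fix that may be costing CPU cycles.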

Some intelligent I/O adapters have port virtualization capabilities. Cavium Fibre Channel HBAs implement N_Port ID Virtualization, or NPIV, to allow a single Fibre Channel port to appear as multiple virtual Fibre Channel adapters to the hypervisor. For Cavium FastLinQ Ethernet adapters, Network Partitioning, or NPAR, provides a similar capability for Ethernet connections. Up to eight independent connections per port can be presented to the host O/S, making a single dual-port adapter look like 16 NICs to the operating system. Each virtual connection can be given specific bandwidth and priority settings, providing full quality of service per connection.

At HPE, there are more than fifty 10GbE to 100GbE Ethernet adapters to choose from across the HPE ProLiant, Apollo, BladeSystem and HPE Synergy server portfolios. That's a lot of documentation to read and compare. Cavium is proud to supply eighteen of these Ethernet adapters, and we've created a handy quick reference guide that shows which offloads and virtualization features each adapter supports. View the complete Cavium HPE Ethernet Adapter Quick Reference guide here.

For Fibre Channel HBAs, there are fewer choices (only nineteen), but we make a quick reference document available for our HBA offerings at HPE as well. You can view the Fibre Channel HBA Quick Reference here.
