Co-Packaged Copper Extending Its Reach Inside Scale-Up Networks

Power and space are two of the most critical resources in building AI infrastructure. That’s why Marvell is working with cabling partners and other industry experts to build a framework that enables data center operators to integrate co-packaged copper (CPC) interconnects into scale-up networks.

Unlike traditional printed circuit board (PCB) traces, CPCs aren’t embedded in circuit boards. Instead, CPCs consist of discrete ribbons or bundles of twinax cable that run alongside the board. Taking the connection out of the board extends the reach of copper connections without additional components such as equalizers or amplifiers. It also reduces interference, improves signal integrity, and lowers the power budget of AI networks.

Being completely passive, CPCs can’t match the reach of active electrical cables (AECs) or optical transceivers. They do, however, extend farther than traditional direct attach copper (DAC) cables, making them well suited to XPU-to-XPU connections within a tray or to connecting XPUs in a tray to the backplane. Typical 800G CPC connections between processors within the same tray span a few hundred millimeters, while XPU-to-backplane connections can reach 1.5 meters. Looking ahead, 1.6T CPCs based around 200G lanes are expected within the next two years, followed by 3.2T solutions.

While the vision is straightforward to describe, realizing it involves painstaking engineering and cooperation across different ecosystems. Marvell has been cultivating partnerships to ensure a smooth transition to CPCs and to create an environment where the technology can evolve and scale rapidly.

Innovating Through Collaboration

The collaboration with thermal management experts Mikros Technologies, a Jabil company, exemplifies this approach. Founded in 1991, the company pioneered groundbreaking single-phase and two-phase liquid cooling solutions for space station electronics and cabin temperature control for NASA’s Lyndon B. Johnson Space Center. Today, Mikros Technologies offers the highest level of heat control, enabling higher power densities and reduced energy consumption across complex systems.

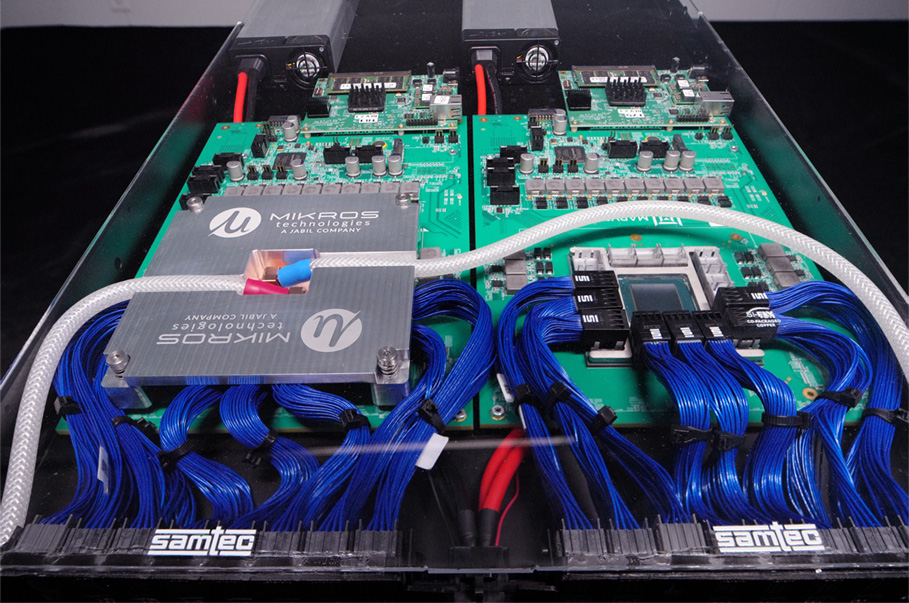

This Marvell board has two XPUs connected by Si-Fly® co-packaged copper cable arrays from Samtec (blue) and a custom liquid-cooled solution from Mikros Technologies (on the left).

The board photographed above has 14 CPC ports that together deliver 89.6Tbps of bandwidth. To keep the system cool, the Mikros Technologies cold plate on the left features a low-profile design that preserves a 1U system height. This solution is optimized for higher inlet temperatures, enabling greater compute power in a smaller footprint while reducing pumping and chilling power usage and costs. See an overview of the CPC system in the first section of the short video below:

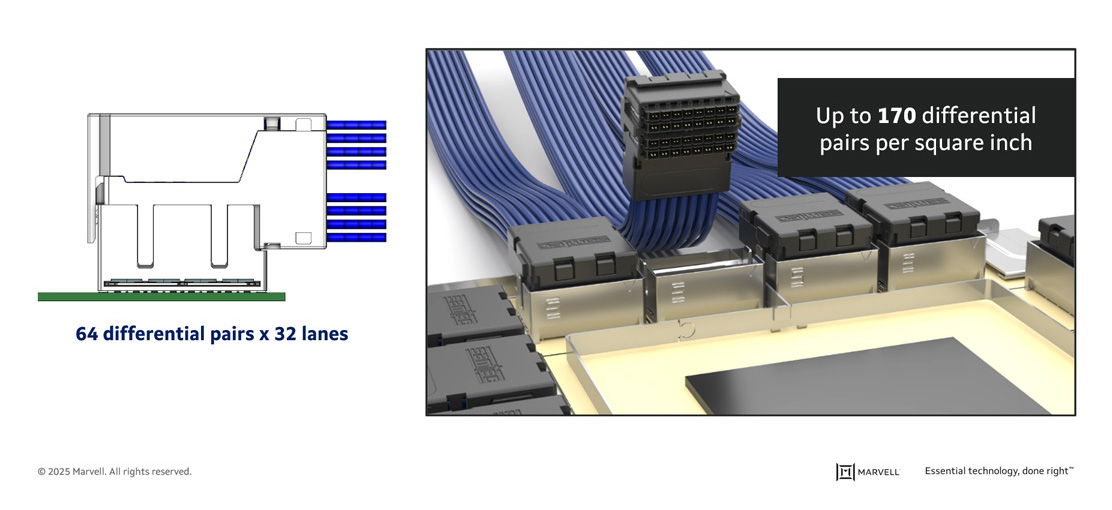

Another collaborator is Samtec. The company’s Flyover® cable technology can help extend signal reach and density to achieve next-gen speeds by routing signals over ultra-low skew twinax cable rather than through lossy PCB. Serving a wide range of industries, Samtec also offers high-speed board-to-board solutions, micro/rugged interconnects, and optical and RF technologies, and complements these products with engineering support and custom solutions. In the photographed display, each of the 14 ports connects to 32 lanes operating at 200 Gbps, delivering 6.4Tbps of bandwidth per port. The high-density connectors from Samtec provide up to 170 differential pin pairs per square inch, enabling exceptional throughput.
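The per-port and per-board bandwidth figures follow directly from the lane counts quoted above. The short sketch below reproduces that arithmetic; the function and constant names are illustrative only and are not taken from any Marvell or Samtec tooling.

```python
# Figures quoted in the text; names are illustrative, not a vendor API.
PORTS = 14
LANES_PER_PORT = 32
LANE_RATE_GBPS = 200


def port_bandwidth_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Aggregate bandwidth of one port, in Gbps."""
    return lanes * lane_rate_gbps


def board_bandwidth_gbps(ports: int, lanes: int, lane_rate_gbps: int) -> int:
    """Aggregate bandwidth of all ports on the board, in Gbps."""
    return ports * port_bandwidth_gbps(lanes, lane_rate_gbps)


per_port_gbps = port_bandwidth_gbps(LANES_PER_PORT, LANE_RATE_GBPS)
board_gbps = board_bandwidth_gbps(PORTS, LANES_PER_PORT, LANE_RATE_GBPS)

# Convert to Tbps only for display, so the integer math above stays exact.
print(f"{per_port_gbps / 1000:.1f} Tbps per port")
print(f"{board_gbps / 1000:.1f} Tbps per board")
```

Keeping the calculation in integer Gbps avoids floating-point rounding when the per-port figure is multiplied across ports.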

The modular design simplifies assembly, configuration, and replacement, and ensures a seamless connection to the backplane.

The TCO and ROI Benefits

CPCs optimize data center economics by reducing both power consumption and PCB costs, delivering efficiency without compromising performance. While active copper cables and similar devices consume only a few watts of power, the cumulative impact across thousands of short connections, typically 1.5 meters or less, can be significant.

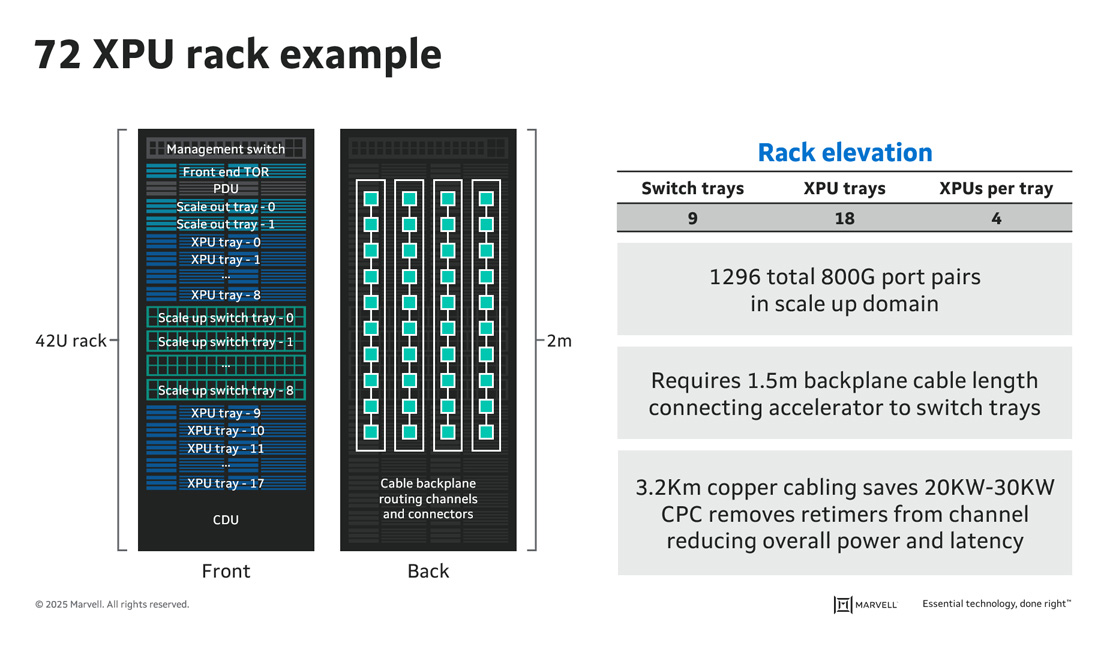

For example, a standard 72-processor rack may require up to 1,300 scale-up connections between pairs of 800Gbps ports, totaling more than three kilometers of cable end-to-end. These short links generally eliminate the need for retimers, which are essential for longer connections, resulting in an additional 15–20% reduction in rack power. Over hundreds of racks in a data center and thousands across global infrastructures, these factors make CPCs a compelling solution for data center operators seeking cost-effective scalability and improved energy efficiency.

A standard 72-XPU rack contains ~1,300 connections. A complete row, which usually contains ten racks, would have 13,000 connections that would collectively run for 30 kilometers and need 200-300kW. (Marvell estimates from industry averages)
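The rack-to-row scaling in the caption can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted above; the per-connection wattage it prints is a derived value (row power divided by connection count), not a number stated in the source, and the variable names are illustrative.

```python
# Figures quoted in the text (Marvell estimates from industry averages).
CONNECTIONS_PER_RACK = 1_300
CABLE_KM_PER_RACK = 3        # "more than three kilometers", used here as a floor
RACKS_PER_ROW = 10           # "usually contains ten racks"
ROW_POWER_KW = (200, 300)    # quoted power range for a complete row

row_connections = CONNECTIONS_PER_RACK * RACKS_PER_ROW
row_cable_km = CABLE_KM_PER_RACK * RACKS_PER_ROW

# Derived, not quoted: average power per connection implied by the row range.
watts_low, watts_high = (kw * 1000 / row_connections for kw in ROW_POWER_KW)

print(f"{row_connections:,} connections and about {row_cable_km} km of cable per row")
print(f"implied average power per connection: {watts_low:.1f} to {watts_high:.1f} W")
```

Because the rack cable length is given only as a lower bound, the row total should be read as an approximate floor as well.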

CPCs also pave the way for transmit-retimed optical modules (TROs), which use digital signal processors (DSPs) to process signals during transmission. TROs can reduce pluggable power consumption by more than 40%, bringing it down to 9 watts or lower, depending on the module.¹ Moreover, as stated above, combining CPC with liquid cooling can dramatically lower the volumetric space required per tray. That increases compute density, which may give data center operators the ability to improve revenue per rack and maximize return on investment.
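As a rough sanity check on the TRO figures, the two quoted numbers (a reduction of more than 40% and a result of 9 watts or lower) can be combined to estimate the module power before the reduction. The sketch below takes both at their boundary values, which is an assumption; actual figures depend on the module, and the helper name is hypothetical.

```python
def implied_baseline_watts(reduced_watts: float, reduction_pct: float) -> float:
    """Power before a percentage cut, given the power after the cut."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction_pct must be in the range [0, 100)")
    return reduced_watts * 100 / (100 - reduction_pct)


# Boundary values from the text: a 40% reduction ending at 9 W.
REDUCED_WATTS = 9
REDUCTION_PCT = 40

baseline_watts = implied_baseline_watts(REDUCED_WATTS, REDUCTION_PCT)
saving_watts = baseline_watts - REDUCED_WATTS

print(f"implied baseline: {baseline_watts:.1f} W per module")
print(f"implied saving:   {saving_watts:.1f} W per module")
```

Under these assumptions the baseline works out to about 15 W per module, so each module would save roughly 6 W.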

The Evolution of Interconnect

CPCs, AECs and TROs represent a rapid evolution of interconnect technologies designed to meet the escalating performance demands of modern data centers. In the past, a few classes of cables were sufficient to support most workloads. Today, however, the growing diversity of applications and environments demands a broader portfolio of cables. This expanded range gives organizations the flexibility to optimize their infrastructure for specific use cases. While these new cable classes may overlap in functionality, they primarily complement each other, enabling tailored solutions for modern performance needs.

Other new product categories in the interconnect ecosystem that have emerged in recent years include:

- Active Copper Cables (ACCs), which include an equalizer at the terminal ends of a copper cable for connecting trays within a rack and are complementary to CPCs.

- DSPs with integrated TIAs and drivers and DSPs with integrated drivers and separate TIAs for reducing power over optical interconnects in select use cases.

- Coherent lite DSPs, which are mid-range DSPs for connecting data center campuses between 2 and 20km apart. In the 3.2T era of networking, coherent lite DSPs are expected to move inside of data centers.

- Long-range coherent DSPs and modules with probabilistic constellation shaping for continent-scale connections (1000km and beyond) between data centers.

Expect more developments to come. As data centers scale and operators refine their ability to optimize infrastructure for training, inference, edge deployment and other use cases, the demand for diverse classes of interconnects will accelerate.

1. Marvell, March 2024 and Marvell estimates

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.
