Archive for the 'AI' Category

-

January 25, 2024

How PCIe Interconnect is Critical for the Emerging AI Era

By Annie Liao, Product Management Director, Connectivity, Marvell

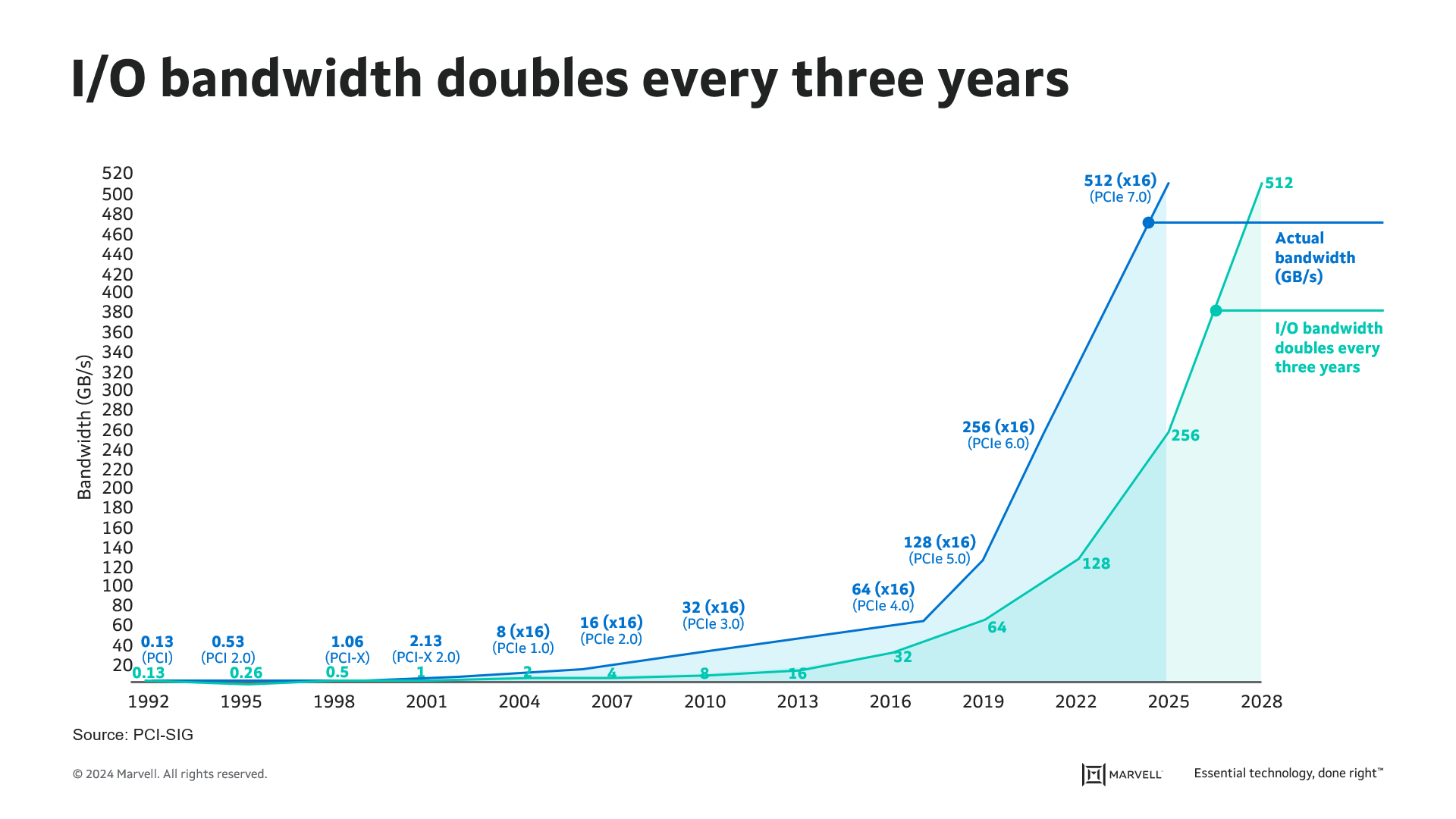

PCIe has historically been used as a protocol for communication between the CPU and computer subsystems. Its speed has increased steadily since its debut in 2003 (PCI Express), and after 20 years of PCIe development we are currently at PCIe Gen 5, with I/O bandwidth of 32 Gbps per lane. There are many factors driving the PCIe speed increase. The most prominent ones are artificial intelligence (AI) and machine learning (ML). In order for CPUs and AI accelerators/GPUs to work effectively with each other on larger training models, the communication bandwidth of the PCIe-based interconnects between them needs to scale to keep up with the exponentially increasing size of the parameters and data sets used in AI models. Although the number of PCIe lanes supported increases with each generation, the physical constraints of the package beachfront and PCB routing limit the maximum number of lanes in a system. This leaves an I/O speed increase as the only way to push more data transactions per second. The compute interconnect bandwidth demand fueled by AI and ML is driving a faster transition to the next generation of PCIe, which is PCIe Gen 6.
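To make the lane-count versus lane-speed trade-off concrete, here is a minimal Python sketch. The per-lane raw rates are the published PCIe signaling rates; the function names and the list of link widths are illustrative assumptions, and encoding and protocol overhead are deliberately ignored.

```python
# Raw per-lane signaling rate (GT/s) for each PCIe generation.
PCIE_RAW_RATE_GTPS = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0, 5: 32.0, 6: 64.0}

# Commonly deployed link widths (an assumption for this sketch).
LINK_WIDTHS = (1, 2, 4, 8, 16)


def raw_link_gbps(gen: int, lanes: int) -> float:
    """Raw one-direction link rate in Gbps, ignoring encoding and protocol overhead."""
    return PCIE_RAW_RATE_GTPS[gen] * lanes


def lanes_needed(target_gbps: float, gen: int) -> int:
    """Smallest link width whose raw rate meets the target at a given generation."""
    for width in LINK_WIDTHS:
        if raw_link_gbps(gen, width) >= target_gbps:
            return width
    raise ValueError("target exceeds a x16 link at this generation")


if __name__ == "__main__":
    for gen in (4, 5, 6):
        print(f"Gen {gen} x16: {raw_link_gbps(gen, 16):.0f} Gbps raw per direction")
```

Under these assumptions, a Gen 6 x8 link carries the same raw bandwidth as a Gen 5 x16 link, which is why raising the per-lane speed relieves pressure on package beachfront and PCB routing.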

PCIe has used 2-level Non-Return-to-Zero (NRZ) modulation since its inception. Speed increases up to Gen 5 were achieved by doubling the I/O signaling rate. For Gen 6, PCI-SIG decided to adopt Pulse-Amplitude Modulation 4 (PAM4), in which each 4-level symbol encodes 2 bits of data (00, 01, 10, 11). The reduced margin resulting from the transition from 2-level to 4-level signaling has also necessitated the use of Forward Error Correction (FEC) protection, a first for PCIe links. With the adoption of PAM4 signaling and FEC, Gen 6 marks an inflection point for PCIe from both signaling and protocol layer perspectives.
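The difference between NRZ and PAM4 can be sketched in a few lines of Python. This is an illustration only: the Gray-coded bit-pair-to-level mapping is an assumption chosen for the example, and the margin figure is the idealized geometric penalty from splitting a fixed signal swing into three eyes, not a measured link budget.

```python
import math

# Bit pair -> amplitude level. Gray coding is assumed here for illustration,
# so adjacent levels differ by only one bit.
PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits):
    """Map a flat sequence of bits to PAM4 symbols, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int) -> float:
    """Symbol rate required to carry a given bit rate."""
    return bit_rate_gbps / bits_per_symbol


def eye_height_penalty_db(levels: int) -> float:
    """Idealized eye-height loss versus NRZ when a fixed swing is split into levels - 1 eyes."""
    return 20 * math.log10(levels - 1)


if __name__ == "__main__":
    print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))
    print(symbol_rate_gbaud(64, 2), "GBaud for 64 Gbps with PAM4")
    print(round(eye_height_penalty_db(4), 2), "dB idealized margin penalty")
```

PAM4 doubles the bit rate without raising the symbol rate, but each of the three eyes is only one third of the NRZ eye height. That idealized loss of roughly 9.5 dB is the reduced margin that motivates FEC.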

In addition to AI/ML, disaggregation of memory and storage is an emerging trend in compute applications that has a significant impact on the applications of PCIe-based interconnects. PCIe has historically been adopted for on-board and in-chassis interconnects. Attaching more front-facing NVMe SSDs is one common example of a PCIe interconnect. With the increasing trend toward flexible resource allocation, and the advancement of CXL technology, the server industry is now moving toward disaggregated and composable infrastructure. In this disaggregated architecture, the PCIe endpoints are located in different chassis from the PCIe root complex, requiring the PCIe link to travel out of the system chassis. This is typically achieved through direct attach cables (DACs) that can range up to 3-5 m.

-

October 19, 2023

A Closer Look at Marvell Optical Technology and Innovation in the AI Era

By Kristin Hehir, Senior Manager, PR and Marketing, Marvell

The sheer volume of data traffic moving across networks daily is mind-boggling almost any way you look at it. During the past decade, global internet traffic grew by approximately 20x, according to the International Energy Agency. One contributing factor to this growth is the popularity of mobile devices and applications: smartphone users spend an average of 5 hours a day, or nearly 1/3 of their time awake, on their devices, up from three hours just a few years ago. The result is incredible amounts of data in the cloud that need to be processed and moved. Around 70% of data traffic is east-west traffic, or the data traffic inside data centers. Generative AI, and the exponential growth in the size of data sets needed to feed AI, will invariably continue to push the curve upward.

Yet, for more than a decade, total power consumption has stayed relatively flat thanks to innovations in storage, processing, networking and optical technology for data infrastructure. The debut of PAM4 digital signal processors (DSPs) for accelerating traffic inside data centers, and of coherent DSPs for pluggable modules, has played a large, but often quiet, role in paving the way for growth while reducing cost and power per bit.

Marvell at ECOC 2023

At Marvell, we’ve been gratified to see these technologies get more attention. At the recent European Conference on Optical Communication, Dr. Loi Nguyen, EVP and GM of Optical at Marvell, talked with Lightwave editor in chief, Sean Buckley, on how Marvell 800 Gbps and 1.6 Tbps technologies will enable AI to scale.

-

September 05, 2023

800G: An Inflection Point for Optical Networks

By Samuel Liu, Senior Director, Product Line Management, Marvell

Digital technology has what you could call a real estate problem. Hyperscale data centers now regularly exceed 100,000 square feet in size. Cloud service providers plan to build 50 to 100 edge data centers a year, and distributed applications like ChatGPT are further fueling growth in data traffic between facilities. This explosive surge in traffic also means telecommunications carriers need to upgrade their wired and wireless networks, a complex and costly undertaking that will involve new equipment deployment in cities all over the world.

Weaving all of these geographically dispersed facilities into a fast, efficient, scalable and economical infrastructure is now one of the dominant issues for our industry.

Pluggable modules based on coherent digital signal processors (CDSPs) debuted in the last decade to replace transponders and other equipment used to generate DWDM-compatible optical signals. These early modules, with their large form factors, didn’t offer the same performance as the incumbent solutions, did not support the required high-density data transmission, and could only be deployed in limited use cases. Over time, advances in technology optimized the performance of pluggable modules, and CDSP speeds grew from 100 to 200 and 400 Gbps. Continued innovation, and the development of an open ecosystem, helped expand the potential applications.

-

June 27, 2023

Scaling AI Infrastructure with High-Speed Optical Connectivity

By Suhas Nayak, Senior Director of Solutions Marketing, Marvell

In the world of artificial intelligence (AI), where compute performance often steals the spotlight, there's an unsung hero working tirelessly behind the scenes. It's something that connects the dots and propels AI platforms to new frontiers. Welcome to the realm of optical connectivity, where data transfer becomes lightning-fast and AI's true potential is unleashed. But wait, before you dismiss the idea of optical connectivity as just another technical detail, let's pause and reflect. Think about it: every breakthrough in AI, every mind-bending innovation, is built on the shoulders of data—massive amounts of it. And to keep up with the insatiable appetite of AI workloads, we need more than just raw compute power. We need a seamless, high-speed highway that allows data to flow freely, powering AI platforms to conquer new challenges.

In this post, I’ll explain the importance of optical connectivity, particularly the role of DSP-based optical connectivity, in driving scalable AI platforms in the cloud. So buckle up and get ready to embark on a journey where we unlock the true power of AI together.

-

June 12, 2023

AI and the Structural Shift in Data Infrastructure

By Michael Kanellos, Head of Influencer Relations, Marvell

AI’s growth is unprecedented from any angle you look at it. The size of large training models is growing 10x per year. ChatGPT’s 173 million plus users are turning to the website an estimated 60 million times a day (compared to zero the year before). And daily, people are coming up with new applications and use cases.

As a result, cloud service providers and others will have to transform their infrastructures in similarly dramatic ways to keep up, said Chris Koopmans, Chief Operations Officer at Marvell, in conversation with Futurum’s Daniel Newman during the Six Five Summit on June 8, 2023.

“We are at the beginning of at least a decade-long trend and a tectonic shift in how data centers are architected and how data centers are built,” he said.

The transformation is already underway. AI training, and a growing percentage of cloud-based inference, have already shifted from running on two-socket servers based around general-purpose processors to systems containing eight or more GPUs or TPUs optimized to solve a smaller set of problems more quickly and efficiently.

-

May 22, 2023

Are We Ready for Large-Scale AI Workloads?

By Noam Mizrahi, Executive Vice President, Chief Technology Officer, Marvell

Originally published in Embedded

ChatGPT has fired the world’s imagination about AI. The chatbot can write essays, compose music, and even converse in different languages. If you’ve read any ChatGPT poetry, you can see it doesn’t pass the Turing Test yet, but it’s a huge leap forward from what even experts expected from AI just three months ago. Over one million people became users in the first five days, shattering records for technology adoption.

The groundswell also strengthens arguments that AI will have an outsized impact on how we live—with some predicting AI will contribute significantly to global GDP by 2030 by fine-tuning manufacturing, retail, healthcare, financial systems, security, and other daily processes.

But the sudden success also shines a light on AI’s most urgent problem: our computing infrastructure isn’t built to handle the workloads AI will throw at it. The size of AI networks grew by 10x per year over the last 5 years. By 2027, one in five Ethernet switch ports in data centers will be dedicated to AI, ML and accelerated computing.

-

March 10, 2023

Introducing Nova, a 1.6T PAM4 DSP Optimized for High-Performance Fabrics in Next-Generation AI/ML Systems

By Kevin Koski, Product Marketing Director, Marvell

Last week, Marvell introduced Nova™, its latest, fourth-generation PAM4 DSP for optical modules. It features breakthrough 200G per lambda optical bandwidth, which enables the module ecosystem to bring 1.6 Tbps pluggable modules to market. You can read more about it in the press release and the product brief.
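As a quick check on how 200G per lambda relates to a 1.6 Tbps module, the lane arithmetic can be sketched as follows. The function name is hypothetical, and the sketch assumes the module rate is simply the per-lambda rate times the number of optical lanes.

```python
def optical_lanes(module_gbps: int, per_lambda_gbps: int) -> int:
    """Number of wavelengths (lanes) needed to reach a module rate, assuming equal lanes."""
    if module_gbps % per_lambda_gbps:
        raise ValueError("module rate is not a whole multiple of the per-lambda rate")
    return module_gbps // per_lambda_gbps


if __name__ == "__main__":
    print(optical_lanes(1600, 200), "lanes at 200G per lambda for a 1.6 Tbps module")
    print(optical_lanes(1600, 100), "lanes at 100G per lambda for the same rate")
```

Under that assumption, doubling the per-lambda rate halves the number of optical lanes needed for a given module speed.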

In this post, I’ll explain why the optical modules enabled by Nova are the optimal solution to high-bandwidth connectivity in artificial intelligence and machine learning systems.

Let’s begin with a look into the architecture of supercomputers, also known as high-performance computing (HPC).

Historically, HPC has been realized using large-scale computer clusters interconnected by high-speed, low-latency communications networks to act as a single computer. Such systems are found in national or university laboratories and are used to simulate complex physics and chemistry to aid groundbreaking research in areas such as nuclear fusion, climate modeling and drug discovery. They consume megawatts of power.

The introduction of graphics processing units (GPUs) has provided a more efficient way to complete specific types of computationally intensive workloads. GPUs allow for the use of massive, multi-core parallel processing, while central processing units (CPUs) execute serial processes within each core. GPUs have both improved HPC performance for scientific research purposes and enabled a machine learning (ML) renaissance of sorts. With these advances, artificial intelligence (AI) is being pursued in earnest.

-

March 02, 2023

Introducing the 51.2T Teralynx 10, the Industry’s Lowest Latency Programmable Switch

By Amit Sanyal, Senior Director, Product Marketing, Marvell

If you’re one of the 100+ million monthly users of ChatGPT—or have dabbled with Google’s Bard or Microsoft’s Bing AI—you’re proof that AI has entered the mainstream consumer market.

And what’s entered the consumer mass-market will inevitably make its way to the enterprise, an even larger market for AI. There are hundreds of generative AI startups racing to make it so. And those responsible for making these AI tools accessible—cloud data center operators—are investing heavily to keep up with current and anticipated demand.

Of course, it’s not just the latest AI language models driving the coming infrastructure upgrade cycle. Operators will pay equal attention to improving general purpose cloud infrastructure too, as well as take steps to further automate and simplify operations.

To help operators meet their scaling and efficiency objectives, today Marvell introduces Teralynx® 10, a 51.2 Tbps programmable 5nm monolithic switch chip designed to address the operator bandwidth explosion while meeting stringent power- and cost-per-bit requirements. It’s intended for leaf and spine applications in next-generation data center networks, as well as AI/ML and high-performance computing (HPC) fabrics.

A single Teralynx 10 replaces twelve chips of the 12.8 Tbps generation, the last generation to see widespread deployment. The resulting savings are impressive: an 80% power reduction for equivalent capacity.
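One way to arrive at a figure of twelve is to count the chips needed to build 51.2 Tbps of non-blocking capacity from 12.8 Tbps devices in a two-tier leaf-spine fabric. The post does not state how the number was derived, so the topology, the even split of each leaf between downlinks and uplinks, and the function name below are all assumptions made for illustration. Port radix limits are ignored.

```python
def chips_for_two_tier_fabric(target_gbps: int, chip_gbps: int) -> int:
    """Chips in a non-blocking two-tier leaf-spine fabric (assumed topology).

    Each leaf dedicates half its capacity to external ports and half to
    uplinks; spines must terminate the sum of all leaf uplinks.
    """
    leaf_down_gbps = chip_gbps // 2
    leaves = -(-target_gbps // leaf_down_gbps)        # ceiling division
    total_uplink_gbps = leaves * leaf_down_gbps
    spines = -(-total_uplink_gbps // chip_gbps)       # ceiling division
    return leaves + spines


if __name__ == "__main__":
    # 51.2 Tbps of external capacity built from 12.8 Tbps chips.
    print(chips_for_two_tier_fabric(51_200, 12_800))
```

With these assumptions the fabric needs eight leaf chips and four spine chips, which matches the twelve-to-one replacement ratio quoted above. The sketch says nothing about power, which depends on the devices themselves.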

-

February 14, 2023

Three Things Next-Generation Data Center Networking Must Do

By Amit Sanyal, Senior Director, Product Marketing, Marvell

Data centers are arguably the most important buildings in the world. Virtually everything we do—from ordinary business transactions to keeping in touch with relatives and friends—is accomplished, or at least assisted, by racks of equipment in large, low-slung facilities.

And whether they know it or not, your family and friends are causing data center operators to spend more money. But it’s for a good cause: it allows your family and friends (and you) to continue their voracious consumption, purchasing and sharing of every kind of content—via the cloud.

Of course, it’s not only the personal habits of your family and friends that are causing operators to spend. The enterprise is equally responsible. They’re collecting data like never before, storing it in data lakes and applying analytics and machine learning tools—both to improve user experience, via recommendations, for example, and to process and analyze that data for economic gain. This is on top of the relentless, expanding adoption of cloud services.

-

December 05, 2022

Leading Lights Award Recognizes the Deneb CDSP as a Leader

By Johnny Truong, Senior Manager, Public Relations, Marvell

At this week’s Leading Lights Awards Ceremony, hosted by Light Reading, Editor-in-Chief Phil Harvey announced that the Marvell® Deneb™ Coherent Digital Signal Processor (CDSP) is the winner of the Most Innovative Service Provider Transport Solution category. This recognition is awarded to the optical systems vendor or optical components vendor providing the most innovative optical transport solution for service provider customers.

Driving the industry's largest standards-based ecosystem, the Marvell Deneb CDSP enables disaggregation, which is critical for carriers to lower their CAPEX and OPEX as they increase network capacity. This recognition underscores Marvell’s success in bringing leading-edge density and performance optimization advantages to carrier networks.

Now in its 18th year, Leading Lights is Light Reading’s flagship awards program, which recognizes top companies and executives for their outstanding achievements in next-generation communications technology, applications, services, strategies, and innovations.

Visit the Light Reading blog for a full list of categories, finalists and winners.

-

October 12, 2022

The Evolution of Cloud Storage and Memory

By Gary Kotzur, CTO, Storage Products Group, Marvell and Jon Haswell, SVP, Firmware, Marvell

The nature of storage is changing much more rapidly than it ever has historically. This evolution is being driven by expanding amounts of enterprise data and the inexorable need for greater flexibility and scale to meet ever-higher performance demands.

If you look back 10 or 20 years, there used to be a one-size-fits-all approach to storage. Today, however, there is the public cloud, the private cloud, and the hybrid cloud, which is a combination of both. All these clouds have different storage and infrastructure requirements. What’s more, the data center infrastructure of every hyperscaler and cloud provider is architecturally different and is moving toward a more composable architecture. All of this is driving the need for highly customized cloud storage solutions, as well as for a comparable solution in the memory domain.

Recent Posts

- HashiCorp and Marvell: Teaming Up for Multi-Cloud Security Management

- Cryptomathic and Marvell: Enhancing Crypto Agility for the Cloud

- The Big, Hidden Problem with Encryption and How to Solve It

- Self-Destructing Encryption Keys and Static and Dynamic Entropy in One Chip

- Dual Use IP: Shortening Government Development Cycles from Two Years to Six Months