Posts Tagged 'Fibre Channel'

-

November 05, 2023

Fibre Channel: The Preferred Choice for Mission-Critical Shared Storage Connectivity

By Todd Owens, Director, Field Marketing, Marvell

Here at Marvell, we talk frequently to our customers and end users about I/O technology and connectivity. This includes presentations on I/O connectivity at various industry events and delivering training to our OEMs and their channel partners. Often, when discussing the latest innovations in Fibre Channel, audience questions will center around how relevant Fibre Channel (FC) technology is in today’s enterprise data center. This is understandable as there are many in the industry who have been proclaiming the demise of Fibre Channel for several years. However, these claims are often very misguided due to a lack of understanding about the key attributes of FC technology that continue to make it the gold standard for use in mission-critical application environments.

From inception several decades ago, and still today, FC technology is designed to do one thing, and one thing only: provide secure, high-performance, and high-reliability server-to-storage connectivity. While the Fibre Channel industry is made up of a select few vendors, the industry has continued to invest and innovate around how FC products are designed and deployed. This isn’t just limited to doubling bandwidth every couple of years but also includes innovations that improve reliability, manageability, and security.

-

June 13, 2023

FC-NVMe Goes Mainstream for HPE Next-Generation Block Storage

By Todd Owens, Field Marketing Director, Marvell

While Fibre Channel (FC) has been around for a couple of decades now, the Fibre Channel industry continues to develop the technology in ways that keep it in the forefront of the data center for shared storage connectivity. Always a reliable technology, continued innovations in performance, security and manageability have made Fibre Channel I/O the go-to connectivity option for business-critical applications that leverage the most advanced shared storage arrays.

A development that highlights the progress and significance of Fibre Channel is Hewlett Packard Enterprise’s (HPE) recent announcement of the latest offering in its Storage as a Service (SaaS) lineup with 32Gb Fibre Channel connectivity. HPE GreenLake for Block Storage MP powered by HPE Alletra Storage MP hardware features a next-generation platform connected to the storage area network (SAN) using either traditional SCSI-based FC or NVMe over FC connectivity. This solution not only provides customers with highly scalable capabilities but also delivers cloud-like management, allowing HPE customers to consume block storage any way they desire: own and manage, outsource management, or consume on demand.

At launch, HPE is providing FC connectivity for this storage system to the host servers and supporting both FC-SCSI and native FC-NVMe. HPE plans to provide additional connectivity options in the future, but the fact that they prioritized FC connectivity speaks volumes about the customer demand for mature, reliable, and low-latency FC technology.

-

October 26, 2022

Tasting Notes for 64G Fibre Channel

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

While age is just a number and so is new speed for Fibre Channel (FC), the number itself is often irrelevant and it’s the maturity that matters, kind of like a bottle of wine! Today as we make a toast to the data center and pop open (announce) the Marvell® QLogic® 2870 Series 64G Fibre Channel HBAs, take a glass and sip into its maturity to find notes of trust and reliability alongside operational simplicity, in-depth visibility, and consistent performance.

Big words on the label? I will let you be the sommelier as you work through your glass and my writings.

-

February 08, 2022

New Advances in Storage Networking: Self-Driving SANs

By Todd Owens, Field Marketing Director, Marvell and Jacqueline Nguyen, Marvell Field Marketing Manager

Storage area network (SAN) administrators know they play a pivotal role in ensuring mission-critical workloads stay up and running. The workloads and applications that run on the infrastructure they manage are key to overall business success for the company.

Like any infrastructure, issues do arise from time to time, and the ability to identify transient links or address SAN congestion quickly and efficiently is paramount. Today, SAN administrators typically rely on proprietary tools and software from the Fibre Channel (FC) switch vendors to monitor the SAN traffic. When SAN performance issues arise, they rely on their years of experience to troubleshoot the issues.

What creates congestion in a SAN anyway?

Refresh cycles for servers and storage are typically shorter and more frequent than those for SAN infrastructure. As a result, servers and storage arrays that run at different speeds end up connected to the same SAN. Legacy servers and storage arrays may connect to the SAN at 16GFC bandwidth while newer servers and storage are connected at 32GFC.

Fibre Channel SANs use buffer credits to manage traffic flow in the SAN: a port can send a frame only while it holds a credit, and it gets that credit back when the receiver frees the corresponding buffer. When a slower device intermixes with faster devices on the SAN, there can be situations where credits are returned more slowly than frames arrive, causing what is called “Slow Drain” congestion. This is a well-known issue in FC SANs that can be time-consuming to troubleshoot and, with newer FC-NVMe arrays, this problem can be magnified. But these days are soon coming to an end with the introduction of what we can refer to as the self-driving SAN.
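To make the slow-drain idea concrete, here is a minimal sketch in Python of credit-based flow control on a single link. It is a toy model, not how any switch or HBA is implemented: the function name `simulate_link`, the tick-based timing, and the credit count of 8 are all assumptions chosen for illustration. It also ignores the fabric-wide effect, where back-pressure from one slow device spreads to unrelated flows.

```python
def simulate_link(credits, receiver_ticks_per_frame, total_ticks):
    """Toy model of buffer-credit flow control on one link (illustrative only).

    The sender tries to transmit one frame per tick but may do so only while
    it holds a credit. The receiver frees one buffer, and thereby returns one
    credit, every `receiver_ticks_per_frame` ticks.
    Returns (frames_sent, stalled_ticks).
    """
    available = credits   # credits currently held by the sender
    buffered = 0          # frames sitting in the receiver's buffers
    frames_sent = 0
    stalled_ticks = 0

    for tick in range(1, total_ticks + 1):
        # Receiver drains a buffer on its own schedule and returns a credit.
        if buffered > 0 and tick % receiver_ticks_per_frame == 0:
            buffered -= 1
            available += 1

        # Sender transmits only if it holds a credit; otherwise it waits.
        if available > 0:
            available -= 1
            buffered += 1
            frames_sent += 1
        else:
            stalled_ticks += 1

    return frames_sent, stalled_ticks


# A receiver that keeps pace with the sender versus one that drains at half speed.
fast = simulate_link(credits=8, receiver_ticks_per_frame=1, total_ticks=1000)
slow = simulate_link(credits=8, receiver_ticks_per_frame=2, total_ticks=1000)
print("matched receiver:", fast)
print("slow receiver:   ", slow)
```

In this model the sender never stalls against a receiver that drains one frame per tick. Against the half-speed receiver, the initial credits absorb the mismatch for a short while and then the sender spends roughly every other tick waiting for a credit.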

-

September 07, 2021

Windows Server 2022 and Marvell QLogic Fibre Channel

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

Recently, Microsoft® announced the general availability of Windows® Server 2022, a release that we geeks refer to by its codename “Iron.” At Marvell we have long worked to integrate our server connectivity solutions into Windows and like to think of the Marvell® QLogic® Fibre Channel (FC) technology as that tiny bit of “carbon” that turns “iron” to “steel”: strong yet flexible and designed to make business applications shine. Let’s dive into the bits and bytes of how the combination of Windows Server 2022 and Marvell QLogic FC makes for great chemistry.

-

August 18, 2020

Get to Know QLogic

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

Marvell® Fibre Channel HBAs are getting a promotion and here is the announcement email -

“I am pleased to announce the promotion of “Mr. QLogic® Fibre Channel” to Senior Transport Officer, Storage Connectivity at Enterprise Datacenters Inc. Mr. QLogic has been an excellent partner and instrumental in optimizing mission-critical enterprise application access to external storage over the past 20 years. When Mr. QLogic first arrived at Enterprise Datacenters, block storage was in disarray and efficiently scaling out performance seemed like an insurmountable challenge. Mr. QLogic quickly established himself as a go-to leader and trusted partner for enabling low-latency access to external storage across disk and flash. Mr. QLogic successfully collaborated with other industry leaders like Brocade and Mr. Cisco MDS to lay the groundwork for a broad set of innovative technologies under the StorFusion™ umbrella. In his new role, Mr. QLogic will further extend the value of StorFusion by bringing awareness of Storage Area Network (SAN) congestion into the server, while taking decisive action to prevent bottlenecks that may degrade mission-critical enterprise application performance.

Please join me in congratulating QLogic on this well-deserved promotion.”

-

August 12, 2020

By Nishant Lodha, Director of Product Marketing – Emerging Technologies, Marvell

-

March 02, 2018

Connecting Shared Storage – iSCSI or Fibre Channel

By Todd Owens, Field Marketing Director, Marvell

One of the questions we get quite often is which protocol is best for connecting servers to shared storage?

What customers need to do is look at the reality of what they need from a shared storage environment and make a decision based on cost, performance and manageability.

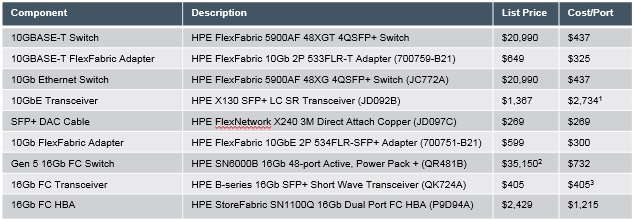

Notes:

1. Optical transceiver needed at both adapter and switch ports for 10GbE networks. Thus cost/port is two times the transceiver cost.
2. FC switch pricing includes full featured management software and licenses.
3. FC Host Bus Adapters (HBAs) ship with transceivers, thus only one additional transceiver is needed for the switch port.

So if we do the math, the cost per port looks like this:

10GbE iSCSI with SFP+ Optics = $437+$2,734+$300 = $3,471

10GbE iSCSI with 3 meter Direct Attach Cable (DAC) = $437 + $269 + $300 = $1,006

16GFC with SFP+ Optics = $773 + $405 + $1,400 = $2,578

Note that in my example I chose a 3 meter cable length, but even if you choose shorter or longer cables (HPE supports cable lengths from 0.65 to 7 meters), DAC is still the lowest-cost connection option. Surprisingly, the cost of the 10GbE optics makes the iSCSI solution with optical connections the most expensive configuration.
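For readers who want to rerun the math with their own prices, the per-port sums can be sketched in a few lines of Python. The dollar figures are the ones quoted in this post. The labels for the three terms (adapter port, optics or cable, switch port) are my reading of the notes rather than something the sums spell out, and the name `cost_per_port` is made up for this sketch.

```python
def cost_per_port(adapter_port, interconnect, switch_port):
    """Sum the three per-port components (labels are an assumed reading of the notes)."""
    return adapter_port + interconnect + switch_port


# Figures in US dollars, taken from the sums quoted in the post.
options = {
    "10GbE iSCSI with SFP+ optics": cost_per_port(437, 2734, 300),
    "10GbE iSCSI with 3 m DAC": cost_per_port(437, 269, 300),
    "16GFC with SFP+ optics": cost_per_port(773, 405, 1400),
}

# List the options from cheapest to most expensive.
for name, cost in sorted(options.items(), key=lambda item: item[1]):
    print(f"{name}: ${cost:,}")

cheapest = min(options, key=options.get)
most_expensive = max(options, key=options.get)
```

Swapping in current list prices for your own adapters, optics, and switches is the quickest way to see whether the ranking still holds in your environment.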

It really comes down to distance and the number of connections required. The DAC cables can only span up to 7 meters or so. That means customers have only limited reach within or across racks. If customers have multiple racks or distance requirements of more than 7 meters, FC becomes the more attractive option, from a cost perspective.

- Latency is an order of magnitude lower for FC compared to iSCSI. Latency of Brocade Gen 5 (16Gb) FC switching (using cut-through switch architecture) is in the 700 nanoseconds range and for 10GbE it is in the range of 5 to 50 microseconds. The impact of latency gets compounded with iSCSI should the user implement 10GBASE-T connections in the iSCSI adapters. This adds another significant hit to the latency equation for iSCSI.

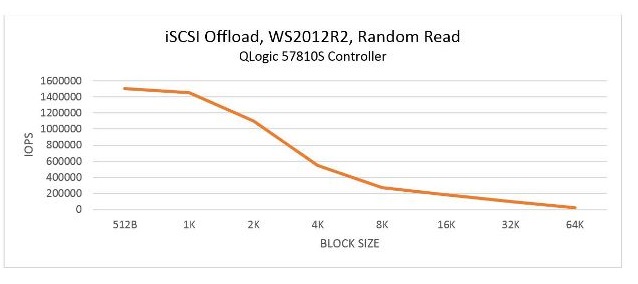

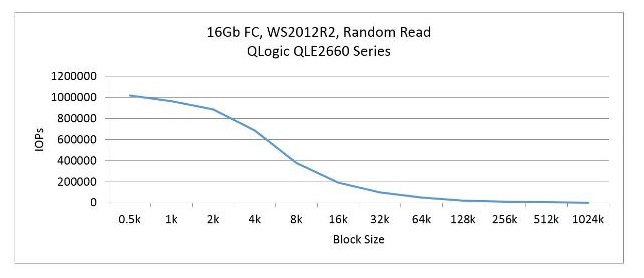

Figure 1: Cavium’s iSCSI Hardware Offload IOPS Performance

Figure 2:

Keep in mind, Ethernet network management hasn’t really changed much. Network administrators create virtual LANs (vLANs) to separate network traffic and reduce congestion. These network administrators have a variety of tools and processes that allow them to monitor network traffic, run diagnostics and make changes on the fly when congestion starts to impact application performance.

On the FC side, companies like Cavium and HPE have made significant improvements on the software side of things to simplify SAN deployment, orchestration and management. Technologies like fabric-assigned port worldwide name (FA-WWN) from Cavium and Brocade enable the SAN administrator to configure the SAN without having HBAs available and allow a failed server to be replaced without having to reconfigure the SAN fabric. Cavium and Brocade have also teamed up to improve the FC SAN diagnostics capability with Gen 5 (16Gb) Fibre Channel fabrics by implementing features such as Brocade ClearLink™ diagnostics, Fibre Channel Ping (FC ping) and Fibre Channel traceroute (FC traceroute), link cable beacon (LCB) technology and more. HPE’s Smart SAN for HPE 3PAR provides the storage administrator the ability to zone the fabric and map the servers and LUNs to an HPE 3PAR StoreServ array from the HPE 3PAR StoreServ management console.

In many enterprise environments, there are typically dozens of network administrators. In those same environments, there may be fewer than a handful of “SAN” administrators. Yes, there are lots of LAN-connected devices that need to be managed and monitored, but so few for SAN-connected devices.

Well, it depends. If application performance is the biggest criterion, it’s hard to beat the combination of bandwidth, IOPS and latency of the 16GFC SAN. If compatibility and commonality with existing infrastructures is a critical requirement, 10GbE iSCSI is a good option (assuming the 10GbE infrastructure exists in the first place). If security is a key concern, FC is the best choice. When was the last time you heard of an FC network being hacked into?
