Archive for the 'Data Center' Category

-

November 05, 2023

Fibre Channel: The Preferred Choice for Mission-Critical Shared Storage Connectivity

By Todd Owens, Director, Field Marketing, Marvell

Here at Marvell, we talk frequently to our customers and end users about I/O technology and connectivity. This includes presentations on I/O connectivity at various industry events and delivering training to our OEMs and their channel partners. Often, when discussing the latest innovations in Fibre Channel, audience questions will center around how relevant Fibre Channel (FC) technology is in today’s enterprise data center. This is understandable as there are many in the industry who have been proclaiming the demise of Fibre Channel for several years. However, these claims are often very misguided due to a lack of understanding about the key attributes of FC technology that continue to make it the gold standard for use in mission-critical application environments.

From inception several decades ago, and still today, FC technology is designed to do one thing, and one thing only: provide secure, high-performance, and high-reliability server-to-storage connectivity. While the Fibre Channel industry is made up of a select few vendors, the industry has continued to invest and innovate around how FC products are designed and deployed. This isn’t just limited to doubling bandwidth every couple of years but also includes innovations that improve reliability, manageability, and security.

-

October 19, 2023

A Closer Look at Marvell Optical Technology and Innovation in the AI Era

By Kristin Hehir, Senior Manager, PR and Marketing, Marvell

The sheer volume of data traffic moving across networks daily is mind-boggling almost any way you look at it. During the past decade, global internet traffic grew by approximately 20x, according to the International Energy Agency. One contributing factor to this growth is the popularity of mobile devices and applications: smartphone users spend an average of 5 hours a day, or nearly 1/3 of their waking hours, on their devices, up from three hours just a few years ago. The result is an incredible amount of data in the cloud that needs to be processed and moved. Around 70% of data traffic is east-west traffic, meaning traffic inside data centers. Generative AI, and the exponential growth in the size of the data sets needed to feed it, will invariably continue to push the curve upward.

Yet, for more than a decade, total power consumption has stayed relatively flat thanks to innovations in storage, processing, networking and optical technology for data infrastructure. The debut of PAM4 digital signal processors (DSPs) for accelerating traffic inside data centers, and of coherent DSPs for pluggable modules, has played a large but often quiet role in paving the way for growth while reducing cost and power per bit.

Marvell at ECOC 2023

At Marvell, we’ve been gratified to see these technologies get more attention. At the recent European Conference on Optical Communication (ECOC), Dr. Loi Nguyen, EVP and GM of Optical at Marvell, spoke with Lightwave editor-in-chief Sean Buckley about how Marvell 800 Gbps and 1.6 Tbps technologies will enable AI to scale.

-

October 18, 2023

Data Centers Get a Makeover

By Dr. Radha Nagarajan, Senior Vice President and Chief Technology Officer, Optical and Cloud Connectivity Group, Marvell

This article was originally published in Data Center Knowledge

People or servers?

Communities around the world are debating this question as they try to balance the plans of service providers and the concerns of residents.

Last year, the Greater London Authority told real estate developers that new housing projects in West London may not be able to go forward until 2035 because data centers have taken all of the excess grid capacity[1]. EirGrid[2] said it won’t accept new data center applications until 2028. Beijing[3] and Amsterdam have placed strict limits on new facilities. Cities in the southwest and elsewhere[4], meanwhile, are increasingly worried about water consumption, as mega-sized data centers can use over 1 million gallons a day[5].

When you add in the additional computing cycles needed for AI and applications like ChatGPT, the conflict becomes even more heated.

On the other hand, we know we can’t live without data centers. Modern society, with its remote work, digital streaming and modern communications, depends on them. Data centers are also one of sustainability’s biggest success stories. Although workloads grew by approximately 10x in the last decade with the rise of SaaS and streaming, total power consumption stayed almost flat at around 1% to 1.5%[6] of worldwide electricity, thanks to technology advances, workload consolidation and new facility designs. Try to name another industry that has increased its output 10x on a relatively fixed energy diet.
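The 10x-with-flat-power claim implies a steep drop in energy per unit of work. A minimal Python sketch of that arithmetic follows; it assumes power stayed exactly flat and workload grew exactly 10x over exactly ten years (the text says "almost flat" and "approximately 10x"), so treat the result as a rough approximation.

```python
def implied_efficiency(growth: float, years: int) -> tuple[float, float]:
    # With flat total power, energy per unit of work falls by the same factor the workload grew.
    energy_per_unit_end = 1.0 / growth
    # Compound annual reduction in energy per unit of work over the period.
    annual_reduction = 1.0 - energy_per_unit_end ** (1.0 / years)
    return energy_per_unit_end, annual_reduction


end, per_year = implied_efficiency(growth=10.0, years=10)
print(f"energy per unit of work: {end:.0%} of the starting value")  # 10%
print(f"average reduction per year: {per_year:.1%}")                # 20.6%
```

In other words, sustaining the claim requires energy per unit of work to fall by roughly a fifth every year for a decade.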

-

June 27, 2023

利用高速光学连接扩展人工智能基础设施

By Suhas Nayak, Senior Director of Solutions Marketing, Marvell

In the world of artificial intelligence (AI), where compute performance often steals the spotlight, there's an unsung hero working tirelessly behind the scenes. It's something that connects the dots and propels AI platforms to new frontiers. Welcome to the realm of optical connectivity, where data transfer becomes lightning-fast and AI's true potential is unleashed. But wait, before you dismiss the idea of optical connectivity as just another technical detail, let's pause and reflect. Think about it: every breakthrough in AI, every mind-bending innovation, is built on the shoulders of data—massive amounts of it. And to keep up with the insatiable appetite of AI workloads, we need more than just raw compute power. We need a seamless, high-speed highway that allows data to flow freely, powering AI platforms to conquer new challenges.

In this post, I’ll explain the importance of optical connectivity, particularly the role of DSP-based optical connectivity, in driving scalable AI platforms in the cloud. So, buckle up, get ready to embark on a journey where we unlock the true power of AI together.

-

June 13, 2023

FC-NVMe Goes Mainstream in HPE’s Next-Generation Block Storage

By Todd Owens, Field Marketing Director, Marvell

While Fibre Channel (FC) has been around for a couple of decades now, the Fibre Channel industry continues to develop the technology in ways that keep it at the forefront of shared storage connectivity in the data center. FC has always been a reliable technology, and continued innovations in performance, security and manageability have made Fibre Channel I/O the go-to connectivity option for business-critical applications that leverage the most advanced shared storage arrays.

A recent development that highlights the progress and significance of Fibre Channel is Hewlett Packard Enterprise’s (HPE) announcement of the latest offering in its Storage as a Service (SaaS) lineup, with 32Gb Fibre Channel connectivity. HPE GreenLake for Block Storage MP, powered by HPE Alletra Storage MP hardware, is a next-generation platform connected to the storage area network (SAN) using either traditional SCSI-based FC or NVMe over FC connectivity. This solution not only provides customers with highly scalable capabilities but also delivers cloud-like management, allowing HPE customers to consume block storage any way they desire: own and manage, outsource management, or consume on demand.

At launch, HPE is providing FC connectivity from this storage system to the host servers, supporting both FC-SCSI and native FC-NVMe. HPE plans to provide additional connectivity options in the future, but the fact that it prioritized FC connectivity speaks volumes about customer demand for mature, reliable and low-latency FC technology.

-

March 10, 2023

Introducing Nova: A 1.6T PAM4 DSP Optimized for High-Performance Fabrics in Next-Generation AI/ML Systems

By Kevin Koski, Product Marketing Director, Marvell

Last week, Marvell introduced Nova™, its latest, fourth generation PAM4 DSP for optical modules. It features breakthrough 200G per lambda optical bandwidth, which enables the module ecosystem to bring to market 1.6 Tbps pluggable modules. You can read more about it in the press release and the product brief.
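The step from "200G per lambda" to "1.6 Tbps modules" is simple lane arithmetic, and PAM4 explains how each lane carries its data. The Python sketch below assumes all lanes run at 200G, ignores FEC and line-coding overhead when computing the symbol rate, and uses the common Gray-coded PAM4 mapping with normalized levels; the helper names are hypothetical and not part of any Marvell product API.

```python
PAM4_BITS_PER_SYMBOL = 2  # four amplitude levels -> log2(4) = 2 bits per symbol

# Gray-coded PAM4: adjacent levels differ in one bit, so a one-level slicing error flips one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def lanes_needed(module_gbps: int, per_lambda_gbps: int) -> int:
    # Ceiling division: optical lanes (lambdas) needed to carry the module rate.
    return -(-module_gbps // per_lambda_gbps)


def nominal_symbol_rate_gbaud(per_lambda_gbps: int) -> float:
    # Payload bit rate divided by bits per symbol (no FEC/coding overhead assumed).
    return per_lambda_gbps / PAM4_BITS_PER_SYMBOL


def pam4_encode(bits: list[int]) -> list[int]:
    # Group bits in pairs (first bit = MSB) and map each pair to an amplitude level.
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


print(lanes_needed(1600, 200))                # 8
print(nominal_symbol_rate_gbaud(200))         # 100.0
print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # [-3, -1, 1, 3]
```

Doubling bits per symbol is why PAM4 can reach 200G per lane without doubling the symbol rate of a two-level (NRZ) signal; the trade-off is a smaller spacing between levels, which the DSP must compensate for.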

In this post, I’ll explain why the optical modules enabled by Nova are the optimal solution to high-bandwidth connectivity in artificial intelligence and machine learning systems.

Let’s begin with a look into the architecture of supercomputers, also known as high-performance computing (HPC).

Historically, HPC has been realized using large-scale computer clusters interconnected by high-speed, low-latency communications networks to act as a single computer. Such systems are found in national or university laboratories and are used to simulate complex physics and chemistry to aid groundbreaking research in areas such as nuclear fusion, climate modeling and drug discovery. They consume megawatts of power.

The introduction of graphics processing units (GPUs) has provided a more efficient way to complete specific types of computationally intensive workloads. GPUs allow for the use of massive, multi-core parallel processing, while central processing units (CPUs) execute serial processes within each core. GPUs have both improved HPC performance for scientific research purposes and enabled a machine learning (ML) renaissance of sorts. With these advances, artificial intelligence (AI) is being pursued in earnest.

-

March 02, 2023

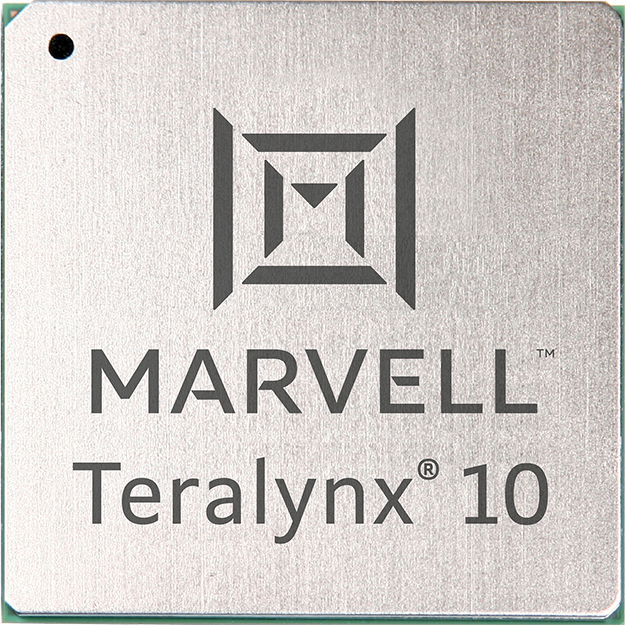

Introducing the 51.2T Teralynx 10, the Industry’s Lowest Latency Programmable Switch

By Amit Sanyal, Senior Director, Product Marketing, Marvell

If you’re one of the 100+ million monthly users of ChatGPT—or have dabbled with Google’s Bard or Microsoft’s Bing AI—you’re proof that AI has entered the mainstream consumer market.

And what’s entered the consumer mass-market will inevitably make its way to the enterprise, an even larger market for AI. There are hundreds of generative AI startups racing to make it so. And those responsible for making these AI tools accessible—cloud data center operators—are investing heavily to keep up with current and anticipated demand.

Of course, it’s not just the latest AI language models driving the coming infrastructure upgrade cycle. Operators will pay equal attention to improving general purpose cloud infrastructure too, as well as take steps to further automate and simplify operations.

To help operators meet their scaling and efficiency objectives, today Marvell introduces Teralynx® 10, a 51.2 Tbps programmable 5nm monolithic switch chip designed to address the operator bandwidth explosion while meeting stringent power- and cost-per-bit requirements. It’s intended for leaf and spine applications in next-generation data center networks, as well as AI/ML and high-performance computing (HPC) fabrics.

A single Teralynx 10 replaces twelve chips of the 12.8 Tbps generation, the last to see widespread deployment. The resulting savings are impressive: an 80% power reduction for equivalent capacity.
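One way to arrive at "twelve" (our interpretation; the post does not show the math) is to assume the 51.2 Tbps of capacity must be built non-blocking from 12.8 Tbps chips in a two-tier leaf/spine arrangement. In that case each leaf chip gives half its bandwidth to external ports and half to spine uplinks. The Python sketch below uses integer Gbps values to avoid floating-point rounding; `clos_chip_count` is a hypothetical helper.

```python
def clos_chip_count(target_gbps: int, chip_gbps: int) -> tuple[int, int]:
    """Leaf and spine chips for a non-blocking two-tier fabric with `target_gbps` of external capacity."""
    # Each leaf dedicates half its bandwidth to external ports, half to spine uplinks.
    leaf_external = chip_gbps // 2
    leaves = -(-target_gbps // leaf_external)  # ceiling division
    # The spine layer must terminate every leaf's uplink bandwidth.
    spines = -(-(leaves * leaf_external) // chip_gbps)
    return leaves, spines


leaves, spines = clos_chip_count(51_200, 12_800)
print(leaves, spines, leaves + spines)  # 8 4 12
```

Under this assumption, a single 51.2T chip also eliminates the chip-to-chip links and the extra switching hop that the twelve-chip fabric requires.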

-

February 21, 2023

Marvell and Aviz Networks Collaborate to Advance SONiC Deployment in Cloud and Enterprise Data Centers

By Kant Deshpande, Director, Product Management, Marvell

Disaggregation is the future

Disaggregation, the decoupling of hardware and software, is arguably the future of networking. Disaggregation lets customers select best-of-breed hardware and software, enabling rapid innovation by separating the hardware and software development paths. Disaggregation started with server virtualization and is being adapted to storage and networking technology. In networking, disaggregation promises that any network operating system (NOS) can be integrated with any switch silicon. Open-source standards such as ONIE allow a network switch to load and install any NOS during the boot process.

SONiC: the Linux of networking OS

Software for Open Networking in the Cloud (SONiC) has been gaining momentum as the preferred open-source, cloud-scale network operating system (NOS). In fact, Gartner predicts that by 2025, 40% of organizations that operate large data center networks (greater than 200 switches) will run SONiC in a production environment.[i] According to Gartner, due to readily expanding customer interest and a commercial ecosystem, there is a strong possibility SONiC will become analogous to Linux for network operating systems in the next three to six years.

-

February 14, 2023

Three Things Next-Generation Data Center Networking Needs

By Amit Sanyal, Senior Director, Product Marketing, Marvell

Data centers are arguably the most important buildings in the world. Virtually everything we do—from ordinary business transactions to keeping in touch with relatives and friends—is accomplished, or at least assisted, by racks of equipment in large, low-slung facilities.

And whether they know it or not, your family and friends are causing data center operators to spend more money. But it’s for a good cause: it allows your family and friends (and you) to continue their voracious consumption, purchasing and sharing of every kind of content—via the cloud.

Of course, it’s not only the personal habits of your family and friends that are causing operators to spend. Enterprises are equally responsible. They’re collecting data like never before, storing it in data lakes and applying analytics and machine learning tools, both to improve user experience (via recommendations, for example) and to process and analyze that data for economic gain. This is on top of the relentless expansion in the adoption of cloud services.

-

November 28, 2022

A Truly Remarkable Hack: Keeping SONiC Users Happy

By Kishore Atreya, Director of Product Management, Marvell

Recently the Linux Foundation hosted its annual ONE Summit for open networking, edge projects and solutions. For the first time, this year’s event included a “mini-summit” for SONiC, an open source networking operating system targeted for data center applications that’s been widely adopted by cloud customers. A variety of industry members gave presentations, including Marvell’s very own Vijay Vyas Mohan, who presented on the topic of Extensible Platform Serdes Libraries. In addition, the SONiC mini-summit included a hackathon to motivate users and developers to innovate new ways to solve customer problems.

So, what could we hack?

At Marvell, we believe that SONiC has utility not only for the data center, but to enable solutions that span from edge to cloud. Because it’s a data center NOS, SONiC is not optimized for edge use cases. It requires an expensive bill of materials to run, including a powerful CPU, a minimum of 8 to 16GB DDR, and an SSD. In the data center environment, these HW resources contribute less to the BOM cost than do the optics and switch ASIC. However, for edge use cases with 1G to 10G interfaces, the cost of the processor complex, primarily driven by the NOS, can be a much more significant contributor to overall system cost. For edge disaggregation with SONiC to be viable, the hardware cost needs to be comparable to that of a typical OEM-based solution. Today, that’s not possible.

-

October 05, 2022

Designing Energy-Efficient Chips

By Rebecca O'Neill, Global Head of ESG, Marvell

Today is Energy Efficiency Day. Energy, specifically the electricity required to power our chips, is top of mind here at Marvell. Our goal is to reduce the power consumption of our products with each generation for a given set of capabilities.

Our products play an essential role in powering data infrastructure spanning cloud and enterprise data centers, 5G carrier infrastructure, automotive vehicles, and industrial and enterprise networking. When we design our products, we focus on innovative features that deliver new capabilities while also improving performance, capacity and security to ultimately improve energy efficiency during product use.

These innovations help make the world’s data infrastructure more efficient and, by extension, reduce our collective impact on climate change. The use of our products by our customers contributes to Marvell’s Scope 3 greenhouse gas emissions, which is our biggest category of emissions.

-

October 20, 2021

By Radha Nagarajan, SVP and CTO, Optical and Copper Connectivity Business Group

As the volume of global data continues to grow exponentially, data center operators often confront a frustrating challenge: how to process a rising tsunami of terabytes within the limits of their facility’s electrical power supply – a constraint imposed by the physical capacity of the cables that bring electric power from the grid into their data center.

Fortunately, recent innovations in optical transmission technology – specifically, in the design of optical transceivers – have yielded tremendous gains in energy efficiency, which frees up electric power for more valuable computational work.

Recently, at the invitation of the Institute of Electrical and Electronics Engineers, my Marvell colleagues Ilya Lyubomirsky, Oscar Agazzi and I published a paper detailing these technological breakthroughs, titled "Low Power DSP-based Transceivers for Data Center Optical Fiber Communications."

-

October 04, 2021

By Khurram Malik, Senior Manager, Technical Marketing, Marvell

-

August 31, 2020

By Raghib Hussain, President, Products and Technologies

-

August 27, 2020

How to Get the Benefits of NVMe over Fabrics in 2020

By Todd Owens, Field Marketing Director, Marvell

As native Non-Volatile Memory Express (NVMe®) shared-storage arrays continue to enhance our ability to store and access more information faster across much bigger networks, customers of all sizes (enterprise, mid-market and SMB) confront a common question: what is required to take advantage of this quantum leap forward in speed and capacity?

NVMe provides an efficient command set specific to memory-based storage and delivers increased performance, and it is designed to run over PCIe 3.0 or PCIe 4.0 bus architectures. Offering 64,000 command queues with 64,000 commands per queue, it can also provide much more scalability than other storage protocols.
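To put "much more scalability" in numbers, the Python sketch below multiplies out the queue figures quoted in the text and compares them with a SATA/AHCI baseline of one queue of 32 commands (native command queuing). The AHCI figures are general SATA background, not something stated in the post, and the constant names are illustrative.

```python
# NVMe figures as quoted in the text; AHCI/NCQ figures are general SATA background.
NVME_QUEUES, NVME_CMDS_PER_QUEUE = 64_000, 64_000
AHCI_QUEUES, AHCI_CMDS_PER_QUEUE = 1, 32


def max_outstanding(queues: int, cmds_per_queue: int) -> int:
    # Upper bound on commands that can be queued at once across all queues.
    return queues * cmds_per_queue


nvme = max_outstanding(NVME_QUEUES, NVME_CMDS_PER_QUEUE)
ahci = max_outstanding(AHCI_QUEUES, AHCI_CMDS_PER_QUEUE)
print(f"NVMe: {nvme:,} commands, AHCI: {ahci} commands, ratio {nvme // ahci:,}x")
# NVMe: 4,096,000,000 commands, AHCI: 32 commands, ratio 128,000,000x
```

In practice, the benefit comes less from the theoretical maximum than from being able to give each CPU core its own queues, so cores can submit I/O in parallel without contending for a single shared queue.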

-

August 19, 2020

By Todd Owens, Field Marketing Director, Marvell

-

April 01, 2019

Transforming Data Center Architecture for a New Era of Connected Intelligence

By George Hervey, Senior Architect, Marvell

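Hyperscale cloud data center architectures have been in place for many years and have been sufficient to support global data demand, but that has not stopped major change from coming. Emerging 5G, industrial automation, smart cities and autonomous vehicles are all pushing data demand toward the network edge, where data must be directly accessible. Data centers need new architectures to support these new requirements, including lower power consumption, low latency, a smaller footprint and composable infrastructure.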

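The disaggregation of data storage resources enabled by composability makes for more flexible and efficient platforms that meet data center needs. Of course, this requires a capable switching solution to support it. The Marvell® Prestera® CX 8500 Ethernet switch portfolio, which operates at up to 12.8Tbps, features two key innovations aimed at redefining data center architecture: Forwarding Architecture using Slices of Terabit Ethernet Routers (FASTER) technology and Storage Aware Flow Engine (SAFE) technology.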

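With FASTER and SAFE technologies, the Marvell Prestera CX 8500 family can reduce overall network cost by more than 50%, lower power, space and latency, and pinpoint exactly where congestion occurs through complete per-flow visibility.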

Watch the video below to learn more about how Marvell Prestera CX 8500 devices offer a revolutionary approach to data center architecture.

-

August 03, 2018

IOPS and Latency

By Marvell PR Team

That’s why system administrators try to understand its performance and plan accordingly.

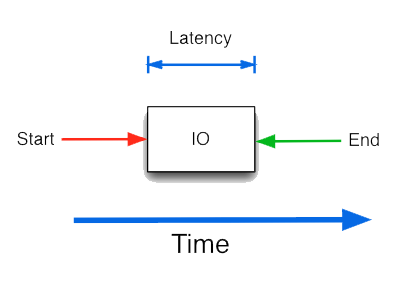

This can get quite complicated. Fortunately, storage performance can effectively be represented using two simple metrics: Input/Output operations per Second (IOPS) and Latency.

Let’s look at what these key metrics are and how to use them to optimize storage performance.

What is IOPS?

IOPS is a standard unit of measurement for the maximum number of reads and writes a storage device can complete per second. IOPS represents the number of transactions that can be performed, not bytes of data. To calculate throughput, multiply the IOPS number by the block size used in the I/O.

IOPS is a neutral measure of performance and can be used in a benchmark where two systems are compared using the same block size and read/write mix.
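The throughput rule above, and the reason benchmarks must fix the block size, can be shown in a few lines. This Python sketch uses an illustrative figure of 100,000 IOPS (not a measured result) and reports throughput in MB/s (10^6 bytes per second).

```python
def throughput_mb_s(iops: int, block_size_bytes: int) -> float:
    # Throughput = IOPS x block size, reported in MB/s (10^6 bytes per second).
    return iops * block_size_bytes / 1_000_000


# The same IOPS figure yields very different throughput at different block sizes,
# which is why a fair comparison must hold block size (and read/write mix) constant.
for bs in (4_096, 65_536):
    print(f"100,000 IOPS @ {bs // 1024} KiB -> {throughput_mb_s(100_000, bs):.1f} MB/s")
# 100,000 IOPS @ 4 KiB -> 409.6 MB/s
# 100,000 IOPS @ 64 KiB -> 6553.6 MB/s
```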

What is Latency?

Latency is the total time from when an operation is requested until the requestor receives a response. Latency includes the time spent in all subsystems and is a good indicator of congestion in the system.
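The post treats IOPS and latency as separate metrics; a standard queuing result, Little's Law (not mentioned in the original), ties them together: in steady state, the number of I/Os in flight equals IOPS multiplied by average latency. The Python sketch below assumes steady-state operation and a fixed queue depth; the queue depth of 32 and the latency values are illustrative only.

```python
def max_iops(queue_depth: int, latency_us: float) -> float:
    # Little's Law (steady state): I/Os in flight = IOPS x average latency,
    # so IOPS = queue depth / latency. Latency is given in microseconds.
    return queue_depth * 1_000_000 / latency_us


# With the queue depth fixed, rising latency (for example, from congestion) directly caps IOPS.
for lat in (500, 1_000):
    print(f"QD 32, {lat} us latency -> {max_iops(32, lat):,.0f} IOPS")
# QD 32, 500 us latency -> 64,000 IOPS
# QD 32, 1000 us latency -> 32,000 IOPS
```

This is one reason latency works as a congestion indicator: if latency doubles while the workload keeps the same number of I/Os outstanding, achievable IOPS halves.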

Find out more about Marvell’s QLogic Fibre Channel adapter technology at:

https://www.marvell.com/fibre-channel-adapters-and-controllers/qlogic-fibre-channel-adapters/

-

April 02, 2018

Understanding Today’s Network Telemetry Requirements

By Tal Mizrahi, Feature Definition Architect, Marvell

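In recent years, the way networks are implemented has changed fundamentally. As data demands grow, a broader range of functionality and higher levels of operational performance have become necessities. Along with this comes an even greater need for accurate, real-time measurement of that performance, and for in-depth analysis, so that potential problems can be found and resolved before they escalate.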

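The accelerating pace and growing complexity of today’s data networks mean that monitoring this activity is becoming increasingly difficult. As a result, more elaborate and inherently flexible telemetry mechanisms are now required, particularly in data centers and enterprise networks.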

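When it comes to extracting telemetry, there is a wide range of options, including passive monitoring, active measurement and hybrid approaches. One of the most common practices is to piggyback telemetry information onto packets that are traversing the network. This strategy is used in In-situ OAM (IOAM) and In-band Network Telemetry (INT), as well as in the context of Alternate Marking Performance Measurement (AM-PM).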
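Of the approaches named above, alternate marking is the simplest to illustrate. The Python sketch below is a simplified model, not Marvell’s implementation or the full IETF method: it flips a one-bit mark every `period` packets (real deployments typically flip on a fixed time interval), counts the packets in each marking block at two observation points, and subtracts the counts to get per-block loss. It assumes no block is lost entirely and that packets are not reordered across block boundaries; the function names are hypothetical.

```python
def mark(num_packets: int, period: int) -> list[int]:
    # Flip the one-bit mark every `period` packets (count-based for simplicity;
    # the alternate-marking method usually flips on a fixed time interval).
    return [(i // period) % 2 for i in range(num_packets)]


def block_counts(marks: list[int]) -> list[int]:
    # Each run of identical marks is one block; count the packets in each run.
    counts: list[int] = []
    prev = None
    for m in marks:
        if m != prev:
            counts.append(0)
            prev = m
        counts[-1] += 1
    return counts


def per_block_loss(upstream: list[int], downstream: list[int]) -> list[int]:
    # Loss per block = packets counted upstream minus packets counted downstream.
    return [u - d for u, d in zip(block_counts(upstream), block_counts(downstream))]


sent = mark(12, period=4)              # [0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0]
lost = {5, 9}                          # hypothetical packets dropped in transit
received = [m for i, m in enumerate(sent) if i not in lost]
print(per_block_loss(sent, received))  # [0, 1, 1]
```

The mark itself delimits the measurement blocks, so the two observation points need no extra packets or synchronized sequence numbers to compare their counters; this is what makes the method a hybrid of passive and active measurement.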

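Rather than being limited to one specific measurement protocol, Marvell’s approach is to provide a diverse and highly versatile toolset with which a wide variety of telemetry methods can be implemented. To learn more about this topic, including the passive and active measurement protocols that have long been in common use as well as new hybrid telemetry approaches, watch the video below and download the Marvell white paper.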

-

January 11, 2018

Storing the World’s Data

By Marvell PR Team

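In today’s data-centric world, data storage is a cornerstone of progress, but how data will be stored in the future is a widely debated question. What is clear is that data growth will continue to climb steeply. An IDC report titled "Data Age 2025" indicates that the volume of data generated worldwide will grow at a near-exponential rate. By 2025, the amount of data is expected to exceed 163 zettabytes (ZB), almost 8 times today’s volume and nearly 100 times the 2010 volume. The increasing use of cloud services, the widespread deployment of Internet of Things (IoT) nodes, and the prevalence of virtual and augmented reality applications, autonomous vehicles, machine learning and "big data" will all play a major role in the new data-driven era ahead.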

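Looking ahead, building smart cities will require the deployment of highly sophisticated infrastructure for purposes as varied as reducing traffic congestion, improving utility efficiency and optimizing the environment, driving data volumes even higher. A large proportion of future data will need to be accessible in real time. This requirement will influence the technologies we adopt, as well as where data is stored within the network. Important security considerations must also be taken into account.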

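Consequently, to keep costs under control and maximize operational efficiency, data centers and businesses will adopt tiered storage approaches, using the most appropriate storage medium for each kind of data to reduce the associated costs. The choice of medium will depend on how frequently the data is accessed and what level of latency is acceptable. This will require the use of numerous different technologies to make it fully economically viable, with cost and performance being important factors.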
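The tiering rule described above (pick the medium by access frequency and acceptable latency, at the lowest cost) can be sketched as a simple policy. In the Python sketch below, the tiers, their latencies and their access-rate limits are hypothetical, illustrative values rather than vendor specifications; tiers are assumed to be ordered from cheapest to most expensive per GB.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    typical_latency_ms: float    # hypothetical typical access latency
    max_accesses_per_day: float  # hypothetical access rate the tier is sized for


# Hypothetical tiers, ordered cheapest per GB first. Values are illustrative only.
TIERS = [
    Tier("HDD", typical_latency_ms=10.0, max_accesses_per_day=100),
    Tier("SATA SSD", typical_latency_ms=0.2, max_accesses_per_day=10_000),
    Tier("NVMe SSD", typical_latency_ms=0.02, max_accesses_per_day=math.inf),
]


def choose_tier(accesses_per_day: float, max_latency_ms: float) -> str:
    # Pick the cheapest tier that meets both the latency budget and the access frequency.
    for tier in TIERS:
        if tier.typical_latency_ms <= max_latency_ms and accesses_per_day <= tier.max_accesses_per_day:
            return tier.name
    raise ValueError("no tier meets the latency requirement")


print(choose_tier(accesses_per_day=1, max_latency_ms=50))          # HDD
print(choose_tier(accesses_per_day=1, max_latency_ms=1))           # SATA SSD
print(choose_tier(accesses_per_day=1_000_000, max_latency_ms=50))  # NVMe SSD
```

Ordering tiers by cost and taking the first match keeps the policy cost-driven: data is only promoted to a faster, pricier medium when latency or access frequency requires it.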

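Many different storage media are on the market today. Some are very mature, while others are still emerging. In some applications, hard disk drives (HDDs) are being replaced by solid-state drives (SSDs), and within the SSD space, the migration from SATA to NVMe is allowing SSD technology to realize its full performance potential. HDD capacities continue to increase substantially, and improving overall cost-effectiveness is making these devices more attractive. Cloud computing ensures enormous demand for data storage, which means HDDs have strong momentum in this area.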

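Other forms of memory will emerge to help us meet the challenges posed by growing storage demands. These include higher-capacity 3D stacked flash, as well as entirely new technologies such as phase-change memory, which offers fast write speeds and greater endurance. With the arrival of the NVMe over Fabrics (NVMf) interface, high-bandwidth, ultra-low-latency and highly scalable SSD data storage has a bright future.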

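Marvell recognized early on that data storage would become increasingly important and has made it a key focus, becoming a leading supplier of HDD controllers and consumer SSD controllers.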

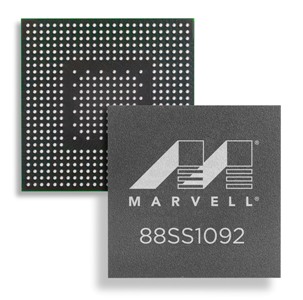

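Just 18 months after launch, shipments of the Marvell 88SS1074 SATA SSD controller, which features NANDEdge™ error-correction technology, surpassed 50 million units. With the award-winning 88NV11xx series of small-form-factor DRAM-less SSD controllers (built on a 28nm CMOS process), Marvell offers optimized, high-performance NVMe storage controller solutions for compact, slim handheld computing devices such as tablets and ultrabooks. These controllers support read speeds of 1600MB/s with very low power consumption, helping to conserve battery life. Marvell also offers solutions such as the 88SS1092 NVMe SSD controller, designed for new computing models, which enable data centers to share stored data, significantly reducing cost and improving performance efficiency.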

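The unprecedented growth of data means more storage is needed. Emerging applications and innovative technologies will push us to adopt new ways of increasing storage capacity, reducing latency and ensuring security. Marvell offers the industry a range of technologies to meet data storage needs, covering both SSD and HDD implementations with all the supporting interface types, from SAS and SATA through to PCIe and NVMe.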

To learn more about how Marvell products store the world’s data, visit www.marvell.com.

-

January 10, 2018

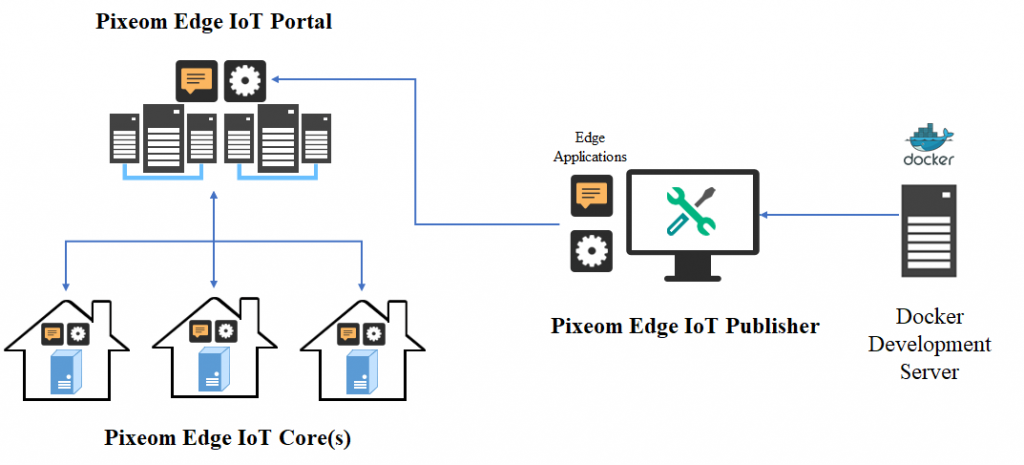

Marvell Extends Google Cloud to the Network Edge with the Pixeom Edge Platform, Demonstrating Its Compute Capabilities at CES 2018

By Maen Suleiman, Senior Software Product Line Manager, Marvell

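As more and more compute and storage services move into the cloud, the adoption of gigabit networks and the rollout of next-generation 5G networks will make still more bandwidth available. IoT and mobile applications connected to the network will become increasingly intelligent and computationally powerful, but funneling all of these resources into the cloud at once will inevitably put enormous strain on today’s networks.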

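Next-generation network architectures will no longer be able to follow the traditional centralized cloud model; instead, more intelligence will need to be distributed throughout the network infrastructure. High-performance computing hardware (and the associated software) will need to be placed at the edge of the network. A distributed operating model must provide the compute and security capabilities that edge devices require, in order to deliver compelling real-time services for applications such as automotive, virtual reality and industrial computing while overcoming inherent latency issues. In addition, high-resolution video and audio content will need to be analyzed.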

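With its high-performance ARMADA® embedded processors, Marvell provides efficient solutions that facilitate edge computing deployments. At the 2018 Consumer Electronics Show (CES), the Marvell and Pixeom teams are demonstrating a highly effective yet cost-efficient edge computing system that combines the Marvell MACCHIATObin community board with Pixeom technology to extend Google Cloud Platform™ services to the network edge. The MACCHIATObin community board runs the Pixeom Edge Platform software, which extends cloud capabilities by orchestrating and running Docker container-based microservices on the MACCHIATObin.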

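Today, sending large volumes of high-resolution video content to the cloud for analysis puts considerable strain on network infrastructure, consuming substantial resources at great expense. Using Marvell’s MACCHIATObin hardware as a foundation, Pixeom will demonstrate its container-based edge computing solution, delivering video analytics at the network edge. This unique combination of hardware and software provides a highly optimized and straightforward way to bring more processing and storage resources to the network edge. The technology can significantly improve operational efficiency and reduce latency.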

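The Marvell and Pixeom demonstration deploys Google TensorFlow™ microservices at the network edge, enabling a variety of key functions, including object detection, facial recognition, text reading (business cards, license plates, etc.) and intelligent notifications for security and safety alerts. The technology can also address potential applications ranging from video surveillance and autonomous vehicles to smart retail and artificial intelligence. Pixeom provides a complete edge computing solution that lets cloud service providers package, deploy and orchestrate containerized applications at scale, running an on-premises "edge IoT core". To accelerate development, the core comes with a range of built-in capabilities, including machine learning, FaaS, data processing, messaging, API management, analytics and offloading to Google Cloud.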

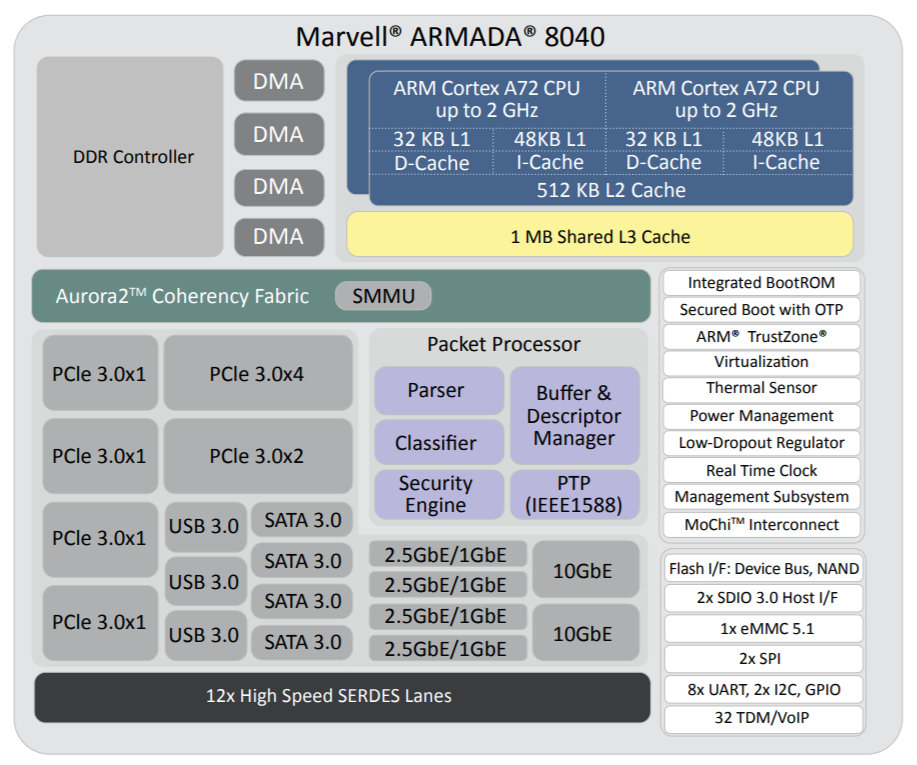

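At the heart of the MACCHIATObin community board is the Marvell ARMADA 8040 processor, which features a 64-bit quad-core ARMv8 CPU (running at up to 2.0GHz) and supports up to 16GB of DDR4 memory and a wide variety of I/O. Running Linux® on the MACCHIATObin board, the versatile Pixeom Edge IoT platform can facilitate the implementation of edge computing servers, or cloudlets, at the periphery of the cloud network. Marvell will showcase the capabilities of this popular hardware platform as a key part of the Pixeom demonstration, running advanced machine learning, data processing and IoT functions. The Pixeom Edge IoT platform uses role-based access, allowing developers in different geographic locations to collaborate on compelling edge computing implementations. Pixeom provides all the edge computing support that users of Marvell embedded processors need to build their own edge-based applications, thereby offloading the central network.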

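Marvell will also demonstrate the compatibility of its technology with Google Cloud Platform, enabling the management and analysis of edge computing resources deployed at scale. Once again, the MACCHIATObin board proves that it can provide the hardware resources engineers need, along with all the necessary processing, storage and connectivity capabilities. Those visiting Marvell’s suite at CES (Venetian, Level 3 - Murano 3304, 9th-12th January 2018, Las Vegas) will be able to see a series of different demonstrations of the MACCHIATObin community board running cloud workloads at the network edge. We look forward to seeing you there!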

-

January 10, 2018

Moving the World’s Data

By Marvell PR Team

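The way wired and wireless connections move data is undergoing a major transformation, driven by factors at many levels across a range of industries.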

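In the automotive sector, as new features and functions are introduced, traditional CAN- and LIN-based communication technologies are no longer sufficient. More advanced in-vehicle networks supporting gigabit-class data rates must be deployed to handle the large volumes of data generated by high-definition cameras, more sophisticated infotainment systems, automotive radar and LiDAR. With CAN, LIN and other automotive networking technologies not offering viable upgrade paths, it is clear that Ethernet will be the basis of future in-vehicle network infrastructure, offering the headroom needed as automobile design progresses toward the long-term goal of fully autonomous vehicles. Marvell has already taken a leading position here, having announced the industry’s first secure automotive Gigabit Ethernet switch, which delivers the speed that today’s data-heavy automotive designs require while ensuring secure operation and reducing the threat of hacking and denial-of-service (DoS) attacks.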

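With the arrival of Industry 4.0, machine-to-machine (M2M) communication will further raise the level of automation in modern factories and process plants. This communication provides access to data from large numbers of sensor nodes distributed across the production floor. In-depth, long-term analysis of this data will ultimately improve efficiency and productivity in the modern factory. Ethernet supporting gigabit data rates has proven itself as the leading candidate technology and is already widely deployed. Not only does it meet speed and bandwidth requirements and provide the robustness needed under a wide range of environmental conditions (such as high temperature, electrostatic discharge and vibration), it also offers the low latency that is essential for real-time control and analysis. Marvell has developed highly sophisticated Gigabit Ethernet transceivers targeted specifically at these applications, delivering superior performance.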

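In the data center, things are also changing, but the principles involved are somewhat different. Data centers are more focused on handling ever-larger data volumes while keeping the associated capital and operating expenses under control. Marvell has been championing a more cost-effective and streamlined approach through its Prestera® PX Passive Intelligent Port Extender (PIPE) products. Today’s data center engineers take a modular approach, deploying network infrastructure that meets their specific needs without introducing unnecessary complexity that would drive up cost and power consumption. The resulting solutions are fully scalable, more economical and more energy efficient.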

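In the wireless domain, ever greater pressure is being placed on WLAN hardware in home, office, municipal and retail environments. As user density grows and overall data capacity rises with it, network operators and service providers must adapt to the changes now taking place in user behavior. Wi-Fi connections are no longer used mainly for downloading data; they are increasingly used for uploading as well, which will be an important consideration. Many different applications will require data uploads, including augmented reality gaming, high-definition video sharing and cloud-based creative activities. To meet this demand, Wi-Fi technology must deliver increased bandwidth capacity on both the uplink and the downlink.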

The arrival of the highly anticipated 802.11ax protocol is set to transform the way Wi-Fi technology is implemented. Not only will it allow far greater user densities to be supported (thereby meeting the coverage demands of places where large numbers of people need Internet access, such as airports, sports stadia and concert venues), it also offers greater uplink and downlink data capacity, supporting multi-gigabit operation in both directions. Marvell aims to further advance Wi-Fi technology with its recently announced gigabit-class 802.11ax Wi-Fi system-on-chip (SoC), the industry’s first product to offer orthogonal frequency-division multiple access (OFDMA) and multi-user MIMO operation on both the uplink and the downlink.

To learn more about how Marvell products are addressing the surge in global data transmission, visit www.marvell.com.

-

November 06, 2017

USR Alliance: Enabling an Open Multi-Chip Module (MCM) Ecosystem

By Gidi Navon, Senior Principal Architect, Marvell

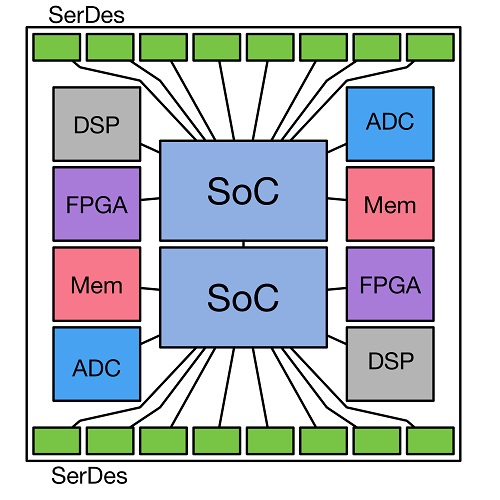

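The semiconductor industry is experiencing exponential growth and ever-faster change as it adapts to rising bandwidth demands, growing design complexity, the emergence of new process technologies and the integration of multidisciplinary technologies. All of this is happening against a backdrop of ever-shorter development cycles and increasingly fierce competition. Other technology-driven industries, in both software and hardware, are addressing similar challenges by establishing a range of open alliances and open standards. This blog does not attempt to list all the open alliances that now exist; the Open Compute Project, Open Data Path and the Linux Foundation are just a few of the most prominent examples. However, one technology area has yet to embrace this kind of open collaboration: the multi-chip module (MCM), in which multiple semiconductor dies are packaged together to create a complete system in a single package.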

The MCM concept has existed in the industry for some time and offers a number of technical and market advantages, including:

- Improved yield - Instead of creating large monolithic dies with low yield and higher cost (which sometimes cannot even be fabricated), splitting the silicon into multiple dies can significantly improve the yield of each building block and of the combined solution. Higher yield ultimately translates into lower cost.

- Optimized process - The final MCM product mixes and matches units built in different fabrication processes, so the process for each group of IP blocks with similar characteristics can be chosen to suit those blocks.

- Multiple fabrication plants - Different fabs, each with its own unique capabilities, can be utilized to create a given product.

- Product variety - New products are easily created by combining different numbers and types of devices to form innovative and cost‑optimized MCMs.

- Short product cycle time - Dies can be upgraded independently, which makes it easier to add new product capabilities and/or correct issues within a given die. For example, a new I/O interface can be integrated into a new product without redesigning the stable, unchanged parts of the solution, saving both time and money.

- Economy of scale - Each die can be reused in multiple applications and products, increasing its volume and yield as well as the overall return on the initial investment made in its development.
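The "improved yield" advantage can be quantified with the classic Poisson yield model, in which the probability that a die has zero defects is exp(-area × defect density). The model is a textbook approximation rather than something stated in the post. The Python sketch below assumes a hypothetical defect density of 0.1 defects/cm² and an 8 cm² design split into four equal dies, assumes dies are tested before assembly (known-good die), and ignores packaging and assembly yield.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    # Poisson yield model: probability that a die of this area has zero defects.
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_unit(total_area_cm2: float, num_dies: int, defects_per_cm2: float) -> float:
    # Split the design into `num_dies` equal dies; each good unit needs `num_dies` good dies.
    # Assumes known-good-die testing before assembly; packaging yield is ignored.
    die_area = total_area_cm2 / num_dies
    return num_dies * die_area / poisson_yield(die_area, defects_per_cm2)


D0 = 0.1  # hypothetical defect density, defects per cm^2
mono = silicon_per_good_unit(8.0, 1, D0)
mcm = silicon_per_good_unit(8.0, 4, D0)
print(f"monolithic: {mono:.1f} cm^2, 4-die MCM: {mcm:.1f} cm^2 of wafer per good unit")
# monolithic: 17.8 cm^2, 4-die MCM: 9.8 cm^2 of wafer per good unit
```

Because a defect only scraps the small die it lands on rather than the whole design, the split design wastes far less wafer area per good product under these assumptions.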

Subdividing large semiconductor devices and mounting them on an MCM has made the MCM the new printed circuit board (PCB), providing a smaller footprint, lower power, higher performance and expanded functionality.

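Now imagine that the advantages listed above are not limited to a single chip vendor but can be shared by the entire industry. By opening up and standardizing the interfaces between dies, a truly open platform can be introduced, one in which design teams from different companies, each with its own technical expertise, can create new products that no single company could achieve on its own.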

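This is exactly what the USR Alliance sets out to achieve. The USR Alliance has defined an Ultra Short Reach (USR) link optimized for very short-distance communication between components within a single package. Existing Very Short Reach (VSR) PHYs, which cross package boundaries and connectors, must contend with technical challenges that do not arise inside a package, whereas USR links can deliver higher bandwidth, lower power and smaller die area. In addition, the USR PHY is based on multi-wire differential signaling technology optimized specifically for the MCM environment.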

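Many applications can be implemented using USR links, such as CPUs, switches and routers, FPGAs, DSPs, analog components and various long-reach electro-optical interfaces.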

Figure 1: Examples of possible MCM layouts

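As an active promoter of the USR Alliance, Marvell is working to build an ecosystem of interoperable components, interconnects, protocols and software to help the semiconductor industry bring more value to market. The USR Alliance is working with industry and other standards development organizations to create PHY, MAC and software standards and interoperability agreements, and, to ensure broad interoperability, is also driving the development of a complete ecosystem for USR applications, including certification programs.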

For more information about the USR Alliance, visit: www.usr-alliance.org

-

October 03, 2017

Celebrating 20 Years of Wi-Fi: Part 1

By Prabhu Loganathan, Senior Director of Marketing, Connectivity Business Unit, Marvell

You can't see it, touch it or hear it, yet Wi-Fi® has had a tremendous impact on the modern world, and will continue to do so. From home wireless networks to offices and public spaces, the ubiquity of high-speed connectivity without reliance on cables has radically changed the way computing happens. It would not be much of an exaggeration to say that ready access to Wi-Fi lets us lead better lives, using our laptops, tablets and portable electronics in a far more straightforward manner and with a high degree of mobility, no longer tied down by a complex tangle of wires.

This first in a series of blogs will look at the history of Wi-Fi to see how it has overcome numerous technical challenges and evolved into the ultra-fast, highly convenient wireless standard that we know today.

Unlicensed Beginnings

While we now think of 802.11 wireless technology as predominantly connecting our personal computing devices and smartphones to the Internet, it was in fact initially invented as a means to connect up humble cash registers. In the late 1980s, NCR Corporation, a maker of retail hardware and point-of-sale (PoS) computer systems, had a big problem. Its customers - department stores and supermarkets - didn't want to dig up their floors each time they changed their store layout.

NCR's engineers were set the challenge of creating a wireless communication protocol. They succeeded in developing ‘WaveLAN’, which would come to be recognized as the precursor to Wi-Fi. Rather than keeping it as a purely proprietary protocol, NCR could see that by establishing it as a standard, the company would be able to position itself as a leader in the wireless connectivity market as it emerged.

Wireless Ethernet

Though the 802.11 wireless standard was released in 1997, it didn't take off immediately. Slow speeds and expensive hardware hampered its mass-market appeal for quite a while, but things were destined to change. 10 Mbit/s Ethernet was the networking standard of the day, and the IEEE 802.11 working group knew that if they could equal it, they would have a worthy wireless competitor. In 1999 they succeeded, creating 802.11b. It used the same 2.4 GHz ISM frequency band as the original 802.11 standard but raised the supported throughput considerably, to 11 Mbit/s. Wireless Ethernet was finally a reality.

Thanks to cheaper equipment and better nominal range, 802.11b proved to be by far the most popular wireless standard. But while it was more cost-effective than 802.11a (the 5 GHz variant ratified the same year), 802.11b still wasn't in a low enough price bracket for the average consumer.

Apple was launching a new line of computers at that time and wanted to make wireless networking part of it. Apple's terms were tough: it expected to get the cards at a $99 price point, although the volumes involved could potentially be huge.

PC makers saw Apple computers beating them to the punch and wanted wireless networking as well.

Working with engineers from Lucent, Microsoft made Wi-Fi connectivity native to the operating system. Users could get wirelessly connected without having to install third party drivers or software. With the release of Windows XP, Wi-Fi was now natively supported on millions of computers worldwide - it had officially made it into the ‘big time’.

-

September 18, 2017

Modular Networking Brings Cost-Effectiveness to Data Center Upgrades

By Yaron Zimmerman, Senior Staff Product Line Manager, Marvell

To support the server-to-server traffic that virtualized data centers require, the networking spine will generally rely on high capacity 40 Gbit/s and 100 Gbit/s switch fabrics with aggregate throughputs now hitting 12.8 Tbit/s. But the ‘one size fits all’ approach being employed to develop these switch fabrics quickly leads to a costly misalignment for data center owners.

An aggregation switch can gather the data from lower-speed network interfaces and so act as a front end to the core network fabric. But such switches tend to be far more complex than is actually needed, often being derived from older generations of core switch fabric. They perform a level of switching that is unnecessary and, as a result, are not cost-effective when they are primarily aggregating traffic on its way to the core network's 12.8 Tbit/s switching engines. The extra expense shows up not only in hardware complexity and the burden of managing an extra network tier, but also in power and air-conditioning costs. It is not unusual to find five or more fans inside each unit, used to cool the silicon switch.

An attractive feature of the IEEE 802.1BR port extender standard is that it allows port extenders to be cascaded, for even greater modularity.

Reference designs have already been built that use a simple 65 W open-frame power supply to feed all the devices required, even in a high-capacity configuration of 48 ports of 10 Gbit/s. Furthermore, the equipment dispenses with the need for external management: management can move to the core 12.8 Tbit/s switch fabric, providing further savings in operational expenditure. This demonstrates how a more modular approach can greatly improve the efficiency of today's and tomorrow's data center implementations.
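To put the reference design's numbers side by side, here is a back-of-the-envelope sketch. It assumes that each port extender forwards all of its downlink traffic upstream at 1:1 (no oversubscription) and ignores any fabric ports reserved for other purposes; the constant and function names are illustrative, not taken from a Marvell tool.

```python
# Back-of-the-envelope capacity check for the 48 x 10 Gbit/s reference design.
# Assumption (not from the article): each port extender sends all downlink
# traffic to the controlling bridge at 1:1, with no oversubscription.

PORTS_PER_EXTENDER = 48     # 48 downstream ports...
PORT_SPEED_GBPS = 10        # ...of 10 Gbit/s each
CORE_FABRIC_GBPS = 12_800   # 12.8 Tbit/s core switch fabric


def extender_downlink_gbps(ports: int, speed_gbps: int) -> int:
    """Aggregate downlink bandwidth of one port extender, in Gbit/s."""
    return ports * speed_gbps


def extenders_at_line_rate(fabric_gbps: int, per_extender_gbps: int) -> int:
    """Number of fully loaded extenders the fabric can absorb without oversubscription."""
    return fabric_gbps // per_extender_gbps


if __name__ == "__main__":
    per_pe = extender_downlink_gbps(PORTS_PER_EXTENDER, PORT_SPEED_GBPS)
    print(f"Downlink per extender: {per_pe} Gbit/s")
    print(f"Extenders served at 1:1: {extenders_at_line_rate(CORE_FABRIC_GBPS, per_pe)}")
```

In practice operators often accept some oversubscription, which would let a single core fabric feed more extenders than this idealized count.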

-

August 31, 2017

Protecting Embedded Storage with Hardware Encryption

By Jeroen Dorgelo, Director of Strategy, Storage Group, Marvell

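For industrial, military and a host of modern business applications, data security is naturally of paramount importance. While software-based encryption often works well in consumer and enterprise environments, the embedded systems used in industrial and military applications usually call for an approach that is simpler and intrinsically more robust.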

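Self-encrypting drives use on-board cryptographic processors to secure data at the drive level. This not only increases drive security automatically, but does so transparently to the user and the host operating system. By encrypting data automatically in the background, these drives provide the ease of use and resilient data security that embedded systems require.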

Embedded vs. Enterprise Data Security

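Both embedded and enterprise storage often require strong data security. Depending on the industry sector involved, this usually concerns the privacy of customers (or possibly patients), military data or business data. Beyond that, however, the similarities quickly diminish. Embedded storage is used in very different ways from enterprise storage, which leads to distinctly different approaches to data security.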

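Enterprise storage usually consists of interconnected disk arrays in multi-tier racks within a data center, whereas embedded storage is often simply a solid-state drive (SSD) installed in an embedded computer or device. Enterprises can usually control the physical security of the data center and enforce software access controls on the corporate network (or applications). Embedded devices, on the other hand, such as tablets, industrial computers, smartphones or medical devices, are often used in the field, in comparatively insecure environments. In this context, data security has no choice but to be implemented at the device level.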

Hardware-Based Full Disk Encryption

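Access control for embedded applications is far from guaranteed, so data security here should be as automatic and transparent as possible.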

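Full-disk, hardware-based encryption has proven to be the best way to achieve this. Full disk encryption (FDE) attains a high degree of both security and transparency by automatically encrypting everything on a drive. Whereas file-based encryption requires users to choose the files or folders to encrypt, and to provide a password or key to decrypt them, FDE works completely transparently. All data written to the drive is encrypted, yet once authenticated, a user can access the drive as easily as an unencrypted one. This not only makes FDE much easier to use, but also makes it a more reliable method of encryption, since all data is protected automatically. Even files that users forget to encrypt or cannot access themselves (such as hidden files, temporary files and swap space) are protected automatically.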

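Although FDE can also be implemented in software, hardware-based FDE performs better and offers stronger inherent protection. Hardware-based FDE is implemented at the drive level, in the form of a self-encrypting SSD: the SSD controller contains a hardware encryption engine and stores the private keys on the drive itself.

Because software-based FDE relies on the host processor to perform encryption, it is usually slower, whereas hardware-based FDE has much lower overhead because it can take advantage of the drive's integrated crypto-processor. Drive-based FDE can also encrypt the drive's master boot record, which software-based encryption cannot do.

Hardware-centric FDE is transparent not only to the user but also to the host operating system. It works silently in the background and needs no special software to run. Besides maximizing ease of use, this also means that sensitive encryption keys are kept separate from the host operating system and memory, since all private keys are stored on the drive itself.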
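The toy model below illustrates the transparency described above: the host only calls plain `read` and `write`, while everything that lands on the "media" is ciphertext and the media key never leaves the object. It is a sketch under loudly stated assumptions: the class and method names are hypothetical, an HMAC-SHA256 keystream stands in for the drive's AES engine (real self-encrypting drives commonly use AES in a mode such as XTS), and the password check is a placeholder for a real drive's key-management scheme. It is not suitable for protecting real data.

```python
import hashlib
import hmac
import secrets

SECTOR_SIZE = 512  # bytes per logical block (assumed for the sketch)


def _keystream(key: bytes, lba: int, length: int) -> bytes:
    """Toy per-sector keystream: HMAC-SHA256(key, lba || counter) blocks.

    Stand-in for the drive's hardware AES engine; not a real disk-encryption mode.
    """
    out = bytearray()
    counter = 0
    while len(out) < length:
        msg = lba.to_bytes(8, "big") + counter.to_bytes(4, "big")
        out += hmac.new(key, msg, hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


class ToySelfEncryptingDrive:
    """Host sees plain read/write; only ciphertext is ever stored on the media."""

    def __init__(self, password: str) -> None:
        self._password = password.encode()
        self._media_key = secrets.token_bytes(32)  # generated inside the drive, never exposed
        self._unlocked = False
        self.media: dict[int, bytes] = {}          # what an attacker could dump from the NAND

    def unlock(self, password: str) -> bool:
        # Placeholder check; real drives derive or unwrap keys from the credential.
        self._unlocked = hmac.compare_digest(password.encode(), self._password)
        return self._unlocked

    def _xor(self, data: bytes, lba: int) -> bytes:
        ks = _keystream(self._media_key, lba, len(data))
        return bytes(a ^ b for a, b in zip(data, ks))

    def write(self, lba: int, data: bytes) -> None:
        if not self._unlocked:
            raise PermissionError("drive is locked")
        sector = data.ljust(SECTOR_SIZE, b"\0")[:SECTOR_SIZE]
        self.media[lba] = self._xor(sector, lba)   # encrypt on the way in

    def read(self, lba: int) -> bytes:
        if not self._unlocked:
            raise PermissionError("drive is locked")
        return self._xor(self.media[lba], lba)     # decrypt on the way out


if __name__ == "__main__":
    drive = ToySelfEncryptingDrive("s3cret")
    drive.unlock("s3cret")
    drive.write(0, b"master boot record")
    print(drive.read(0).rstrip(b"\0"))   # the host sees plaintext
    print(drive.media[0][:18].hex())     # the media holds ciphertext
```

Note that the host code never touches a key or a cipher: that separation, rather than the toy cipher itself, is the point of the example.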

Improving Data Security

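Hardware-based FDE not only provides the transparent, easy-to-use encryption the market is asking for, but also brings specific data security benefits on modern SSDs. NAND cells have a finite service life, and modern SSDs use advanced wear-leveling algorithms to extend it as far as possible. Instead of overwriting the original NAND cells when data is updated, writes are moved around the drive as files change, which often leaves multiple copies of the same data scattered across the SSD. Wear leveling is very effective, but it makes file-based encryption and data erasure much harder, because there are now multiple copies of the data to encrypt or erase.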

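FDE solves both the encryption and the erasure problem for SSDs. Since all data is encrypted, there is no need to worry about unencrypted data remnants. And because the encryption method used (generally 256-bit AES) is highly secure, erasing the drive is as simple as erasing the private key.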
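The sketch below models why wear leveling leaves stale copies behind and why erasing the key (often called a cryptographic erase) takes care of all of them at once. Assumptions, stated plainly: the class is hypothetical, there is no garbage collection, and an HMAC-SHA256 keystream keyed per physical page stands in for the 256-bit AES engine mentioned above. A real drive manages a key hierarchy; here a single media key is simply replaced.

```python
import hashlib
import hmac
import secrets


def keystream(key: bytes, page: int, n: int) -> bytes:
    """Toy stand-in for the drive's AES engine: HMAC-SHA256 in counter mode."""
    blocks = b"".join(
        hmac.new(key, page.to_bytes(8, "big") + i.to_bytes(4, "big"), hashlib.sha256).digest()
        for i in range((n + 31) // 32)
    )
    return blocks[:n]


def xor(data: bytes, ks: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, ks))


class ToyWearLevelingSSD:
    """Each update lands on a fresh physical page; stale pages linger (no GC modeled)."""

    def __init__(self) -> None:
        self.key = secrets.token_bytes(32)  # media key held inside the drive
        self.pages: list[bytes] = []        # physical NAND pages, append-only
        self.l2p: dict[int, int] = {}       # logical block address -> current physical page

    def write(self, lba: int, data: bytes) -> None:
        page = len(self.pages)
        self.pages.append(xor(data, keystream(self.key, page, len(data))))
        self.l2p[lba] = page                # the previous page for this LBA is now stale

    def read(self, lba: int) -> bytes:
        page = self.l2p[lba]
        return xor(self.pages[page], keystream(self.key, page, len(self.pages[page])))

    def stale_pages(self) -> int:
        return len(self.pages) - len(self.l2p)

    def crypto_erase(self) -> None:
        # Replace the media key: every page, current or stale, becomes undecryptable.
        self.key = secrets.token_bytes(32)
        self.l2p.clear()


if __name__ == "__main__":
    ssd = ToyWearLevelingSSD()
    for version in (b"salary v1", b"salary v2", b"salary v3"):
        ssd.write(7, version)
    print("stale copies left by wear leveling:", ssd.stale_pages())
    ssd.crypto_erase()
    recovered = [xor(p, keystream(ssd.key, i, len(p))) for i, p in enumerate(ssd.pages)]
    print("plaintext recoverable after erase:", any(r.startswith(b"salary") for r in recovered))
```

Erasing a file-by-file way would require finding every stale page; replacing one key makes all of them unreadable in a single step.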

Solving Embedded Data Security

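Embedded devices are often a major security challenge for IT departments, because they are frequently used in uncontrolled environments, possibly by unauthorized personnel. Whereas enterprise IT has the authority to enforce company-wide data security policies and access controls, applying those methods to embedded devices in industrial settings or out in the field is far more difficult.

The simple solution for data security in embedded applications is hardware-based FDE. Self-encrypting drives with on-drive crypto-processors have very low processing overhead and run in the background, transparent to both users and the host operating system. Their ease of use also improves security, because administrators do not need to rely on users to enforce security policies, and private keys are never exposed to software or the operating system.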

-

July 17, 2017

Rightsizing Ethernet

By George Hervey, Principal Architect, Marvell

Implementation of cloud infrastructure is occurring at a phenomenal rate, outpacing Moore's Law.

More and more switches are required, thereby increasing capital costs, as well as management complexity. To tackle the rising expense issues, network disaggregation has become an increasingly popular approach. By separating the switch hardware from the software that runs on it, vendor lock-in is reduced or even eliminated.

Disaggregation alone, however, does not reduce switch count: the number of managed switches basically stays the same.

Network Disaggregation

Almost every application we use today, whether at home or in the work environment, connects to the cloud in some way. Our email providers, mobile apps, company websites, virtualized desktops and servers, all run on servers in the cloud.

As demand increases, Moore's law has struggled to keep up. Scaling data centers today involves scaling out - buying more compute and storage capacity, and subsequently investing in the networking to connect it all.

Traditionally, buying a switch, router or firewall from one vendor meant running that vendor's software on it as well. Larger cloud service providers saw an opportunity here, as they often had no shortage of skilled software engineers.

802.1BR

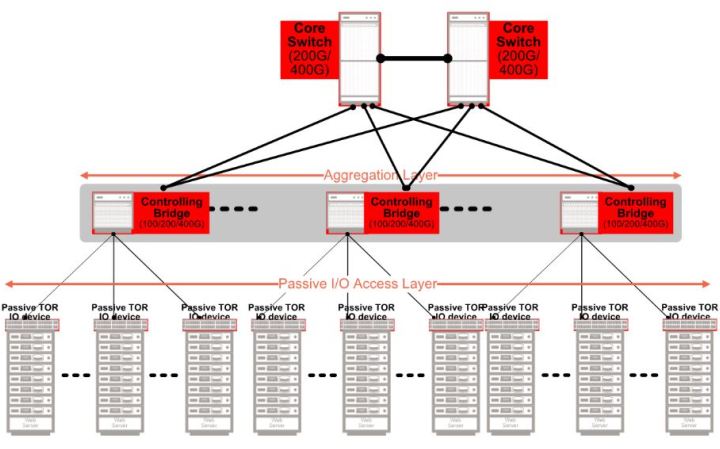

Today's cloud data centers rely on a layered architecture, often in a fat-tree or leaf-spine arrangement. Rows of racks, each with top-of-rack (ToR) switches, are connected to upstream switches on the network spine. The ToR switches are, in fact, performing simple aggregation of network traffic. Using relatively complex, energy-consuming switches for this task results in significant capital expense, as well as management costs and no shortage of headaches.

By replacing ToR switches with port extenders, port connectivity is extended directly from the rack to the upstream switch.
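What lets a port extender stay this simple is that, under 802.1BR, it only has to tag frames with an E-TAG whose E-CID identifies the extended port, leaving all forwarding decisions to the upstream controlling bridge. The sketch below packs and parses such a tag. The field layout (TPID 0x893F, then E-PCP, E-DEI, Ingress_E-CID_base, 2 reserved bits, GRP, E-CID_base, Ingress_E-CID_ext, E-CID_ext) reflects our reading of IEEE 802.1BR-2012 and should be checked against the standard before use; the function names are hypothetical.

```python
import struct

E_TAG_TPID = 0x893F  # EtherType identifying an 802.1BR E-TAG


def _check(name: str, value: int, bits: int) -> None:
    if not 0 <= value < (1 << bits):
        raise ValueError(f"{name}={value} does not fit in {bits} bits")


def pack_etag(e_pcp: int, e_dei: int, ingress_ecid_base: int, grp: int,
              ecid_base: int, ingress_ecid_ext: int = 0, ecid_ext: int = 0) -> bytes:
    """Build the 8-byte E-TAG: 16-bit TPID followed by 48 bits of tag fields."""
    for name, value, bits in (("e_pcp", e_pcp, 3), ("e_dei", e_dei, 1),
                              ("ingress_ecid_base", ingress_ecid_base, 12), ("grp", grp, 2),
                              ("ecid_base", ecid_base, 12),
                              ("ingress_ecid_ext", ingress_ecid_ext, 8), ("ecid_ext", ecid_ext, 8)):
        _check(name, value, bits)
    word1 = (e_pcp << 13) | (e_dei << 12) | ingress_ecid_base  # E-PCP | E-DEI | Ingress_E-CID_base
    word2 = (grp << 12) | ecid_base                             # reserved (2 bits, 0) | GRP | E-CID_base
    return struct.pack("!HHHBB", E_TAG_TPID, word1, word2, ingress_ecid_ext, ecid_ext)


def unpack_etag(tag: bytes) -> dict:
    """Parse an 8-byte E-TAG back into its fields."""
    tpid, word1, word2, ingress_ext, ext = struct.unpack("!HHHBB", tag)
    if tpid != E_TAG_TPID:
        raise ValueError(f"unexpected TPID 0x{tpid:04X}")
    return {
        "e_pcp": word1 >> 13,
        "e_dei": (word1 >> 12) & 0x1,
        "ingress_ecid_base": word1 & 0xFFF,
        "grp": (word2 >> 12) & 0x3,
        "ecid_base": word2 & 0xFFF,
        "ingress_ecid_ext": ingress_ext,
        "ecid_ext": ext,
    }


if __name__ == "__main__":
    # An E-TAG carrying E-CID 42, the identifier the controlling bridge associates with one extended port.
    tag = pack_etag(e_pcp=0, e_dei=0, ingress_ecid_base=0, grp=0, ecid_base=42)
    print(tag.hex())
    print(unpack_etag(tag))
```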

The Next Step in Network Disaggregation

Though many of the port extenders on the market today fulfill 802.1BR functionality, they do so using legacy components. Instead of being optimized for 802.1BR itself, they rely on traditional switch silicon. As a consequence, they erode the cost and power benefits that the new architecture could offer.

Designed from the ground up for 802.1BR, Marvell's Passive Intelligent Port Extender (PIPE) offering is specifically optimized for this architecture. PIPE is interoperable with 802.1BR compliant upstream bridge switches from all the industry’s leading OEMs. It enables fan-less, cost efficient port extenders to be deployed, which thereby provide upfront savings as well as ongoing operational savings for cloud data centers.

802.1BR's port extender architecture is bringing about the second wave of network disaggregation, in which ports are decoupled from the switches that manage them.

-

July 07, 2017

Extending the Lifecycle of 3.2T Switch-Based Architectures

By Yaron Zimmerman, Senior Staff Product Line Manager, Marvell and Yaniv Kopelman, Networking and Connectivity CTO, Marvell

While network demands have increased, Moore's law (which effectively defines the semiconductor industry) has not been able to keep up. Instead of scaling at the silicon level, data centers have had to scale out. This has come at a cost, though: ever-increasing capital and operational expenditure, as well as greater latency. In this challenging environment, a different approach is needed. To meet current expectations economically while retaining the capacity for future growth, data centers (as we will see) need to move towards a modularized approach.

Scaling out the datacenter

Data centers are destined to contend with demands for substantially higher network capacity as a greater number of services, plus more data storage, migrate to the cloud.

In turn, the additional switches needed to scale out consume more power, which eats into the overall power budget and leaves less power available for the data center servers. Not only is power wasted unnecessarily; the associated thermal management costs and overall OPEX are pushed upwards too. As these data centers scale out to meet demand, they often have to add more complex hierarchical structures to their architecture as well, increasing latency for both north-south and east-west traffic in the process.

We used to enjoy cost reductions as process sizes shrank from 90 nm to 65 nm to 40 nm. That is no longer strictly true, however. As process sizes go below the 28 nm node, yields are decreasing and prices are consequently going up. Traditional methods will therefore not be enough to address the problems of cloud-scale data centers.

PIPEs and Bridges

Today's data centers often run on a multi-tiered leaf and spine hierarchy. Racks with ToR switches connect to the network spine switches. These, in turn, connect to core switches, which subsequently connect to the Internet.

By following a modularized approach, it is possible to remove the ToR switches and replace them with simple IO devices - port extenders specifically. This effectively extends the IO ports of the spine switch all the way down to the ToR. What results is a passive ToR that is unmanaged. It simply passes the packets to the spine switch.

The spine switch now acts as the controlling bridge. It is able to manage the layer that was previously handled by the ToR switch. Through this arrangement, the IO ports of the network that were previously located at the ToR switch can be disaggregated from the logic at the spine switch that manages them. This modularized approach is being facilitated by the growing number of port extenders and controlling bridges from Marvell that are compatible with the IEEE 802.1BR bridge port extension standard.
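To make the division of labor concrete, here is a deliberately simplified model of a controlling bridge. Each downstream port on a passive port extender appears to the bridge as an extended port identified by an E-CID, and all MAC learning and forwarding happen at the bridge. The class names, labels and flooding rule are illustrative assumptions; VLANs, multicast replication, address aging and cascaded extenders are left out.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ExtendedPort:
    """A downstream port on a passive port extender, as seen by the controlling bridge."""
    extender: str  # hypothetical label for the port extender at the top of the rack
    ecid: int      # E-CID that identifies the port in 802.1BR tags


@dataclass
class ControllingBridge:
    """Toy model: learning and forwarding decisions live here, not in the passive ToR."""
    ports: set[ExtendedPort] = field(default_factory=set)
    fdb: dict[str, ExtendedPort] = field(default_factory=dict)  # MAC address -> extended port

    def attach(self, port: ExtendedPort) -> None:
        self.ports.add(port)

    def receive(self, ingress: ExtendedPort, src_mac: str, dst_mac: str) -> list[ExtendedPort]:
        """Learn the source MAC, then return the extended port(s) to send the frame out of."""
        self.fdb[src_mac] = ingress
        egress = self.fdb.get(dst_mac)
        if egress is not None:
            return [] if egress == ingress else [egress]
        # Unknown destination: flood to every other extended port (deterministic order).
        others = (p for p in self.ports if p != ingress)
        return sorted(others, key=lambda p: (p.extender, p.ecid))


if __name__ == "__main__":
    bridge = ControllingBridge()
    a, b, c = ExtendedPort("rack1-pe", 1), ExtendedPort("rack1-pe", 2), ExtendedPort("rack2-pe", 1)
    for port in (a, b, c):
        bridge.attach(port)
    print(bridge.receive(a, "00:00:00:00:00:0a", "00:00:00:00:00:0c"))  # unknown: flooded
    print(bridge.receive(c, "00:00:00:00:00:0c", "00:00:00:00:00:0a"))  # learned: unicast
```

In this model even traffic between two servers behind the same extender turns around at the controlling bridge, which is what allows the ToR device itself to remain unmanaged.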

Solving Data Center Scaling Challenges

Port extenders solve the latency problem by flattening the hierarchy. Instead of having conventional ‘leaf’ and ‘spine’ tiers, the port extender simply extends the IO ports of the spine switch to the ToR. Each server in the rack has a near-direct connection to the managing switch.

The passive port extender is a greatly simplified device compared to a managed switch. The result is lower up-front costs, lower power consumption and a simpler network management scheme.

-

June 07, 2017

社区开发板允许在数据中心、网络和存储生态系统中轻松采用 ARM 64 位性能

作者:Maen Suleiman,Marvell 软件产品线高级经理

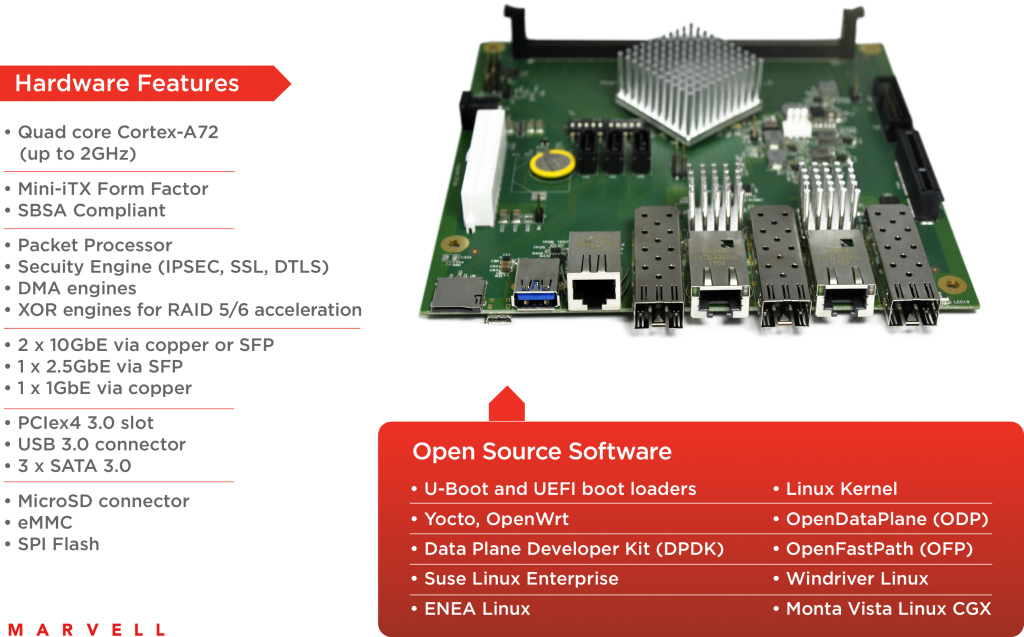

Marvell MACCHIATObin community board is first-of-its-kind, high-end ARM 64-bit networking and storage community board

The industry is answering the growing demands on data center, networking and storage infrastructure through technologies and concepts such as software defined networking (SDN), network function virtualization (NFV) and distributed storage. Making the most of these technologies and unleashing the potential of the new applications requires a collaborative approach.

A key way to foster such collaboration is through open-source ecosystems. The rise of Linux has demonstrated the effectiveness of such ecosystems and has helped steer the industry towards adopting open-source solutions (see, for example: "AT&T Runs Open Source White Box Switch in its Live Network", "SnapRoute and Dell EMC to Help Advance Linux Foundation's OpenSwitch Project" and "Nokia launches AirFrame Data Center for the Open Platform NFV community").

Communities have come together through Linux to provide additional value for the ecosystem. One example is the Linux Foundation, which currently sponsors more than 50 open source projects. Its activities cover various parts of the industry, from IoT (IoTivity, EdgeX Foundry) to full NFV solutions such as the Open Platform for NFV (OPNFV). Without the wide market acceptance of open-source communities and solutions, this would have been hard to conceive even a couple of years ago.

Although there are numerous important open-source software projects for data center applications, hardware on which to run them and evaluate solutions has been in short supply. Many ARM® development boards have been developed and manufactured, but they primarily target simple applications.

All these open source software ecosystems require a development platform that can provide a high-performance central processing unit (CPU), high-speed network connectivity and large memory support. But they also need to be accessible and affordable to ARM developers. Marvell MACCHIATObin® is the first ARM 64-bit community platform for open-source software communities that provides solutions for, among others, SDN, NFV and Distributed Storage.

A high-performance ARM 64-bit community platform

The Marvell MACCHIATObin community board is based on the Marvell hyperscale SBSA-compliant ARMADA® 8040 system on chip (SoC), which features four high-performance Cortex®-A72 ARM 64-bit CPUs.

SolidRun (https://www.solid-run.com/) started shipping the Marvell MACCHIATObin community board in March 2017, providing an early access of the hardware to open-source communities.

The Marvell MACCHIATObin community board is easy to deploy. It uses the compact mini-ITX form factor, enabling developers to purchase one of the many cases based on the popular standard mini-ITX case to meet their requirements. The ARMADA 8040 SoC itself is SBSA-compliant (http://infocenter.arm.com/help/topic/com.arm.doc.den0029/) to offer unified extensible firmware interface (UEFI) support.

In addition, the SoC provides two security engines that can perform full IPsec, DTLS and other protocol-offload functions at 10G rates.

For hardware expansion, the Marvell MACCHIATObin community board provides one PCIe 3.0 x4 slot and a USB 3.0 host connector. For non-volatile storage, options include a built-in eMMC device and a micro-SD card connector. Mass storage is available through three SATA 3.0 connectors. For debug, developers can access the board's processors through a virtual UART running over the micro-USB connector, a 20-pin connector for JTAG access, or two UART headers. Full details are given in the MACCHIATObin specification.

Open source software enables advanced applications

The Marvell MACCHIATObin community board comes with rich open source software that includes ARM Trusted Firmware (ATF), U-Boot, UEFI, Linux Kernel, Yocto, OpenWrt, OpenDataPlane (ODP), Data Plane Development Kit (DPDK), netmap and others; many of the Marvell MACCHIATObin open source software core components are available at: https://github.com/orgs/MarvellEmbeddedProcessors/.

To provide the Marvell MACCHIATObin community board with ready-made support for the open-source platforms used at the edge and in data centers for SDN, NFV and similar applications, standard operating systems such as SUSE Linux Enterprise, CentOS, Ubuntu and others should boot and run seamlessly on the board.

Because the ARMADA 8040 SoC is SBSA-compliant and supports UEFI with ACPI, and because Marvell is upstreaming Linux kernel support, standard operating systems can be enabled on the Marvell MACCHIATObin community board without the need for special porting.
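After installing a standard distribution, a quick way to confirm that it came up through the generic UEFI/ACPI path (rather than a board-specific boot flow) is to look at the firmware entries Linux exposes in sysfs. The helper below is a small sketch that relies on the conventions that `/sys/firmware/efi` exists when the kernel was started by UEFI firmware and that `/sys/firmware/acpi/tables` is present when ACPI tables are in use; the function name is hypothetical, and the check is only meaningful on Linux.

```python
from pathlib import Path


def firmware_boot_info(sysfs: Path = Path("/sys/firmware")) -> dict:
    """Report whether a Linux system booted via UEFI and exposes ACPI tables.

    Relies on Linux sysfs conventions: /sys/firmware/efi appears when the kernel
    was launched by UEFI firmware, and /sys/firmware/acpi/tables when ACPI is used.
    """
    return {
        "uefi": (sysfs / "efi").is_dir(),
        "acpi": (sysfs / "acpi" / "tables").is_dir(),
    }


if __name__ == "__main__":
    info = firmware_boot_info()
    print(f"Booted via UEFI: {info['uefi']}; ACPI tables present: {info['acpi']}")
```

The `sysfs` parameter exists only so the function can be pointed at a different directory, for example in tests.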

On top of this core software, a wide variety of ecosystem applications needed for the data center and edge applications can be assembled.

For example, the ARMADA 8040 SoC's high-speed networking and security engines will enable the kernel netdev community to develop and maintain features such as XDP and other kernel networking features on ARM 64-bit platforms. The security engine will also enable many other Linux kernel open-source communities to implement new offloads.

Thanks to the virtualization support available on the ARM Cortex-A72 processors, virtualization technology projects such as KVM and Xen can be enabled on the platform. Container technologies like LXC and Docker can also be enabled to maximize data center flexibility and to build a virtual CPE ecosystem, in which the Marvell MACCHIATObin community board can be used to develop edge applications on a 64-bit ARM platform.

In addition to the mainline Linux kernel, Marvell is upstreaming U-Boot and UEFI support, and is set to upstream and open the Marvell MACCHIATObin ODP and DPDK support. This makes the board an ideal community platform for both the ODP and DPDK communities, and will open the door to related communities that have based their ecosystems on ODP or DPDK. These may be user-space network-stack communities such as OpenFastPath and FD.io, or virtual switching technologies such as Open vSwitch (OVS) or Vector Packet Processing (VPP) that can make use of both the ARMADA 8040 SoC's virtualization support and its networking capabilities. Similarly, Marvell MACCHIATObin netmap support can enable the VALE virtual switching technology or security ecosystems such as pfSense.

Thanks to its hardware features and upstreamed software support, the Marvell MACCHIATObin community board is not limited to data center SDN and NFV applications. It is highly suited as a development platform for network and security products and applications such as network routers, security appliances, IoT gateways, industrial computing, home customer-provided equipment (CPE) platforms and wireless backhaul controllers; a new level of scalable and modular solutions can be further achieved when combining the Marvell MACCHIATObin community board with Marvell switches and PHY products.

Summary

The board supports a rich software ecosystem and has made available high-performance, high-speed networking ARM 64-bit community platforms at a price that is affordable for the majority of ARM developers, software vendors and other interested companies.

-

May 23, 2017

Marvell MACCHIATObin Community Board Begins Shipping

By Maen Suleiman, Senior Software Product Line Manager, Marvell

First-of-its-kind community platform makes ARM-64bit accessible for data center, networking and storage solutions developers

Now, with the shipping of the Marvell MACCHIATObin™ community board, developers and companies have access to a high-performance, affordable ARM®-based platform with the required technologies such as an ARMv8 64bit CPU, virtualization, high-speed networking and security accelerators, and the added benefit of open source software. SolidRun started shipping the Marvell MACCHIATObin community board in March 2017, providing an early access of the hardware to open-source communities.

The Marvell MACCHIATObin community board is a mini-ITX form-factor ARMv8 64bit network- and storage-oriented community platform. It is based on the Marvell® hyperscale SBSA-compliant ARMADA® 8040 system on chip (SoC) (http://www.marvell.com/embedded-processors/armada-80xx/) that features quad-core high-performance Cortex®-A72 ARM 64bit CPUs.

This power does not come at the cost of affordability: the Marvell MACCHIATObin community board is priced at $349.

The Marvell MACCHIATObin community board is easy to deploy. It uses the compact mini-ITX form factor, enabling developers and companies to purchase one of the many cases based on the popular standard mini-ITX case to meet their requirements. The ARMADA 8040 SoC itself is SBSA-compliant to offer unified extensible firmware interface (UEFI) support. You can find the full specification at: http://wiki.macchiatobin.net/tiki-index.php?page=About+MACCHIATObin.

To provide the Marvell MACCHIATObin community board with ready-made support for the open-source platforms used in SDN, NFV and similar applications, Marvell is upstreaming MACCHIATObin software support to the Linux kernel, U-Boot and UEFI, and is set to upstream and open the Marvell MACCHIATObin community board for ODP and DPDK support.

In addition to upstreaming the MACCHIATObin software support, Marvell added MACCHIATObin support to the ARMADA 8040 SDK and plans to make the ARMADA 8040 SDK publicly available. Many of the ARMADA 8040 SDK components are available at: https://github.com/orgs/MarvellEmbeddedProcessors/.

For more information about the many innovative features of the Marvell MACCHIATObin community board, please visit: http://wiki.macchiatobin.net. To place an order for the Marvell MACCHIATObin community board, please go to: http://macchiatobin.net/.

-

April 27, 2017

The Challenges of 11ac Wave 2 and 11ax in Wi-Fi Deployments: How to Upgrade Cost-Effectively to 2.5GBASE-T and 5GBASE-T

By Nick Ilyadis

IEEE has also noted that over the past two decades, IEEE 802.11 wireless local area networks (WLANs) have experienced tremendous growth with the proliferation of IEEE 802.11 devices, becoming a major means of Internet access for mobile computing. The IEEE 802.11ax specification is therefore under development as well. Giving equal time to Wikipedia, its definition of 802.11ax is: a type of WLAN designed to improve overall spectral efficiency in dense deployment scenarios, with a predicted top speed of around 10 Gbps. It operates in the 2.4 GHz and 5 GHz bands and, in addition to MIMO and MU-MIMO, introduces Orthogonal Frequency-Division Multiple Access (OFDMA) to improve spectral efficiency, as well as higher-order 1024 Quadrature Amplitude Modulation (1024-QAM) for better throughput. Though the nominal data rate is just 37 percent higher than that of 802.11ac, the new amendment will allow a 4X increase in user throughput. This new specification is due to be publicly released in 2019.
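The peak-rate comparison can be reproduced from the PHY parameters with one formula: data subcarriers × bits per subcarrier × coding rate ÷ symbol time. The sketch below applies it to a 160 MHz channel at the top modulation of each standard. The parameter values (468 and 1960 data subcarriers, 3.6 µs and 13.6 µs symbols including guard interval) are standard figures we supply as assumptions, not numbers from this article. With eight spatial streams they give roughly 6.9 and 9.6 Gbit/s, consistent with the "around 10 Gbps" figure above; the per-stream ratio comes out near 1.39, in the neighborhood of the 37 percent quoted, with the exact percentage depending on which configurations are compared. The 4X user-throughput gain comes from OFDMA and scheduling efficiency, which this arithmetic does not capture.

```python
import math


def peak_phy_rate_mbps(data_subcarriers: int, qam_order: int, coding_rate: float,
                       symbol_us: float, streams: int = 1) -> float:
    """Peak PHY rate = data subcarriers x bits per subcarrier x code rate / symbol time."""
    bits_per_subcarrier = math.log2(qam_order)         # 256-QAM -> 8 bits, 1024-QAM -> 10 bits
    info_bits_per_symbol = data_subcarriers * bits_per_subcarrier * coding_rate
    return info_bits_per_symbol / symbol_us * streams  # bits per microsecond == Mbit/s


# 802.11ac, 160 MHz, MCS 9 (256-QAM, rate 5/6): 3.2 us symbol + 0.4 us short guard interval
ac = peak_phy_rate_mbps(468, 256, 5 / 6, 3.6)
# 802.11ax, 160 MHz, MCS 11 (1024-QAM, rate 5/6): 12.8 us symbol + 0.8 us guard interval
ax = peak_phy_rate_mbps(1960, 1024, 5 / 6, 13.6)

if __name__ == "__main__":
    print(f"802.11ac: {ac:.1f} Mbit/s per stream, {8 * ac:.0f} Mbit/s with 8 streams")
    print(f"802.11ax: {ax:.1f} Mbit/s per stream, {8 * ax:.0f} Mbit/s with 8 streams")
    print(f"ax/ac per-stream ratio: {ax / ac:.2f}")
```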

Faster “Cats”: Cat 5, 5e, 6, 6e and On

And yes, even cabling is moving up to keep up (see "Differences between CAT5, CAT5e, CAT6 and CAT6e Cables" for specifics). Suffice it to say that each iteration is capable of moving more data faster, from the ubiquitous Cat 5 at 100 Mbps at 100 MHz over 100 meters of cabling to Cat 6e reaching 10,000 Mbps at 500 MHz over 100 meters. Cat 7 can operate at 600 MHz over 100 meters, with more “Cats” on the way. All of this, of course, is to keep up with streaming, communications, mega data or anything else being thrown at the network.

How to Keep Up Cost-Effectively with 2.5GBASE-T and 5GBASE-T

This is where Marvell steps in with a whole solution. Marvell’s products, including the Avastar wireless products, Alaska PHYs and Prestera switches, provide an optimized solution that will help support up to 2.5 and 5.0 Gbps speeds, using existing cabling. For example, the Marvell Avastar 88W8997 wireless processor was the industry's first 28nm, 11ac (wave-2), 2x2 MU-MIMO combo with full support for Bluetooth 4.2, and future BT5.0. To address switching, Marvell created the Marvell® Prestera® DX family of packet processors, which enables secure, high-density and intelligent 10GbE/2.5GbE/1GbE switching solutions at the access/edge and aggregation layers of Campus, Industrial, Small Medium Business (SMB) and Service Provider networks. And finally, the Marvell Alaska family of Ethernet transceivers are PHY devices which feature the industry's lowest power, highest performance and smallest form factor.

These transceivers help optimize form factors, as well as multiple port and cable options, with efficient power consumption and simple plug-and-play functionality, offering the most advanced and complete PHY products for the broadband market to support 2.5G and 5G data rates over Cat5e and Cat6 cables.
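The practical question behind "using existing cabling" is which rate a given cable run can carry. The helper below is a rough planning sketch based on the reach figures we understand IEEE 802.3bz and 802.3an to specify for certified channels: 2.5GBASE-T over Cat5e and 5GBASE-T over Cat6 at up to 100 m, and 10GBASE-T over Cat6 only up to about 55 m. These figures are our assumptions rather than the article's, 5GBASE-T over Cat5e (which often works but is not guaranteed) is deliberately left out, and the table and function names are hypothetical.

```python
# Planning helper: fastest BASE-T rate to expect on an existing cable run.
# Assumptions (not from the article): reach figures for certified channels per
# IEEE 802.3bz / 802.3an; only the Cat5e, Cat6 and Cat6a categories are modeled.
RATE_REACH = {
    # category: [(rate in Mbit/s, maximum channel length in meters), ...], fastest first
    "cat5e": [(2_500, 100), (1_000, 100)],
    "cat6": [(10_000, 55), (5_000, 100), (2_500, 100), (1_000, 100)],
    "cat6a": [(10_000, 100), (5_000, 100), (2_500, 100), (1_000, 100)],
}


def fastest_rate_mbps(category: str, length_m: float) -> int:
    """Return the highest rate (Mbit/s) specified for this cable category and length."""
    options = RATE_REACH.get(category.lower())
    if options is None:
        raise ValueError(f"unknown cable category: {category!r}")
    for rate, max_len in options:
        if length_m <= max_len:
            return rate
    raise ValueError("BASE-T channels are specified up to 100 m")


if __name__ == "__main__":
    for cat, length in (("Cat5e", 90), ("Cat6", 40), ("Cat6", 80)):
        print(f"{cat}, {length} m -> {fastest_rate_mbps(cat, length)} Mbit/s")
```

For an 11ac Wave 2 access point on an existing Cat5e run, this points to 2.5 Gbit/s as the safe upgrade target without re-cabling.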

You mean, I don’t have to leave the wiring closet?

# # #

-

April 27, 2017

Satisfying the Data Center's Hunger for Bandwidth: Marvell on Eight Data Center Trends

By Nick Ilyadis, Vice President of Product Line Technology, Marvell

IoT devices, online video streaming, increased throughput for servers and storage solutions – all have contributed to the massive explosion of data circulating through data centers and the increasing need for greater bandwidth. IT teams have been chartered with finding the solutions to support higher bandwidth to attain faster data speeds, yet must do it in the most cost-efficient way - a formidable task indeed. Marvell recently shared with eWeek about what it sees as the top trends in data centers as they try to keep up with the unprecedented demand for higher and higher bandwidth. Below are the top eight data center trends Marvell has identified as IT teams develop the blueprint for achieving high bandwidth, cost-effective solutions to keep up with explosive data growth.

Real-time analytics allow organizations to monitor and make adjustments as needed to effectively allocate precious network bandwidth and resources. Leveraging analytics has become a key tool for data center operators to maximize their investment.

Energy costs are often the main reason data center costs remain high. Ethernet solutions designed with greater power efficiency help data centers transition to the higher GbE rates needed to keep up with bandwidth demands, while keeping energy costs in check.

By using the NVMe protocol, data centers can exploit the full performance of SSDs, creating new compute models that no longer have the limitations of legacy rotational media. SSD performance can be maximized, while server clusters can be enabled to pool storage and share data access throughout the network.

8.) Transition from Servers to Network Storage With the growing amount of data transferred across networks, more data centers are deploying storage on networks vs. servers.

# # #

-

March 17, 2017

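Three Days, Two Talks and a New Product Line: Marvell Makes a Splash at the 2017 OCP U.S. Summit with IEEE 802.1BR-Compliant Data Center Solutions

By Michael Zimmerman, Vice President and General Manager, CSIBU, Marvell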

At last week’s 2017 OCP U.S. Summit, it was impossible to miss the buzz and activity happening at Marvell’s booth. Taking our mantra #MarvellOnTheMove to heart, the team worked tirelessly throughout the week to present and demo Marvell’s vision for the future of the data center, which came to fruition with the launch of our newest Prestera® PX Passive Intelligent Port Extender (PIPE) family.

But we’re getting ahead of ourselves…

Marvell kicked off OCP with two speaking sessions from its leading technologists. Yaniv Kopelman, Networking CTO of the Networking Group, presented "Extending the Lifecycle of 3.2T Switches,” a discussion on the concept of port extender technology and how to apply it to future data center architecture. Michael Zimmerman, vice president and general manager of the Networking Group, then spoke on "Modular Networking" and teased Marvell's first modular solution based on port extender technology.

Marvell's Prestera PX PIPE family purpose-built to reduce power consumption, complexity and cost in the data center

Marvell’s 88SS1092 NVMe SSD controller designed to help boost next-generation storage and data center systems

Marvell’s Prestera 98CX84xx switch family designed to help data centers break the 1W per 25G port barrier for 25G Top-of-Rack (ToR) applications

Marvell’s ARMADA® 64-bit ARM®-based modular SoCs developed to improve the flexibility, performance and efficiency of servers and network appliances in the data center

Marvell’s Alaska® C 100G/50G/25G Ethernet transceivers which enable low-power, high-performance and small form factor solutions

We’re especially excited to introduce our PIPE solution on the heels of OCP because of the dramatic impact we anticipate it will have on the data center…

Amidst all of the announcements, speaking sessions and demos, our very own George Hervey, principal architect, also sat down with Semiconductor Engineering’s Ed Sperling for a Tech Talk. In the white board session, George discussed the power efficiency of networking in the enterprise and how costs can be saved by rightsizing Ethernet equipment.

The summit was filled with activity for Marvell, and we can't wait to see how our customers benefit from our suite of data center solutions.

What were some of your OCP highlights? Did you get a chance to stop by the Marvell booth at the show? Tweet us at @marvellsemi to let us know, and check out all of the activity from last week. We want to hear from you!

-

March 13, 2017

Port Extender Technology Gives Network Switching a Fresh Look

By George Hervey, Principal Architect, Marvell

Our lives are increasingly dependent on cloud-based computing and storage infrastructure. It is no surprise, therefore, that the demands on such infrastructure are growing at an alarming rate, especially as big data and the internet of things start to make their impact. With an increasing number of applications and users, annual growth is believed to be 30x, and even up to 100x in some cases. Such growth leaves Moore's law and new chip developments unable to keep up with the needs of the computing and network infrastructure. These factors are pushing data and communication network providers to invest in multiple parallel computing and storage resources as a way of scaling to meet demand.

Within a data center a classic approach to networking is a hierarchical one, with an individual rack using a leaf switch – also termed a top-of-rack or ToR switch – to connect within the rack, a spine switch for a series of racks, and a core switch for the whole center. And, like the servers and storage appliances themselves, these switches all need to be managed. In the recent past there have usually been one or two vendors of data center network switches and the associated management control software, but things are changing fast. Most of the leading cloud service providers, with their significant buying power and technical skills, recognised that they could save substantial cost by designing and building their own network equipment. Many in the data center industry saw this as the first step in disaggregating the network hardware and the management software controlling it. With no shortage of software engineers, the cloud providers took the management software development in-house while outsourcing the hardware design.

The port extender concept rests on the belief that many nodes in the network don’t need the extensive management capabilities that most switches have. Essentially, it introduces a parent/child relationship: the controlling switch (the parent) is the managed switch, and the child, the port extender, is fed from it. This port extender approach was ratified as the networking standard IEEE 802.1BR in 2012, and every network switch built today complies with this standard.
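To make the parent/child relationship more concrete, the sketch below packs and unpacks the E-TAG that 802.1BR uses to tell the controlling bridge which extended port a frame belongs to. It follows the field layout as we understand it from the standard (TPID 0x893F, then E-PCP, E-DEI, Ingress_E-CID_base, two reserved bits, GRP, E-CID_base, Ingress_E-CID_ext, E-CID_ext, eight bytes in total). The `ETag` class is hypothetical, and the layout should be checked against IEEE 802.1BR before being relied on.

```python
import struct
from dataclasses import dataclass

ETAG_TPID = 0x893F  # EtherType used for the 802.1BR E-TAG (assumed per our reading of the standard)


@dataclass
class ETag:
    pcp: int = 0               # E-PCP: 3-bit priority
    dei: int = 0               # E-DEI: 1-bit drop eligible indicator
    ingress_ecid_base: int = 0 # 12 bits
    grp: int = 0               # 2 bits; nonzero marks a group (multicast) E-CID
    ecid_base: int = 0         # 12 bits: identifies the extended port
    ingress_ecid_ext: int = 0  # 8 bits
    ecid_ext: int = 0          # 8 bits

    def pack(self) -> bytes:
        # 16 bits after the TPID: PCP(3) | DEI(1) | Ingress_E-CID_base(12)
        word1 = ((self.pcp & 0x7) << 13) | ((self.dei & 0x1) << 12) | (self.ingress_ecid_base & 0xFFF)
        # Next 16 bits: reserved(2, sent as 0) | GRP(2) | E-CID_base(12)
        word2 = ((self.grp & 0x3) << 12) | (self.ecid_base & 0xFFF)
        return struct.pack("!HHHBB", ETAG_TPID, word1, word2,
                           self.ingress_ecid_ext & 0xFF, self.ecid_ext & 0xFF)

    @classmethod
    def unpack(cls, data: bytes) -> "ETag":
        tpid, word1, word2, in_ext, ext = struct.unpack("!HHHBB", data[:8])
        if tpid != ETAG_TPID:
            raise ValueError(f"not an E-TAG: TPID 0x{tpid:04X}")
        return cls(
            pcp=word1 >> 13,
            dei=(word1 >> 12) & 0x1,
            ingress_ecid_base=word1 & 0xFFF,
            grp=(word2 >> 12) & 0x3,
            ecid_base=word2 & 0xFFF,
            ingress_ecid_ext=in_ext,
            ecid_ext=ext,
        )


# Example: tag a frame for extended port E-CID 42 with priority 5.
tag = ETag(pcp=5, ecid_base=42)
print(tag.pack().hex())  # 893fa000002a0000
```

Because the port extender only has to add or strip this tag, it needs far less logic than a full switch; the forwarding decision stays in the parent.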

Look under the lid of a port extender and you’ll find the same switch chip used in the parent bridge. We have moved forward, sort of. Without a chip designed specifically as a port extender, switch vendors have continued to use their standard chipsets, missing potential cost and power savings. However, the truly modular approach to network switching has taken a leap forward with the launch of Marvell’s 802.1BR-compliant port extender IC, termed PIPE (passive intelligent port extender), which enables interoperability with a controlling bridge switch from any of the industry’s leading OEMs. It also offers attractive cost and power consumption benefits, addressing the shortfall that took the shine off the initial interest in port extender technology. Seen as the second stage of network disaggregation, this approach effectively decouples port connectivity from the processing power in the parent switch, creating a far more modular approach to networking.

Marvell’s Prestera® PIPE family targets data centers operating at 10GbE and 25GbE speeds that are challenged to achieve lower CAPEX and OPEX as the need for bandwidth increases. The Prestera PIPE family will facilitate the deployment of top-of-rack switches at half the cost and power consumption of a traditional Ethernet switch. The PIPE approach also includes a fast failover and resiliency function, essential for providing continuity and high availability to critical infrastructure.

-

March 08, 2017

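NVMe Controllers Break Through the Limits of Traditional Magnetic Media in the Data Center: Fast, Low-Cost NVMe SSD Shared Storage Enters Its Second Generation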

By Nick Ilyadis, VP of Portfolio Technology, Marvell

Marvell Debuts the 88SS1092 Second-Generation NVM Express SSD Controller at the OCP Summit

Data Center SSDs: NVMe and Where We’ve Been

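When solid-state drives (SSDs) were first introduced into the data center, the infrastructure mandated that they work within the confines of the then-current bus technology, such as Serial ATA (SATA) and Serial Attached SCSI (SAS), which had been developed for rotational media. Even very fast HDDs could not match the speed of SSDs, and the throughput of those buses became a major bottleneck that kept SSDs from delivering their full advantage. PCIe, a high-bandwidth bus widely used for networking, graphics, and other add-in devices, became a viable alternative, but PCIe paired with storage protocols originally developed for HDDs (such as AHCI) still could not fully exploit the performance of non-volatile storage media. The NVM Express (NVMe) industry working group was therefore formed to create a standardized set of protocols and commands for the PCIe bus, allowing multiple paths to take full advantage of SSDs in the data center. The NVMe specification was designed from the ground up to deliver high-bandwidth and low-latency storage access for current and future NVM technologies.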

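NVMe optimizes the command submission and completion paths and supports up to 64K commands in a single I/O queue. It also adds support for many enterprise features, such as end-to-end data protection (compatible with the T10 DIF and DIX standards), enhanced error reporting, and virtualization. In short, NVMe is a scalable storage protocol designed to unlock the full performance of PCIe SSDs, better meeting the needs of enterprise, data center, and client applications.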
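The submission and completion paths that NVMe optimizes are, at heart, pairs of circular queues: the host places commands at the tail of a submission queue, and the controller posts results to a completion queue that the host drains. The sketch below models only that head/tail bookkeeping. The `RingQueue` class and `controller_service` function are hypothetical, there is no real doorbell register or DMA, and completion-queue phase tags are omitted. As in NVMe, one slot is left unused so that "head equals tail" always means empty, which means a queue of N slots holds at most N minus 1 outstanding commands.

```python
class RingQueue:
    """Circular queue with head/tail indices, modeling an NVMe submission or completion queue."""

    MAX_ENTRIES = 65536  # NVMe allows up to 64K entries per I/O queue

    def __init__(self, size: int) -> None:
        if not 2 <= size <= self.MAX_ENTRIES:
            raise ValueError("queue size must be between 2 and 64K entries")
        self.size = size
        self.slots: list = [None] * size
        self.head = 0  # next slot the consumer reads
        self.tail = 0  # next slot the producer writes

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        # One slot stays unused so that head == tail can only mean "empty".
        return (self.tail + 1) % self.size == self.head

    def push(self, entry: dict) -> None:
        if self.is_full():
            raise OverflowError("queue full")
        self.slots[self.tail] = entry
        # For a submission queue, the host would now write this new tail to the SQ tail doorbell.
        self.tail = (self.tail + 1) % self.size

    def pop(self) -> dict:
        if self.is_empty():
            raise IndexError("queue empty")
        entry = self.slots[self.head]
        self.slots[self.head] = None
        # For a completion queue, the host would now write this new head to the CQ head doorbell.
        self.head = (self.head + 1) % self.size
        return entry


def controller_service(sq: RingQueue, cq: RingQueue) -> int:
    """Toy controller: consume submitted commands and post one completion for each."""
    completed = 0
    while not sq.is_empty() and not cq.is_full():
        cmd = sq.pop()
        cq.push({"cid": cmd["cid"], "status": 0})  # status 0 = success in this sketch
        completed += 1
    return completed


# Example: a 4-slot queue pair holds at most 3 outstanding commands.
sq, cq = RingQueue(4), RingQueue(4)
for cid in range(3):
    sq.push({"cid": cid, "opcode": "read"})
print(sq.is_full(), controller_service(sq, cq))  # True 3
```

Keeping the host and controller on opposite ends of each queue means neither side needs a lock to exchange commands, which is part of why the model scales to many deep queues.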

SSD Network Fabrics

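New NVMe controllers from companies like Marvell allowed the data center to share storage data to further maximize cost and performance efficiencies. Building storage pools from SSDs, rather than provisioning storage separately for each server, increases the total storage capacity available in the data center. In addition, creating a common area through which data is delivered to other servers makes shared data easy to access. As a result, these new computing models let the data center not only make full use of SSD speed but also deploy SSDs more economically throughout the data center, lowering overall cost and simplifying maintenance. Instead of adding SSDs to heavily loaded servers, capacity can be allocated dynamically from the storage pool to meet their needs.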

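Here is a simple example of how these fabrics work: if a system has 10 servers, each with an SSD on its PCIe bus, those SSDs can be combined into a storage pool that provides not only additional storage but also a way to centralize and share data access. Suppose one server is only 10% utilized while another is overloaded; the SSD pool can give the overloaded server more storage without adding an SSD to it. Scale this example up to hundreds of servers and the gains in cost, maintenance, and performance efficiency become substantial.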
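The ten-server example can be modeled as a toy capacity pool. In this sketch each server contributes one SSD of an assumed 1,000 GB, and an overloaded server draws extra capacity from the shared pool instead of receiving a new drive. The `SsdPool` class, the drive sizes, and the simple first-come allocation policy are all assumptions for illustration, not how any particular NVMe fabric actually allocates storage.

```python
class SsdPool:
    """Toy model: SSDs installed in several servers, pooled into one shared capacity."""

    def __init__(self, ssd_capacity_gb: dict[str, int]) -> None:
        self.total_gb = sum(ssd_capacity_gb.values())
        # Capacity each server currently draws from the pool (may exceed its own drive).
        self.used_gb = {server: 0 for server in ssd_capacity_gb}

    def free_gb(self) -> int:
        return self.total_gb - sum(self.used_gb.values())

    def allocate(self, server: str, gb: int) -> bool:
        """Grant `gb` to `server` from the shared pool, regardless of which drive holds it."""
        if server not in self.used_gb or gb <= 0 or gb > self.free_gb():
            return False
        self.used_gb[server] += gb
        return True

    def release(self, server: str, gb: int) -> None:
        self.used_gb[server] -= min(gb, self.used_gb[server])


# Ten servers, each assumed to contribute one 1,000 GB SSD.
pool = SsdPool({f"server{i}": 1000 for i in range(10)})
pool.allocate("server0", 100)    # lightly loaded: 10% of one drive's worth
pool.allocate("server1", 2500)   # overloaded: more than its own drive, no new SSD installed
print(pool.free_gb())            # 7400
```

The point of the model is that capacity is tracked per pool rather than per server, so an idle drive on one machine is not stranded while another machine runs out of space.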

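Marvell’s first NVMe SSD controller was introduced to serve exactly this new data center storage architecture. It supports four PCIe 3.0 lanes and, depending on host requirements, can be configured for either 4 GB/s or 2 GB/s of bandwidth. It uses advanced NVMe command processing to achieve outstanding IOPS performance. To make full use of PCIe bus bandwidth, Marvell’s innovative NVMe design uses extensive hardware assistance to accelerate data transfer over the PCIe link. This helps remove the traditional host-control bottleneck and unlock the true performance of flash.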
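The 4 GB/s and 2 GB/s figures line up with standard PCIe 3.0 arithmetic: each lane signals at 8 GT/s with 128b/130b encoding, giving roughly 0.985 GB/s per lane in each direction. The short calculation below reproduces the x4 and x2 numbers. It assumes the 2 GB/s mode corresponds to running two lanes (the text does not say how that mode is configured) and ignores packet and protocol overhead, so real throughput will be somewhat lower.

```python
GT_PER_S = 8.0        # PCIe 3.0 raw signaling rate per lane, in gigatransfers per second
ENCODING = 128 / 130  # 128b/130b line-code efficiency


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 bandwidth in GB/s, before protocol overhead."""
    bits_per_s = GT_PER_S * 1e9 * ENCODING * lanes  # one bit per transfer per lane
    return bits_per_s / 8 / 1e9


for lanes in (4, 2):
    print(f"x{lanes}: {pcie3_gbytes_per_s(lanes):.2f} GB/s")
# x4: 3.94 GB/s
# x2: 1.97 GB/s
```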

The Second-Generation NVMe Controller Is Now Available!

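Marvell’s second-generation NVMe SSD controller, the 88SS1092, has been released and has passed in-house testing as well as third-party operating system and platform compatibility testing. The Marvell® 88SS1092 is therefore ready to enhance next-generation storage and data center systems, and it debuted at the Open Compute Project (OCP) Summit held in San Jose, California, in March 2017.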

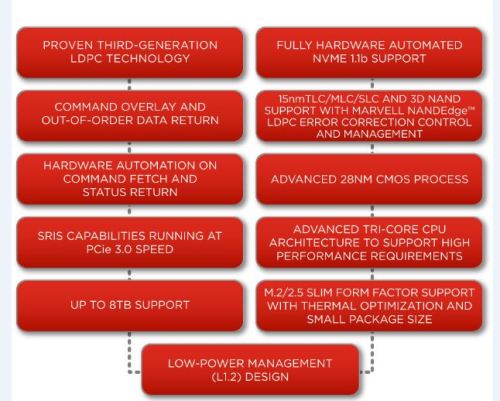

The Marvell 88SS1092 is Marvell's second-generation NVMe SSD controller, a PCIe 3.0 x4 endpoint that provides the full 4 GB/s interface to the host and helps remove performance bottlenecks. While the new controller advances a solid-state storage system toward a more fully flash-optimized architecture for greater performance, it also includes Marvell's third-generation error-correcting low-density parity check (LDPC) technology for additional reliability, improved endurance, and support for TLC NAND devices in addition to MLC NAND.

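Today, the speed and cost advantages of NVMe SSD shared storage are not only a reality but have already reached their second generation. The network paradigm has changed. Using the NVMe protocol to unlock the full performance of SSDs breaks through the limits of traditional magnetic media and makes an entirely new architecture possible. SSD performance can be pushed further still, while SSD storage pools and new network architectures enable pooled storage and shared data access. In today’s data center, the hard work of the NVMe working group is being put into practice as new controllers and technologies help optimize the performance and cost efficiency of SSD technology.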

Marvell 88SS1092 Second-Generation NVMe SSD Controller

New process and advanced NAND controller design includes:

-

January 20, 2017

A New Scaling Paradigm: Ethernet Port Extenders

By Michael Zimmerman, VP and General Manager, CSIBU, Marvell

Over the last three decades, Ethernet has grown to be the unifying communications infrastructure across all industries. More than 3M Ethernet ports are deployed daily across all speeds, from FE to 100GbE. In enterprise and carrier deployments, a combination of pizza-box (stackable) switches and high-density chassis-based switches is used to address the growth in Ethernet. Yet over the past several years, the Ethernet landscape has continued to change. With Ethernet deployment and innovation happening fastest in the data center, Ethernet switch architecture built for the data center dominates and forces adoption by the enterprise and carrier markets. This paradigm shift has made architecture decisions in the data center critical and influential across all Ethernet markets. However, the data center deployment model is different.

How Data Centers are Different

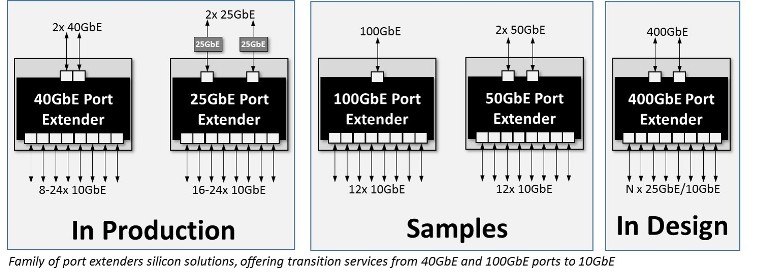

In the data center, the ultimate goal is to pack as many Ethernet ports as possible, at the highest commercially available speed, onto the Ethernet switch, and to make it as economical and power efficient as possible. The end point connected to the ToR switch is the server NIC, which typically runs at the highest speed available in the market (currently 10/25GbE, moving to 25/50GbE). Today, 128-port 25GbE switches are in deployment, moving to 64x 100GbE and beyond in the next few years. But while data centers are moving to higher port densities, higher port speeds, and homogeneous deployment, there is still a substantial market for lower speeds such as 10GbE, which continue to be deployed and must be served economically. Innovation in data centers drives higher density and higher port speeds, but many segments of the market still need solutions with lower port speeds and different densities. How can this problem be solved?

Bridging the Gap

The IEEE codified the 802.1BR port extender standard as the protocol that allows a fan-out of ports from an originating higher-speed port. In essence, one high-end, high-port-density switch can fan out hundreds or even thousands of lower-speed ports. The high-density switch is the control bridge, while the devices that fan out the lower-speed ports are the port extenders.
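The division of labor can be illustrated with a toy model: each port extender only maps its local downstream ports to E-CIDs and passes frames upstream, while the control bridge holds the single forwarding table and makes every switching decision. The class names, the E-CID numbering scheme, and the simple MAC learning and flooding are hypothetical simplifications, not Marvell’s implementation or full 802.1BR behavior (for example, real multicast can use group E-CIDs with replication at the extender, which this sketch ignores).

```python
class PortExtender:
    """Holds no forwarding table: it only maps local ports to E-CIDs and back."""

    def __init__(self, name: str, first_ecid: int, num_ports: int) -> None:
        self.name = name
        self.ecid_of_port = {p: first_ecid + p for p in range(num_ports)}
        self.port_of_ecid = {e: p for p, e in self.ecid_of_port.items()}

    def upstream(self, local_port: int, frame: dict) -> tuple[int, dict]:
        # Tag the frame with its ingress E-CID and send it to the control bridge.
        return self.ecid_of_port[local_port], frame

    def downstream(self, ecid: int, frame: dict) -> tuple[int, dict]:
        # Strip the tag and emit the frame on the matching local port.
        return self.port_of_ecid[ecid], frame


class ControlBridge:
    """The single managed entity: learns MAC -> E-CID and decides where every frame goes."""

    def __init__(self, extenders: list[PortExtender]) -> None:
        self.owner = {e: pe for pe in extenders for e in pe.port_of_ecid}
        self.fdb: dict[str, int] = {}  # MAC address -> E-CID

    def forward(self, ingress_ecid: int, frame: dict) -> list[tuple[str, int]]:
        self.fdb[frame["src"]] = ingress_ecid  # learn the source location
        dst = self.fdb.get(frame["dst"])
        # Known destination: one E-CID. Unknown: flood to every other extended port.
        targets = [dst] if dst is not None else [e for e in self.owner if e != ingress_ecid]
        out = []
        for ecid in targets:
            pe = self.owner[ecid]
            port, _ = pe.downstream(ecid, frame)
            out.append((pe.name, port))
        return out


pe1, pe2 = PortExtender("pe1", 100, 4), PortExtender("pe2", 200, 4)
cb = ControlBridge([pe1, pe2])
ecid, f = pe1.upstream(0, {"src": "aa", "dst": "bb"})
print(len(cb.forward(ecid, f)))  # 7: unknown destination flooded to all other extended ports
ecid, f = pe2.upstream(3, {"src": "bb", "dst": "aa"})
print(cb.forward(ecid, f))       # [('pe1', 0)]
```

Only `ControlBridge` carries state that needs managing, which mirrors the claim that the managed entities are limited to the control bridges.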

Why Use Port Extenders

In addition to allowing the data center switch to be repackaged as a control bridge, port extenders offer several unique advantages:

Port extenders require only a fraction of the cost, power, and board space of any other solution for serving Ethernet ports.

Port extenders run very little or no software. This simplifies operational deployment, since the only managed entities are the high-end control bridges.

Port extenders communicate with any high-end switch via the standard 802.1BR protocol (additional options, such as Marvell DSA or programmable headers, are possible).

Port extenders work well with any speed transition: 100GbE to 10GbE ports, 400GbE to 25GbE ports, etc.

Port extenders can operate in any downstream speed: 1GbE, 2.5GbE, 10GbE, 25GbE, etc.

Port extenders can be oversubscribed or non-oversubscribed, which means the ratio of upstream bandwidth to downstream bandwidth can be programmed from 1:1 to 1:4 (depending on the application). This alone can lower cost and power by a factor of 4.
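The oversubscription ratio is simply downstream bandwidth divided by upstream bandwidth. The calculation below takes a hypothetical port extender with 40 x 10GbE downstream ports and shows how many 100GbE uplinks (and therefore control-bridge ports) it needs at 1:1, 1:2, and 1:4. The port counts are assumptions chosen for illustration; needing a quarter of the uplink capacity at 1:4 is one way to read the 4x cost and power claim above.

```python
import math


def uplinks_needed(down_ports: int, down_gbps: int, up_gbps: int, oversub: int) -> int:
    """Uplinks required so that upstream:downstream bandwidth is no worse than 1:oversub."""
    downstream = down_ports * down_gbps
    return math.ceil(downstream / (oversub * up_gbps))


for ratio in (1, 2, 4):
    n = uplinks_needed(down_ports=40, down_gbps=10, up_gbps=100, oversub=ratio)
    print(f"1:{ratio} -> {n} x 100GbE uplink(s)")
# 1:1 -> 4 x 100GbE uplink(s)
# 1:2 -> 2 x 100GbE uplink(s)
# 1:4 -> 1 x 100GbE uplink(s)
```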

Marvell Port Extenders

Marvell has launched multiple purpose-built port extender products, which fan out 1GbE and 10GbE ports from higher-speed 40GbE and 100GbE ports. Along with the silicon, software reference code is available and can easily be integrated into a control bridge. Marvell has conducted interoperability tests with a variety of control bridge switches, including the leading switches in the market. The benchmarked design offers a 2x cost reduction and 2x power savings. An SDK, data sheet, and design package are available. Marvell IEEE 802.1BR port extenders are shipping now. Contact your sales representative for more information.

-

January 09, 2017

Ethernet Darwinism: Survival of the Fittest (Fastest)

By Michael Zimmerman, VP and General Manager, CSIBU, Marvell

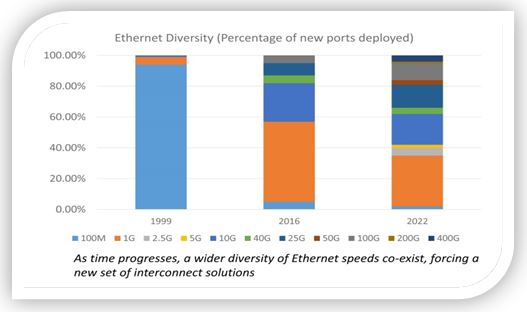

The most notable metric of Ethernet technology is the raw speed of communications. With the introduction of 100BASE-T, the massive 10BASE-T installed base was replaced, showing a clear Darwinian effect of the fittest (fastest) displacing the prior, older generation. However, when 1000BASE-T (GbE, Gigabit Ethernet) was introduced, contrary to industry experts’ predictions, it did not fully displace 100BASE-T, and the two speeds have co-existed for a long time (more than 10 years). In fact, 100BASE-T is still being deployed in many applications. The introduction and slow ramp of 10GBASE-T have not impacted the growth of GbE, and it is only recently that GbE ports began consistently growing year over year. This trend signaled a new evolutionary paradigm for Ethernet: the new doesn’t replace the old, and the co-existence of multiple variants is the general rule. The introduction of 40GbE and 25GbE added to the wide diversity of Ethernet speeds, and although 25GbE was rumored to displace 40GbE, 40GbE ports are expected to still be deployed over the next 10 years.[1]

Hence, a new market reality has emerged: there is less of a cannibalizing effect (i.e., newer speeds cannibalizing the old) and more co-existence of multiple variants. This new diversity will require a set of solutions that effectively support multiple-speed interconnect.

Ability to scale down economically to a few ports[2]

Support for multiple Ethernet speeds

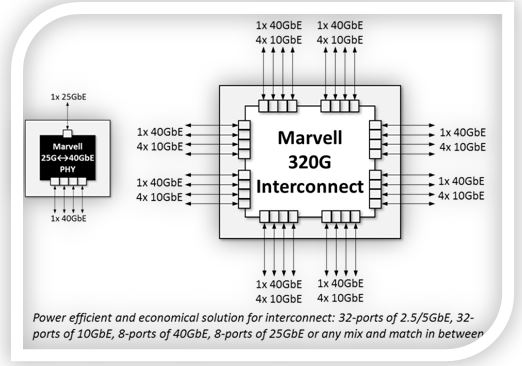

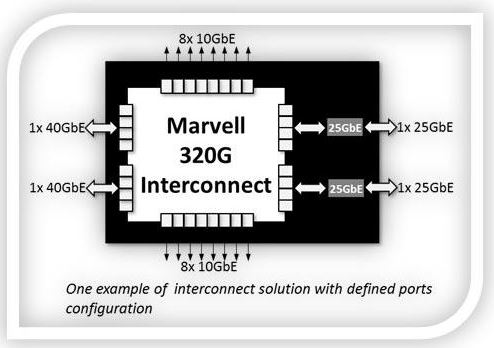

The first products in the family are the Prestera® 98DX83xx 320G interconnect switch and the Alaska® 88X5113 25G/40G Gearbox PHY. The 98DX83xx switch fans out up to 32 ports of 10GbE or 8 ports of 40GbE in an economical 24x20 mm package, with power of less than 0.5 W per 10G port.

The 88X5113 Gearbox converts a single 40GbE port to 25GbE. The combination of the two devices creates unique connectivity configurations for a myriad of Ethernet speeds and, most importantly, enables scaling down to a few ports. While data center-scale 25GbE switches have been widely available in 64-port and 128-port (and larger) configurations, a new, underserved market segment has emerged for lower port counts of 25GbE and 40GbE. Marvell has addressed this space with the new interconnect solution, allowing customers to configure any number of ports at different speeds while keeping the power envelope under 20 W, with a fraction of the hardware and thermal footprint of comparable data center solutions. Low-port-count connectivity at 10GbE, 25GbE, and 40GbE is now well addressed by Marvell. Samples and development boards with an SDK are ready, with the option of a complete application software package.
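The 320G figure and the per-port power bound for the 98DX83xx are easy to sanity-check. The snippet multiplies out the two quoted fan-out configurations and derives the implied upper bound on port power. It assumes the 0.5 W figure applies per 10 Gb/s of bandwidth, so a 40GbE port counts as four 10G ports, which the text does not spell out; the resulting 16 W bound is consistent with the sub-20 W envelope quoted for the combined solution.

```python
MAX_W_PER_10G = 0.5  # quoted bound: under 0.5 W per 10G port


def fanout_summary(ports: int, gbps_per_port: int) -> tuple[int, float]:
    """Return (aggregate Gb/s, implied upper bound on port power in W)."""
    total_gbps = ports * gbps_per_port
    ten_g_equivalents = total_gbps // 10  # assumption: a 40GbE port counts as four 10G ports
    return total_gbps, ten_g_equivalents * MAX_W_PER_10G


for ports, speed in ((32, 10), (8, 40)):
    total, watts = fanout_summary(ports, speed)
    print(f"{ports} x {speed}GbE: {total} Gb/s, under {watts:.0f} W")
# 32 x 10GbE: 320 Gb/s, under 16 W
# 8 x 40GbE: 320 Gb/s, under 16 W
```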