The Custom Era of Chips

This article was originally published in VentureBeat.

Artificial intelligence is about to face some serious growing pains.

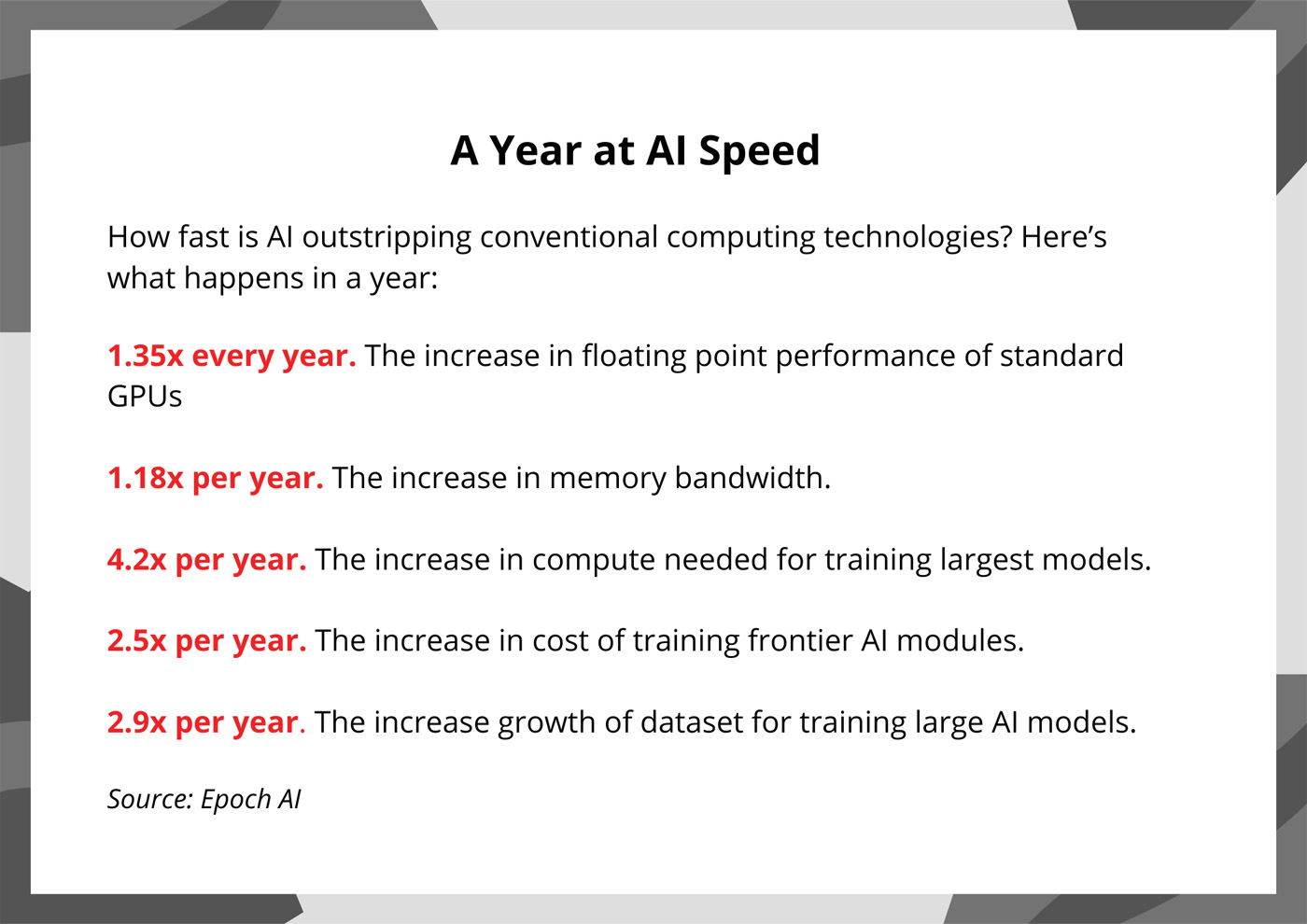

Demand for AI services is exploding globally. Unfortunately, so is the challenge of delivering those services in an economical and sustainable manner. AI power demand is forecast to grow by 44.7% annually, a surge that will double data center power consumption to 857 terawatt hours in 2028 [1]. If data centers were a nation today, that would make them the sixth largest consumer of electricity, right behind Japan [2]. It’s an imbalance that threatens the “smaller, cheaper, faster” mantra that has driven every major trend in technology for the last 50 years.

It also doesn’t have to happen. Custom silicon, meaning unique silicon optimized for specific use cases, is already demonstrating how we can continue to increase performance while cutting power even as Moore’s Law fades into history. Custom may account for 25% of AI accelerators (XPUs) by 2028 [3], and that’s just one category of chips going custom.

The Data Infrastructure is the Computer

Jensen Huang’s vision for AI factories is apt. These coming AI data centers will churn at an unrelenting pace 24/7. And, like manufacturing facilities, their ultimate success or failure for service providers will be determined by operational excellence, the two-word phrase that rules manufacturing. Are we consuming more, or less, energy per token than our competitor? Why is mean time to failure rising? What’s the current overall equipment effectiveness (OEE)? In oil and chemicals, the end products sold to customers are indistinguishable commodities. Where they differ is in process design, leveraging distinct combinations of technologies to squeeze out marginal gains.

The same will occur in AI. Cloud operators already are engaged in differentiating their backbone facilities. Some have adopted optical switching to reduce energy and latency. Others have been more aggressive at developing their own custom CPUs. In 2010, the main difference between a million-square-foot hyperscale data center and a data center inside a regional office was size. Both were built around the same core storage devices, servers and switches. Going forward, diversity will rule, and the operators with the lowest cost, least downtime and ability to roll out new differentiating services and applications will become the favorite of businesses and consumers.

The best infrastructure, in short, will win.

The Custom Concept

And the chief way to differentiate infrastructure will be through custom infrastructure enabled by custom semiconductors, i.e., chips containing unique IP or features for achieving leapfrog performance for an application. It’s a spectrum ranging from AI accelerators built around a distinct, singular design to a merchant chip containing additional custom IP, cores and firmware to optimize it for a particular software environment. While the focus is now primarily on higher value chips such as AI accelerators, every chip will get customized: Meta, for example, recently unveiled a custom NIC, a relatively unsung chip that connects servers to networks, to reduce the impact of downtime.

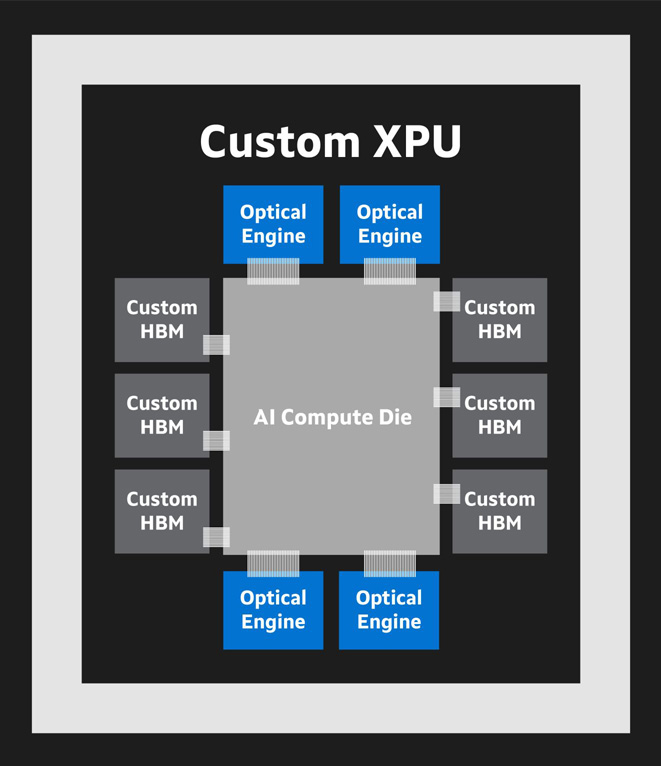

A single stack of high bandwidth memory (HBM) can require an interface with 2,048 pins to transfer data, or over 8,000 per XPU. Customizing can dramatically reduce power and pin count while increasing memory capacity. XPUs with custom HBM are expected in one to two years.

Customization will involve rethinking every aspect of semiconductor design. Some, for example, are looking at ways to optimize the base chip and interfaces for managing the gigabytes of high bandwidth memory (HBM) used as a cache in high-end AI accelerators. Optimization can potentially increase memory inside the chip package by up to 33%, reduce interface power by 70% and increase the available silicon real estate for logic functions by close to 25% [4].
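To make these figures concrete, here is a minimal Python sketch that applies them to a made-up baseline. The 2,048 pins per stack and the 33%, 70% and 25% figures come from the text. The four-stack configuration (which would give 8,192 pins, consistent with "over 8,000 per XPU"), the baseline capacity, power and area numbers, and names such as `HbmConfig` and `apply_custom_hbm` are assumptions for illustration only. The percentages are treated as best-case upper bounds, not guaranteed results.

```python
from dataclasses import dataclass

PINS_PER_HBM_STACK = 2048     # pins per HBM stack, from the article
MAX_CAPACITY_GAIN = 0.33      # "up to 33%" more memory inside the package
INTERFACE_POWER_CUT = 0.70    # "reduce interface power by 70%"
MAX_LOGIC_AREA_GAIN = 0.25    # "close to 25%" more silicon for logic


@dataclass(frozen=True)
class HbmConfig:
    """Hypothetical description of one XPU's memory subsystem."""
    stacks: int               # assumed number of HBM stacks per XPU
    capacity_gb: float        # assumed total HBM capacity
    interface_power_w: float  # assumed power spent on the HBM interface
    logic_area_mm2: float     # assumed silicon area available for logic


def interface_pins(stacks: int) -> int:
    """Total interface pins for a given number of standard HBM stacks."""
    return stacks * PINS_PER_HBM_STACK


def apply_custom_hbm(base: HbmConfig) -> HbmConfig:
    """Apply the article's best-case percentages to a baseline."""
    return HbmConfig(
        stacks=base.stacks,
        capacity_gb=base.capacity_gb * (1 + MAX_CAPACITY_GAIN),
        interface_power_w=base.interface_power_w * (1 - INTERFACE_POWER_CUT),
        logic_area_mm2=base.logic_area_mm2 * (1 + MAX_LOGIC_AREA_GAIN),
    )


if __name__ == "__main__":
    # All baseline values below are invented for the example.
    baseline = HbmConfig(stacks=4, capacity_gb=96.0,
                         interface_power_w=20.0, logic_area_mm2=400.0)
    print("pins:", interface_pins(baseline.stacks))
    print("custom:", apply_custom_hbm(baseline))
```

Swapping in real baseline numbers for a specific accelerator would show how much of the package budget the interface alone consumes.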

The custom category also includes new, emerging classes of interconnect chips aimed at scaling up the size and capabilities of computing systems. Today, servers typically contain eight or fewer XPUs and/or CPUs and all of the components are housed in an aluminum box that slides into a rack. In the future, the box becomes an octopus. AI systems will contain hundreds of accelerators along with storage and memory spread over several racks, connected by a portfolio of devices that includes optical engines tailored to the specifications of XPUs, CXL controllers, PCIe retimers, transmit-receive optical digital signal processors (DSPs) and more. Many of these devices didn’t even exist a few years ago but are expected to grow rapidly: 75% of AI and cloud servers may contain PCIe retimers within two years, according to The 650 Group [5]. While these devices and servers will be grounded in technology standards, architectures and designs will vary widely from cloud to cloud.

A Periodic Table for Semis

But how does one make custom semiconductors when designing a platform for producing 3nm or 2nm chips can cost over $500 million, and in a market where large language models (LLMs) change every few months? And how will these technologies work with emerging ideas like cold plate or immersion cooling?

As basic as it sounds, it starts with the elemental ingredients. Serializer-deserializer (SerDes) circuits are the textbook “most important technology in the world you’ve never heard about.” These components control the flow of data between chips and infrastructure devices such as switches and servers. An 800G optical module, for example, is built with eight 100G SerDes. A single data center rack will contain tens of thousands of SerDes. You can think of them as the molecules of networking: fundamental building blocks that have an outsized influence on the health of the system as a whole. Slightly reducing the picojoules consumed in transmitting bits across a SerDes can translate into substantial energy savings across a global infrastructure.
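The link between picojoules per bit and system power is simple arithmetic: energy per bit multiplied by bits per second gives watts. The Python sketch below works through it. The 800G module built from eight 100G SerDes comes from the text; the 5 pJ/bit and 4 pJ/bit efficiency figures and the one-million-lane fleet are hypothetical values chosen only to show how a small per-bit saving scales, and the function names are illustrative.

```python
def lanes_per_module(module_gbps: int, lane_gbps: int) -> int:
    """SerDes lanes needed to carry a module's bandwidth (rounded up)."""
    return -(-module_gbps // lane_gbps)  # ceiling division on integers


def serdes_power_watts(pj_per_bit: float, lane_gbps: float, lanes: int) -> float:
    """Power drawn by `lanes` SerDes running at full line rate.

    1 pJ/bit * 1 Gb/s = 1e-12 J/bit * 1e9 bit/s = 1e-3 W.
    """
    return pj_per_bit * lane_gbps * lanes * 1e-3


def power_saved_watts(old_pj: float, new_pj: float,
                      lane_gbps: float, lanes: int) -> float:
    """Power saved by improving SerDes efficiency from old_pj to new_pj."""
    return serdes_power_watts(old_pj - new_pj, lane_gbps, lanes)


if __name__ == "__main__":
    lanes = lanes_per_module(800, 100)  # 800G module, 100G SerDes
    print("lanes per 800G module:", lanes)

    # Hypothetical efficiency figure, not a measured value.
    print("module SerDes power (W):", serdes_power_watts(5.0, 100, lanes))

    # Hypothetical fleet of one million 100G lanes, improving by 1 pJ/bit.
    print("fleet saving (W):", power_saved_watts(5.0, 4.0, 100, 1_000_000))
```

The model assumes every lane runs at full rate all the time, so it gives an upper bound; real links idle, and the saving scales with utilization.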

Similarly, chip packaging now plays an outsized role in chip design because it provides a mechanism for streamlining power delivery and data paths while continuing to boost computing performance. More than 50% of the power in a chip can be consumed by moving data between different subsystems inside the chip itself.

Chip Industry 2.0?

As custom becomes the norm, we will also face a new dilemma: how does a company deliver custom products and still leverage the benefits of mass manufacturing? Semiconductor makers to date have succeeded by making very large numbers of a small handful of devices. “Custom” used to mean taking fairly simple actions like tweaking speed or cache size, similar to how a retailer might offer the same basic sweater in a few new colors.

The first step, and you’re seeing it already, will come through a better definition of the services involved in developing custom semiconductors. Some will likely concentrate mostly on chip design and IP. At the other end of the spectrum, you will see companies offer sourcing and manufacturability services. Still others may concentrate on specific devices and categories. Only a few select companies will provide the full portfolio of services. AI itself will also play a role, slashing the time and cost required for design tasks from months to, in many cases, minutes. This in turn will open the door to more customers and more classes of chips.

Will the industry succeed? Of course. It’s not the first time we’ve been tasked with doing the impossible.

1. IDC, Sept 2024.

2. Statista.

3. Marvell estimates based on analysts’ reports and internal forecasts.

4. Marvell internal estimate.

5. 650 Group, May 2024.

6. IBM.

7. Graphic: Epoch AI, June 2024.

# # #

Marvell and the M logo are trademarks of Marvell or its affiliates. Please visit www.marvell.com for a complete list of Marvell trademarks. Other names and brands may be claimed as the property of others.

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.
