In AI, The Voyage from Bigger to Better Is Underway

Bigger is better, right? Look at AI: the story swirls with superlatives.

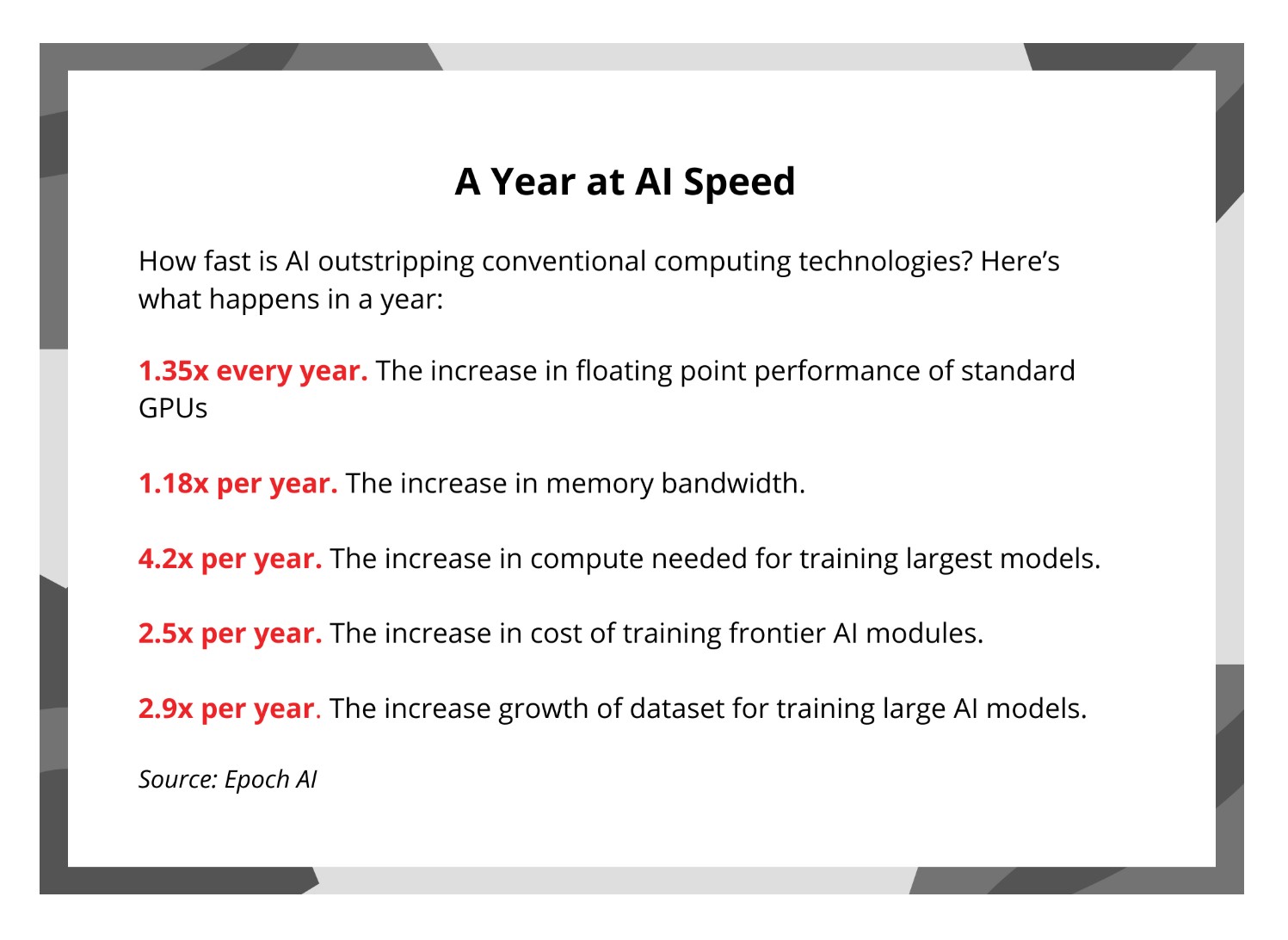

ChatGPT landed one million users within five days,1 far surpassing the pace of any previous technology. The compute required to train notable AI models increases 4.5x per year, while training data sets mushroom by 3x per year.2
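To get a feel for how quickly those yearly factors compound, here is a minimal sketch. The 4.5x and 3x figures come from the text above; the time horizons and the function name `compounded` are illustrative assumptions, and the output is arithmetic, not a forecast.

```python
# Yearly growth factors quoted in the text above.
COMPUTE_GROWTH_PER_YEAR = 4.5
DATA_GROWTH_PER_YEAR = 3.0


def compounded(factor_per_year: float, years: int) -> float:
    """Total multiplier after compounding a yearly factor for `years` years."""
    return factor_per_year ** years


if __name__ == "__main__":
    # The horizons below are assumptions chosen only for illustration.
    for years in (1, 3, 5):
        compute = compounded(COMPUTE_GROWTH_PER_YEAR, years)
        data = compounded(DATA_GROWTH_PER_YEAR, years)
        print(f"{years} yr: compute x{compute:,.1f}, data x{data:,.1f}")
```

If both rates held, compute would outgrow data by a widening margin each year, which is one way to see why the cost side of "bigger" escalates so fast.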

Bigger, however, comes at a price. Data center power consumption threatens to nearly triple by 2028, primarily because of AI.3 Water withdrawals are escalating as well: by 2027, AI data centers could need up to 6.6 billion cubic meters of water, or about half of what the UK uses.4 The economic and environmental toll may not be sustainable over the long run.

Conceptually, it is easy to understand how larger models translate into "better and more capable" models: more layers and more parameters contribute to quality and accuracy. Yet can we keep extracting value at the same cadence by continuing to increase size? Or will the curve start to plateau at some point?

Here Come the Experts

Instead, let’s shift from bigger to better.

We can, for instance, shift from massive, comprehensive models that can handle a broad swath of tasks to smaller expert models. You could think of them as human experts (doctors, lawyers, engineers), each specializing in their own field.

DeepSeek showed how fewer, smaller expert models can provide high efficiency and accelerated results. But it’s just a first step in how experts might operate in the future. Take health care. Science still doesn’t have a complete understanding of how certain conditions arise, why some people are more susceptible to diseases, and how best to treat individual patients. Doctors could consult expert models focused on different disciplines—endocrinology, epidemiology, nutrition, genetic contributions to particular diseases, local air and water quality, even personal tracking information—to attempt to develop a more focused course of treatment. These expert models could evolve at their own pace, thereby accelerating the overall rate of innovation, and researchers could experiment with cross-referencing different experts to test different hypotheses. In such an environment, the strengths of human intelligence and AI could complement each other.

An Archipelago for Accelerated Infrastructure

Expert models also pave the way for distributing infrastructure. A data center with 400,000 XPUs would require approximately 1 GW of power capacity. That’s as much as a city like San Francisco with one million residents.5 Such a project would take years of planning and billions of dollars and, at today’s pace of evolution, may no longer be enough by its first day of operation.
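The figures above imply a rough all-in power budget per XPU, which in turn hints at how many XPUs a smaller site could host. The sketch below is back-of-the-envelope arithmetic only: the 400,000 XPU and 1 GW numbers are from the text, while the 50 MW site size and the function names are hypothetical, and the per-XPU figure includes cooling, networking and other facility overhead rather than chip power alone.

```python
# Figures quoted in the text above.
XPU_COUNT = 400_000
SITE_POWER_WATTS = 1e9  # approximately 1 GW


def power_per_xpu_kw(site_power_watts: float, xpu_count: int) -> float:
    """All-in facility power per XPU, in kilowatts."""
    return site_power_watts / xpu_count / 1000


def xpus_supported(site_power_watts: float, kw_per_xpu: float) -> int:
    """How many XPUs a site could power at the same all-in budget per XPU."""
    return int(site_power_watts // (kw_per_xpu * 1000))


if __name__ == "__main__":
    kw = power_per_xpu_kw(SITE_POWER_WATTS, XPU_COUNT)
    print(f"All-in budget per XPU: {kw} kW")
    # Hypothetical smaller site; 50 MW is an assumption, not a source figure.
    print(f"XPUs in a 50 MW site: {xpus_supported(50e6, kw)}")
```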

By contrast, smaller expert models can be distributed across a constellation of smaller data centers that fit more seamlessly into existing grid capacity or land use regulations, thereby potentially reducing the time and cost of building new infrastructure. Data center constellations can also help reduce the power and cost consumed in operations as cloud service providers now have a footprint for shifting workloads between locations based on utilization, prevailing power prices, and performance factors such as proximity to the customer or latency. In this case, you’ll likely see AI helping AI, i.e. systems that could anticipate near-term power and computing demand and shift applications before problems emerge. New technologies such as coherent lite for efficiently linking data centers between 2km and 20km apart are laying the groundwork for these architectures.
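As a rough illustration of shifting workloads between locations, the sketch below scores each site on the three factors named above (utilization, power price, latency) and picks the cheapest one that still has headroom. Everything in it is an assumption made for illustration: the `Site` fields, the weights, the normalization caps and the 90% utilization cutoff are hypothetical, and a real scheduler would also forecast demand rather than react to a snapshot.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Site:
    name: str
    utilization: float  # fraction of capacity in use, 0.0 to 1.0
    power_price: float  # USD per MWh (illustrative values)
    latency_ms: float   # round-trip latency to the customer


def placement_cost(
    site: Site,
    max_price: float = 200.0,
    max_latency_ms: float = 50.0,
    weights: tuple = (0.4, 0.3, 0.3),
) -> float:
    """Weighted sum of normalized utilization, power price and latency.

    Lower is better. Price and latency are capped at 1.0 after scaling.
    """
    w_util, w_price, w_latency = weights
    return (
        w_util * site.utilization
        + w_price * min(site.power_price / max_price, 1.0)
        + w_latency * min(site.latency_ms / max_latency_ms, 1.0)
    )


def choose_site(sites: Sequence[Site], max_utilization: float = 0.9) -> Optional[Site]:
    """Return the lowest-cost site with spare capacity, or None if all are full."""
    candidates = [s for s in sites if s.utilization < max_utilization]
    if not candidates:
        return None
    return min(candidates, key=placement_cost)


if __name__ == "__main__":
    sites = [
        Site("site-a", utilization=0.95, power_price=40.0, latency_ms=5.0),
        Site("site-b", utilization=0.50, power_price=120.0, latency_ms=10.0),
        Site("site-c", utilization=0.60, power_price=60.0, latency_ms=30.0),
    ]
    best = choose_site(sites)
    print(best.name if best else "no site has headroom")
```

In this toy data, the cheapest-power site is skipped because it is nearly full, which is the kind of trade-off a constellation makes possible and a single large site does not.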

While constellations will be used for training and inference, the impact on inference will be particularly notable. Without widespread, efficient inferencing, even the best-trained models will have limited real-world impact. Inference is where artificial intelligence meets reality, and this is where we would like to maximize efficiency and maximize returns on the massive investments that are being made.

A more flexible infrastructure will demand fundamental advances at the chip and system level. At scale, and these AI platforms are at as large a scale as there is, every extra milliwatt turns into a megawatt, every overspent dollar snowballs into millions of dollars, and every non-optimized nanosecond translates into significant inefficiencies. Custom design boosts performance per bit by tailoring silicon to the exact needs of the application and provider. By 2028, Marvell estimates, custom devices could account for 25% of the market for AI processors.5 Optics, even at the chip level, will also become more pervasive. While copper will continue to be used where possible, optics will increasingly take over as data centers scale, and just over the horizon we can already see the vision of optics everywhere within the AI data center.

The Human Factor

Finally, we have to expand the role human intelligence can play. Specialized models, distributed computing resources and AI-enhanced technology will make infrastructure more efficient, but adding learned experience and an ability to balance interests will allow us to deploy it in a way that works for all. Will AI replace people? I don’t believe so. Augment, yes. But people with AI will accomplish far more than AI or people on their own.

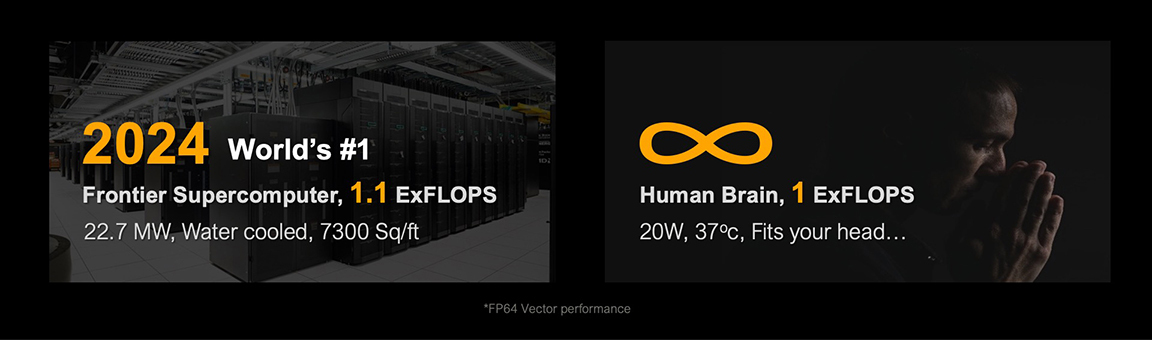

You Can Leave Your Hat On. Frontier, the world’s fastest supercomputer, uses 1 million times more energy and takes up close to 15,000x more space than the average human brain but only enjoys a marginal performance gain.

----

1. Exploding Topics.

2. Epoch AI. https://epoch.ai/trends

3. US Dept. of Energy, Dec 2024.

4. UC Riverside and UT Arlington, Oct 2023.

5. Marvell Estimates published at Industry Analyst Day, April 2024.

# # #

Marvell and the M logo are trademarks of Marvell or its affiliates. Please visit www.marvell.com for a complete list of Marvell trademarks. Other names and brands may be claimed as the property of others.

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.


Tags: AI, data centers, AI infrastructure
