With AI computing and cloud data centers requiring unprecedented levels of performance and power, Marvell is leading the way with transformative optical interconnect solutions for accelerated infrastructure to meet the rising demand for network bandwidth.

At the ECOC 2024 Exhibition Industry Awards event, Marvell received the Most Innovative Pluggable Transceiver/Co-Packaged Module Award for the Marvell® COLORZ® 800 family. Launched in 2020 for ECOC’s 25th anniversary, the ECOC Exhibition Industry Awards spotlight innovation in optical communications, transport, and photonic technologies. This recognition highlights the company’s innovations in ZR/ZR+ technology for accelerated infrastructure and demonstrates its critical role in driving cloud and AI workloads.

Coherent optical digital signal processors (DSPs) are the long-haul truckers of the communications world. The chips are essential ingredients in the 600+ subsea Internet cables that crisscross the oceans and in the extended geographic links weaving together telecommunications networks and clouds.

One of the most critical trends in long-distance communications has been the shift away from large, rack-scale transport equipment boxes running embedded DSPs, often from the same vendor. In their place are pluggable modules built on standardized form factors, running DSPs from silicon suppliers that are tuned to the power limits of the modules.

With the advent of 800G ZR/ZR+ modules, the market arrives at another turning point. Here’s what you need to know.

It’s the Magic of Modularity

PCs, smartphones, solar panels and other technologies that experienced rapid adoption had one thing in common: general agreement on the key ingredients. Building products around select components, accepted standards and modular form factors allowed an ecosystem of suppliers to sprout. For customers, that meant fewer shortages, lower prices and accelerated innovation.

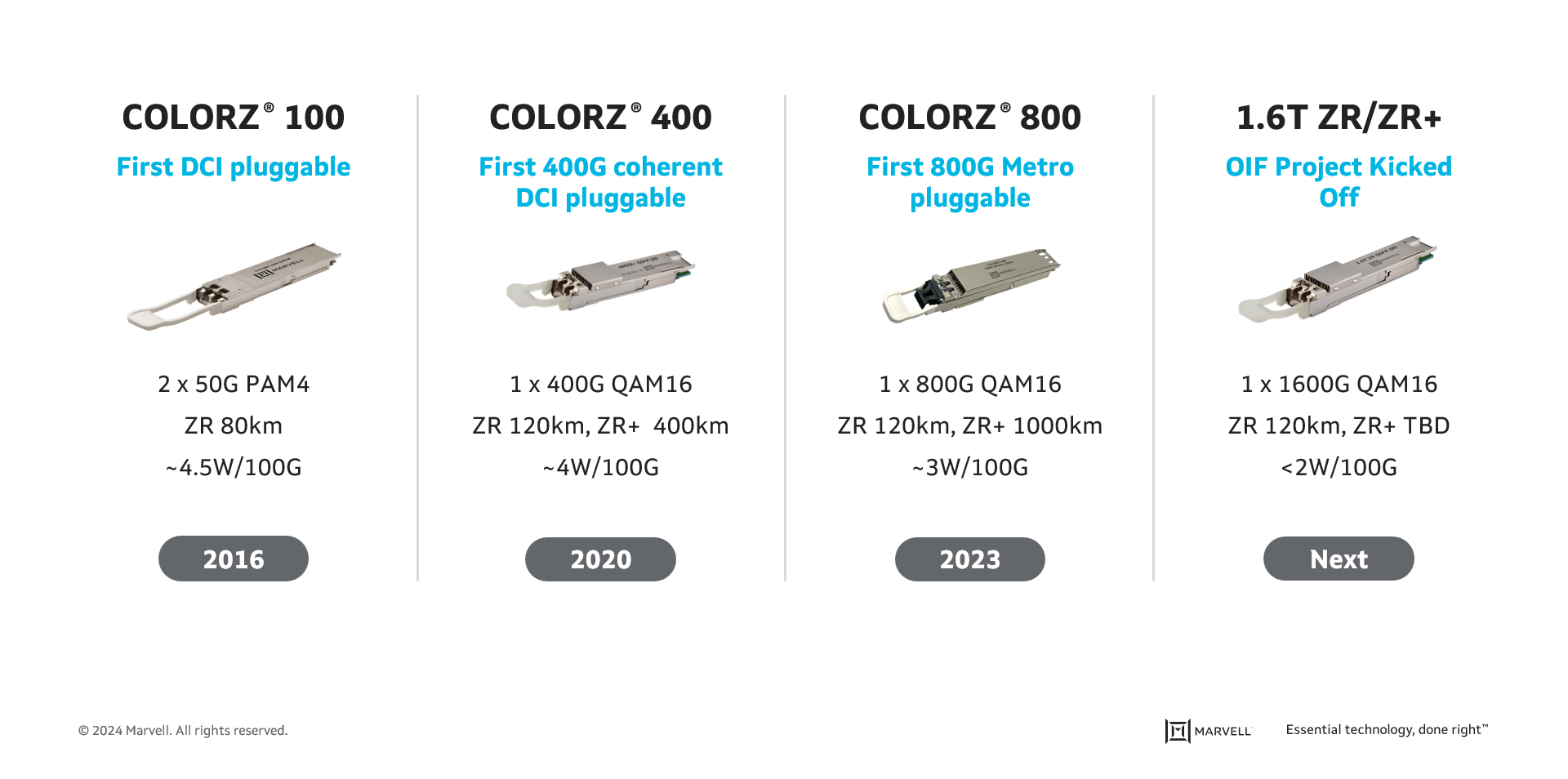

The same holds true of pluggable coherent modules. 100 Gbps coherent modules based on the ZR specification debuted in 2017. The modules could deliver data over approximately 80 kilometers and consumed approximately 4.5 watts per 100G of data delivered. Microsoft became an early adopter and used the modules to build a mesh of metro data centers.

Fast forward to 2020. Power per 100G dropped to 4W and distance exploded: 120-kilometer connections became possible with modules based on the ZR standard and 400-kilometer connections with the ZR+ standard. (An organization called OIF maintains the ZR standard. ZR+ is controlled by OpenROADM. Module makers often make both varieties. The main difference between the two is the amplifier: the DSPs, number of channels and form factors are the same.)
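The generational figures quoted above can be put side by side with a little arithmetic. The following is a minimal sketch in Python, not a vendor calculation: the function name `module_power_watts` is hypothetical, and it assumes total module power scales linearly with capacity at the quoted watts-per-100G rate and that the reach figures are in kilometers.

```python
def module_power_watts(capacity_gbps: float, watts_per_100g: float) -> float:
    """Estimate total module power, assuming linear scaling with capacity."""
    return capacity_gbps / 100 * watts_per_100g


# Figures quoted in the text: 100G ZR (2017) and 400G ZR/ZR+ (2020).
power_2017 = module_power_watts(100, 4.5)   # one 100G module
power_2020 = module_power_watts(400, 4.0)   # one 400G module

# Relative drop in power per 100G of data delivered.
power_saving = (4.5 - 4.0) / 4.5

# Reach relative to the roughly 80 km of the first generation.
reach_gain_zr = 120 / 80
reach_gain_zr_plus = 400 / 80

print(f"2017 module: {power_2017:.1f} W, 2020 module: {power_2020:.1f} W")
print(f"Power per 100G fell by {power_saving:.1%}")
print(f"Reach grew {reach_gain_zr:.1f}x (ZR) and {reach_gain_zr_plus:.1f}x (ZR+)")
```

Under these assumptions, the per-bit power improvement is modest while the gain in reach is much larger, which matches the emphasis the text places on distance.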

The market responded. 400ZR/ZR+ was adopted more rapidly than any other technology in optical history, according to Cignal AI principal analyst Scott Wilkinson.

“It opened the floodgates to what you could do with coherent technology if you put it in the right form factor,” he said during a recent webinar.

This article is part five in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

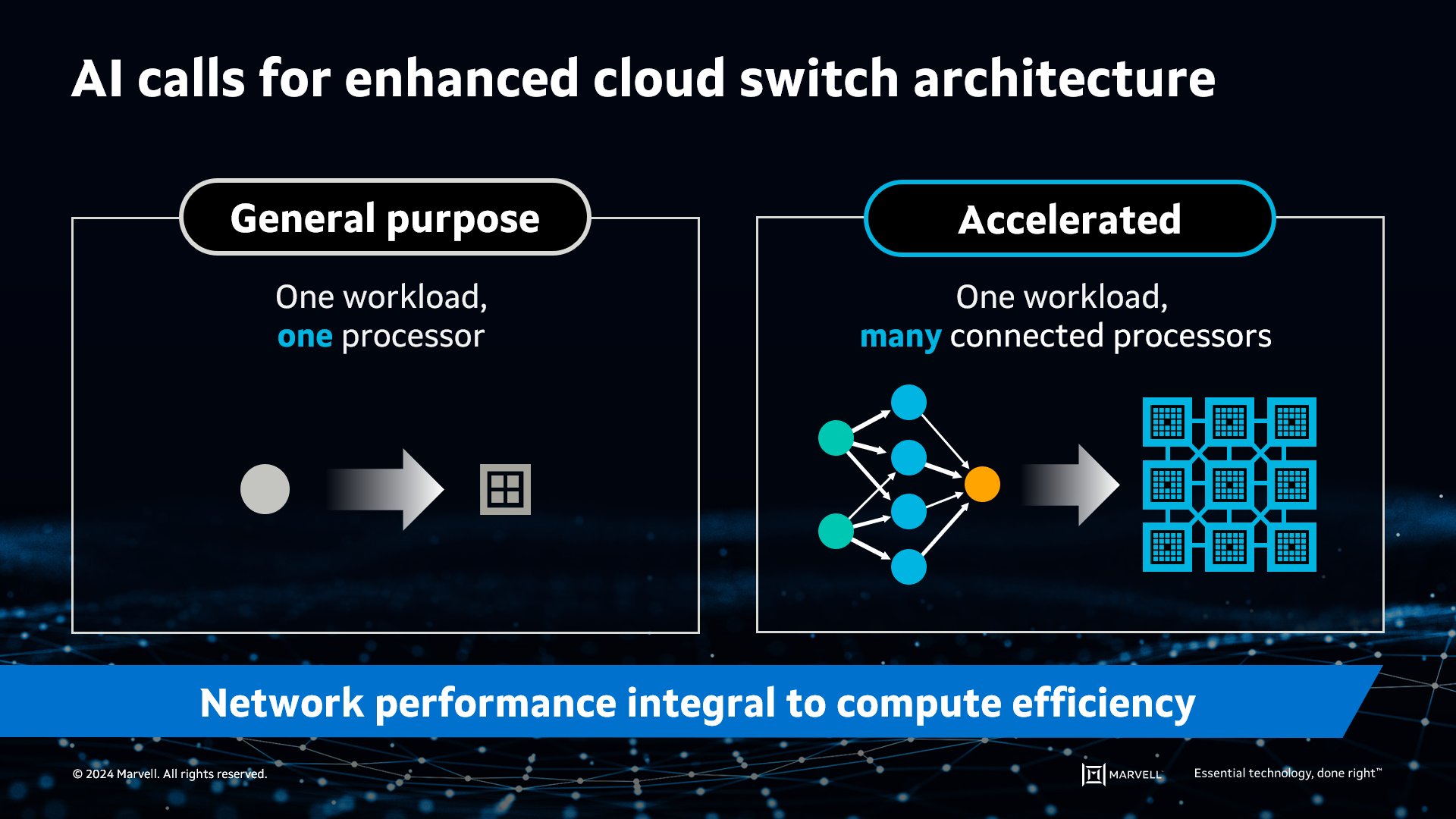

AI has fundamentally changed the network switching landscape. AI requirements are driving foundational shifts in the industry roadmap, expanding the use cases for cloud switching semiconductors and creating opportunities to redefine the terrain.

Here’s how AI will drive cloud switching innovation.

A changing network requires a change in scale

In a modern cloud data center, the compute servers are connected to each other and to the internet through a network of high-bandwidth switches. The approach is like that of the internet itself, allowing operators to build a network of any size while mixing and matching products from various vendors to create a network architecture specific to their needs.

Such a high-bandwidth switching network is critical for AI applications, and a higher-performing network can lead to a more profitable deployment.

However, expanding and extending the general-purpose cloud network to AI isn’t quite as simple as just adding more building blocks. In the world of general-purpose computing, one or more workloads can fit on a single server CPU. In contrast, AI’s large datasets don’t fit on a single processor, whether it’s a CPU, GPU or other accelerated compute device (XPU), making it necessary to distribute the workload across multiple processors. These accelerated processors must function as a single computing element.

AI requires accelerated infrastructure to split workloads across many processors.

This article is part four in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

Silicon photonics—the technology of manufacturing the hundreds of components required for optical communications with CMOS processes—has been employed to produce coherent optical modules for metro and long-distance communications for years. The increasing bandwidth demands brought on by AI are now opening the door for silicon photonics to come inside data centers to enhance their economics and capabilities.

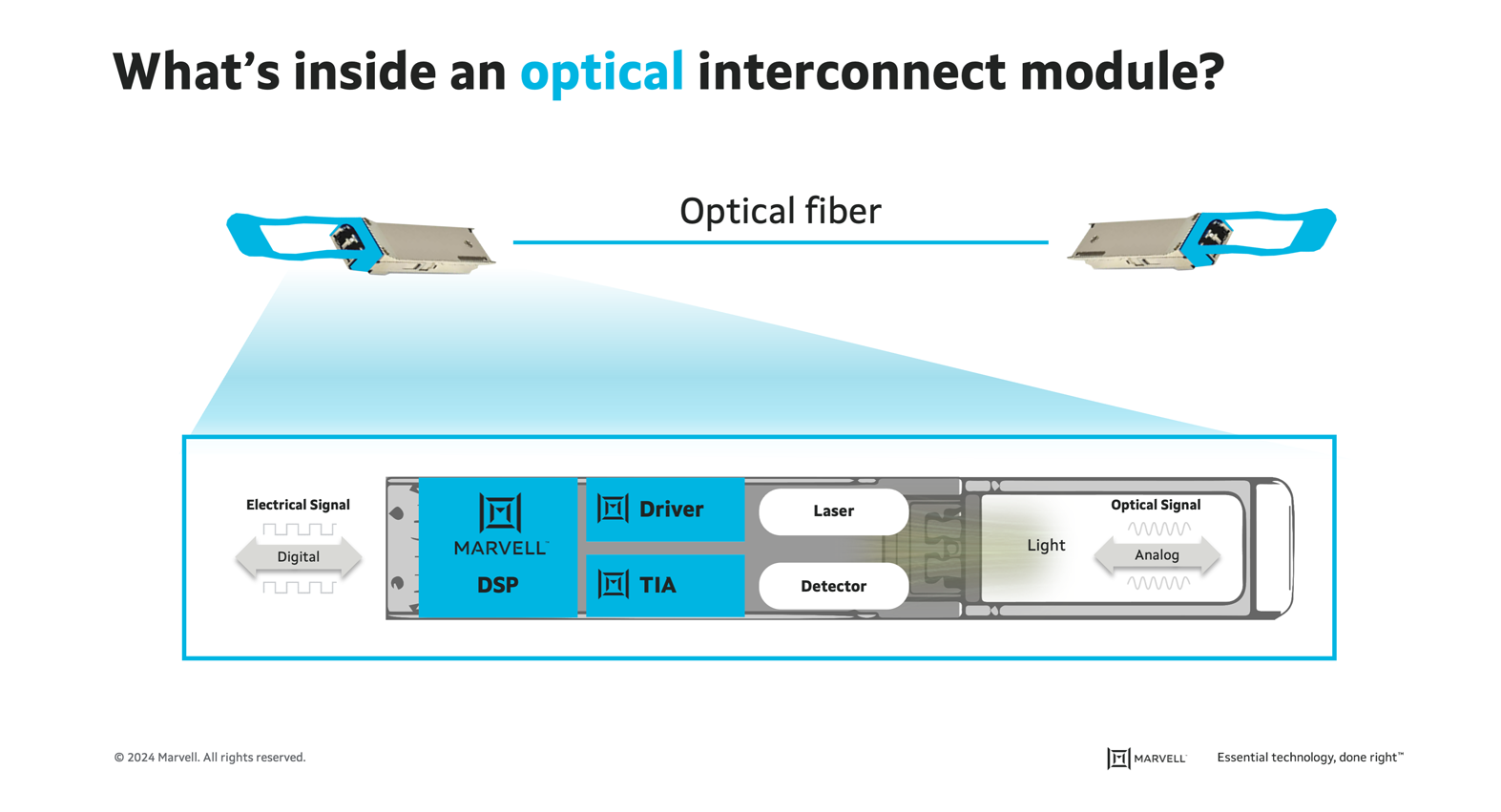

What’s inside an optical module?

As the previous posts in this series noted, critical semiconductors like digital signal processors (DSPs), transimpedance amplifiers (TIAs) and drivers for producing optical modules have steadily improved in terms of performance and efficiency with each new generation of chips thanks to Moore’s Law and other factors.

The same is not true for optics. Modulators, multiplexers, lenses, waveguides and other devices for managing light impulses have historically been delivered as discrete components.

“Optics pretty much uses piece parts,” said Loi Nguyen, executive vice president and general manager of cloud optics at Marvell. “It is very hard to scale.”

Lasers have been particularly challenging, with module developers forced to choose among a wide variety of technologies. Electro-absorption-modulated (EML) lasers are currently the only commercially viable option capable of meeting the 200 Gbps speed necessary to support AI models. Often used for longer links, EML is the laser of choice for 1.6T optical modules. Not only is fab capacity for EML lasers constrained, but the lasers are also incredibly expensive. Together, these factors make it difficult to scale at the rate needed for AI.

This article is part three in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

Twenty-five years ago, network bandwidth ran at 100 Mbps, and it was aspirational to think about moving to 1 Gbps over optical. Today, links are running at 1 Tbps over optical, or 10,000 times faster than the 100 Mbps links of 25 years ago.
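The 10,000x figure follows directly from the two link speeds quoted above. A short Python sketch makes the arithmetic explicit. The doubling-period estimate at the end is an illustration added here, not a claim from the talk: it assumes smooth exponential growth over the 25-year span.

```python
import math

MBPS = 10**6   # bits per second in one megabit per second
TBPS = 10**12  # bits per second in one terabit per second

old_rate = 100 * MBPS  # link speed 25 years ago, per the text
new_rate = 1 * TBPS    # link speed today, per the text

# Ratio of the two rates (integer division is exact for these values).
speedup = new_rate // old_rate

# Assumption: growth was a steady exponential over the whole period.
doublings = math.log2(new_rate / old_rate)
years_per_doubling = 25 / doublings

print(f"Speedup: {speedup:,}x")
print(f"Equivalent to {doublings:.1f} doublings, "
      f"or one doubling about every {years_per_doubling:.1f} years")
```

Seen this way, the jump corresponds to link speeds doubling a little more often than once every two years.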

Another interesting fact: “Every single large language model today runs on compute clusters that are enabled by Marvell’s connectivity silicon,” said Achyut Shah, senior vice president and general manager of Connectivity at Marvell.

To keep ahead of what customers need, Marvell continually seeks to boost the capacity, speed and performance of the digital signal processors (DSPs), transimpedance amplifiers (TIAs), drivers, firmware and other components inside interconnects. It’s an interdisciplinary endeavor involving expertise in high-frequency analog, mixed-signal, digital, firmware, software and other technologies. The following is a map of the different components and challenges shaping the future of interconnects and how that future will shape AI.

Inside the Data Center

From a high level, optical interconnects perform the task their name implies: they deliver data from one place to another while keeping errors from creeping in during transmission. Another important task, however, is enabling data center operators to scale quickly and reliably.

“When our customers deploy networks, they don’t start deploying hundreds or thousands at a time,” said Shah. “They have these massive data center clusters—tens of thousands, hundreds of thousands and millions of (computing) units—that all need to work and come up at the exact same time. These are at multiple locations, across different data centers. The DSP helps ensure that they don’t have to fine tune every link by hand.”