By Todd Owens, Field Marketing Director, Marvell

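Marvell completed its acquisition of Cavium on July 6, 2018, and the integration is well under way. Cavium will be fully integrated into Marvell. As part of Marvell, we share a mission to develop and deliver semiconductor solutions that process, move, store and protect the world's data faster and more securely. The combination of the two companies strengthens our position as an infrastructure powerhouse, serving customers in cloud/data center, enterprise/campus, service provider, SMB/SOHO, industrial and automotive markets.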

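When it comes to doing business with HPE, the first thing you need to know is that it is business as usual. The partners you worked with on I/O and processor technology for HPE before the acquisition remain the same. Marvell is a leading provider of storage technology, including ultra-fast read channels, high-performance processors and transceivers that are found in the vast majority of hard disk drive (HDD) and solid-state drive (SSD) modules used in HPE ProLiant and HPE Storage products today.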

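Our industry-leading QLogic® 8/16/32Gb Fibre Channel and FastLinQ® 10/20/25/50Gb Ethernet I/O technology will continue to provide connectivity for HPE server and storage solutions. These products remain the intelligent I/O choice for HPE and are known for their performance, flexibility and reliability.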

Marvell FastLinQ Ethernet and QLogic Fibre Channel I/O adapter portfolio

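We will continue to offer ThunderX2® Arm® processor technology for HPC servers, such as the HPE Apollo 70 for high-performance computing applications. We will also continue to offer the Ethernet networking technology embedded in HPE servers and storage, as well as the Marvell ASIC technology used for the iLO 5 baseboard management controller (BMC) in all HPE ProLiant and HPE Synergy Gen10 servers.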

iLO 5 for HPE ProLiant Gen10 is deployed on a Marvell SoC

That all sounds great, but what will actually change now that the companies have merged?

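The combined company now has a much broader technology portfolio, which will help HPE deliver outstanding solutions at the edge, in the network and in the data center.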

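Marvell has industry-leading switching technology that covers 1GbE to 100GbE and beyond. This lets us provide connectivity from the IoT edge to the data center and the cloud. Our Intelligent NIC technology offers compression, encryption and more, so customers can analyze network traffic faster and more intelligently than before. Our security solutions and enhanced SoC and processor capabilities will help our HPE design team work with HPE to innovate on next-generation servers and solutions.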

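As the merger progresses, you will notice a shift in branding and changes in where you go to find information. Our product-specific brands will remain, including ThunderX2 for Arm, QLogic for Fibre Channel and FastLinQ for Ethernet, but most other things will move from Cavium to Marvell. Our web resources and email addresses will change as well. For example, you can now reach the HPE microsite at www.marvell.com/hpe, and you will soon be able to contact us at hpesolutions@marvell.com. The collateral you use today will be updated over time. The HPE-specific adapter materials, the HPE Ethernet Quick Reference Guide, the Fibre Channel Quick Reference Guide and the presentation materials have already been updated. Updates will continue over the next few months.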

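In summary, we are getting bigger and better. As one unified team with outstanding technology, we are more focused than ever on helping HPE, its partners and its customers succeed. Contact us now to learn more. Our field contact information is available here. We are excited about this new beginning and about making "I/O and Infrastructure Matter!" every day.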

By Todd Owens, Field Marketing Director, Marvell

Are you considering deploying HPE Cloudline servers in your hyper-scale environment? If you are, be aware that HPE now offers select Cavium™ FastLinQ® 10GbE and 10/25GbE Adapters as options for HPE Cloudline CL2100, CL2200 and CL3150 Gen10 Servers. The adapters supported on the HPE Cloudline servers are shown in Table 1 below.

Faster processors, increases in scale, high-performance NVMe and SSD storage, and the need for better performance and lower latency have started to shift some of the performance bottlenecks from servers and storage to the network itself.

* Source: Demartek findings

Table 2: Advanced Features in Cavium FastLinQ Adapters for HPE Cloudline

Network Partitioning (NPAR) virtualizes the physical port into eight virtual functions on the PCIe bus. This makes a dual-port adapter appear to the host O/S as if it were eight individual NICs. Furthermore, the bandwidth of each virtual function can be fine-tuned in increments of 500Mbps, providing full Quality of Service on each connection. SR-IOV is an additional virtualization offload these adapters support. It moves management of VM-to-VM traffic from the host hypervisor to the adapter, which frees up CPU resources and reduces VM-to-VM latency.
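To make the 500Mbps granularity concrete, here is a minimal planning sketch in Python. It is not a Cavium or HPE tool: the `Partition` class, the `validate_port_plan` helper and the partition names are hypothetical. The rule that the partition caps must not exceed the link speed is an assumption made for illustration, as is applying the limit of eight partitions per physical port.

```python
from dataclasses import dataclass

STEP_MBPS = 500      # bandwidth granularity stated in the text
MAX_PARTITIONS = 8   # assumed limit per physical port, following the text


@dataclass
class Partition:
    name: str        # hypothetical label, for example "mgmt"
    max_mbps: int    # bandwidth cap planned for this partition


def validate_port_plan(partitions, link_mbps):
    """Return a list of problems found in a per-port plan (empty if none)."""
    problems = []
    if len(partitions) > MAX_PARTITIONS:
        problems.append(
            f"{len(partitions)} partitions exceeds the limit of {MAX_PARTITIONS}"
        )
    for p in partitions:
        if p.max_mbps <= 0 or p.max_mbps % STEP_MBPS != 0:
            problems.append(
                f"{p.name}: {p.max_mbps} Mbps is not a positive multiple of {STEP_MBPS}"
            )
    # Assumption: the caps are planned without oversubscribing the link.
    total = sum(p.max_mbps for p in partitions)
    if total > link_mbps:
        problems.append(f"total {total} Mbps exceeds link speed {link_mbps} Mbps")
    return problems


plan = [
    Partition("mgmt", 500),
    Partition("vmotion", 4500),
    Partition("storage", 10000),
    Partition("tenant", 10000),
]
print(validate_port_plan(plan, link_mbps=25000))
```

A plan like this one, with four partitions on a 25GbE port, passes every check, while a cap such as 750 Mbps would be flagged because it is not a multiple of 500.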

Remote Direct Memory Access (RDMA) is an offload that routes I/O traffic directly from the adapter to the host memory. This bypasses the O/S kernel and can improve performance by reducing latency. The Cavium adapters support what is called Universal RDMA, which is the ability to support both RoCEv2 and iWARP protocols concurrently. This provides network administrators more flexibility and choice for low latency solutions built with HPE Cloudline servers.

SmartAN is a Cavium technology available on the 10/25GbE adapters that addresses issues related to bandwidth matching and the need for Forward Error Correction (FEC) when switching between 10GbE and 25GbE connections. For 25GbE connections, either Reed-Solomon FEC (RS-FEC) or Fire Code FEC (FC-FEC) is required to correct bit errors that occur at higher bandwidths. For the details behind SmartAN technology you can refer to the Marvell technology brief here.

For simplified management, Cavium provides a suite of utilities for configuring and monitoring the adapters that works across all the popular O/S environments, including Microsoft Windows Server, VMware and Linux. Cavium’s unified management suite includes QCC GUI, CLI and vCenter plugins, as well as PowerShell Cmdlets for scripting configuration commands across multiple servers. Cavium’s unified management utilities can be downloaded from www.cavium.com.

By Todd Owens, Field Marketing Director, Marvell

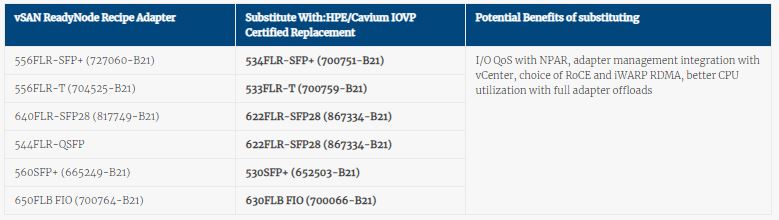

Ok, so we know what substitutions we can make in these vSAN storage solutions. What are the benefits to the customer for making this change?

There are several benefits to the HPE/Cavium technology compared to the other adapter offerings.

By Todd Owens, Field Marketing Director, Marvell

Like a kid in a candy store, choose I/O wisely.

But with so many choices, the decision was often confusing. With time running out, you’d usually just grab the name-brand candy you were familiar with. But what were you missing out on?

There are lots of choices and it takes time to understand all the differences. As a result, system architects in many cases just fall back to the legacy name-brand adapter they have become familiar with. Is this the best option for their client though? Not always.

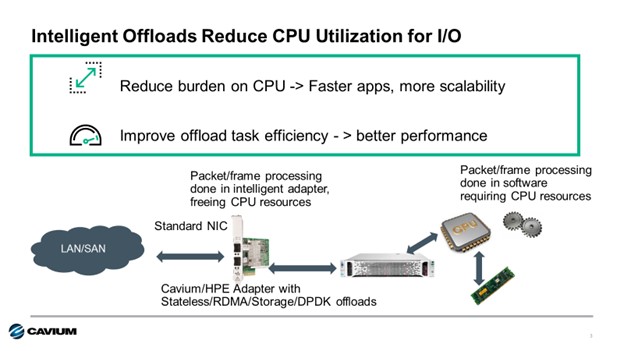

These adapters utilize a variety of offload technology and other capabilities to take on tasks associated with I/O processing that are typically done in software by the CPU when using a basic “standard” Ethernet adapter. Intelligent offloads include things like SR-IOV, RDMA, iSCSI, FCoE or DPDK. Each of these offloads the work to the adapter and, in many cases, bypasses the O/S kernel, speeding up I/O transactions and increasing performance.

Another reason is to mitigate the performance impact of the Spectre and Meltdown fixes now required for x86 server processors. The side-channel vulnerabilities known as Spectre and Meltdown in x86 processors required kernel patches from the CPU vendor. These patches can significantly reduce CPU performance. For example, Red Hat reported the impact could be as much as a 19% performance degradation. That’s a big performance hit.
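To put a 19% hit in perspective, the short Python sketch below estimates how many servers would be needed to deliver the same throughput after the loss. It assumes a purely CPU-bound workload that scales linearly across servers, which real deployments rarely match exactly. The `servers_needed` helper and the 100-server baseline are hypothetical values chosen for illustration.

```python
import math


def servers_needed(baseline_servers, cpu_loss_fraction):
    """Servers required to match baseline throughput after a CPU performance loss.

    Assumes throughput is proportional to available CPU performance.
    """
    remaining = 1.0 - cpu_loss_fraction   # fraction of CPU performance left
    return math.ceil(baseline_servers / remaining)


# Hypothetical fleet of 100 servers at the worst-case figure quoted above.
print(servers_needed(100, 0.19))
```

Under these assumptions, a 19% loss leaves 81% of the original CPU performance, so the fleet has to grow by a factor of about 1/0.81, or roughly 23%, to compensate. Moving work off the CPU and onto the adapter is one way to reduce that gap.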

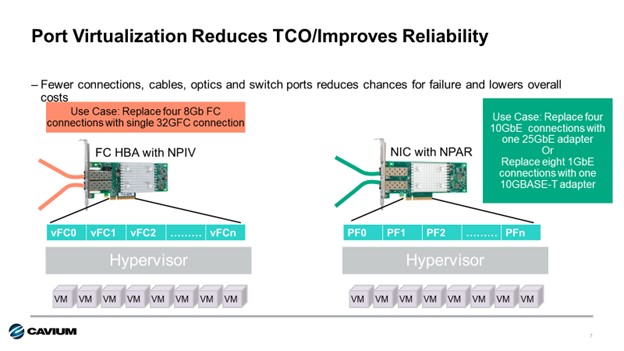

Some intelligent I/O adapters have port virtualization capabilities. Cavium Fibre Channel HBAs implement N-port ID Virtualization, or NPIV, to allow a single Fibre Channel port to appear as multiple virtual Fibre Channel adapters to the hypervisor. For Cavium FastLinQ Ethernet Adapters, Network Partitioning, or NPAR, is utilized to provide similar capability for Ethernet connections. Up to eight independent connections per port can be presented to the host O/S, making a single dual-port adapter look like 16 NICs to the operating system. Each virtual connection can be set to specific bandwidth and priority settings, providing full quality of service per connection.

At HPE, there are more than fifty 10Gb to 100Gb Ethernet adapters to choose from across the HPE ProLiant, Apollo, BladeSystem and HPE Synergy server portfolios. That’s a lot of documentation to read and compare. Cavium is proud to be a supplier of eighteen of these Ethernet adapters, and we’ve created a handy quick reference guide to highlight which of these offloads and virtualization features are supported on which adapters. View the complete Cavium HPE Ethernet Adapter Quick Reference guide here.

For Fibre Channel HBAs, there are fewer choices (only nineteen), but we make a quick reference document available for our HBA offerings at HPE as well. You can view the Fibre Channel HBA Quick Reference here.